James Vicary experiment

In the 1950s, James Vicary set out to test the effectiveness of subliminal perception by projecting hidden messages during the frames of a movie. According to his reported results, the messages “eat popcorn” and “drink Coca-Cola” increased sales at the cinema by 57.5% and 18.1% respectively *

* Although Vicary later admitted that his experiment had been fabricated, in 2006 Johan Karremans, Jasper Claus and Wolfgang Stroebe, from the University of Utrecht, conducted a similar study that produced genuine results.

How could frames be slipped into a video? How far could this technique reach if used for propaganda and brainwashing? Can artificial intelligence insert them into videos automatically? Could an indoctrination machine turn a person with logical, analytical thinking into a sympathizer of an antiquated Argentine movement?

Details on subliminal perception

As early as the 5th century BC, the Greeks used rhetoric (ars bene dicendi, “the art of speaking well”) to influence people. By inserting fragments into sentences and carefully choosing words and verb tenses, a speaker could steer an audience toward accepting or rejecting an idea. With technological advances, audio and video joined rhetoric as tools of influence. In the case of video, the brain can consciously process only a limited number of frames per second. James Vicary’s hypothesis was that frames too brief to be consciously perceived could still influence the unconscious mind.
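To put numbers on that limit: at a constant frame rate, each frame stays on screen for 1000 / fps milliseconds. The short sketch below computes this for a few common rates. The list of rates is only illustrative: 24 fps for cinema film, and roughly 30 frames or 60 interlaced fields per second for NTSC video, which is the kind of 720x480 interlaced signal the composite output is configured for later on.

```python
def frame_duration_ms(fps: float) -> float:
    """Time one frame (or interlaced field) stays on screen, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Illustrative rates: cinema film, NTSC frames, NTSC interlaced fields.
for label, fps in [("film", 24.0), ("NTSC frames", 29.97), ("NTSC fields", 59.94)]:
    print(f"{label:<12} {fps:6.2f} fps -> {frame_duration_ms(fps):5.2f} ms")
```

A single inserted frame therefore lasts about 42 ms in a film and about 17 ms per field on an interlaced NTSC screen.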

What is a tachistoscope?

The tachistoscope (from the Greek τάχιστος, ‘very fast’, and σκοπέω, ‘to look’) is a device that presents luminous images for a very short time, in order to study and measure certain aspects of perception and memory. Here the term is used loosely, since the machine will have many additional functions.

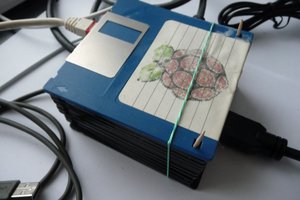

Design of the tachistoscope

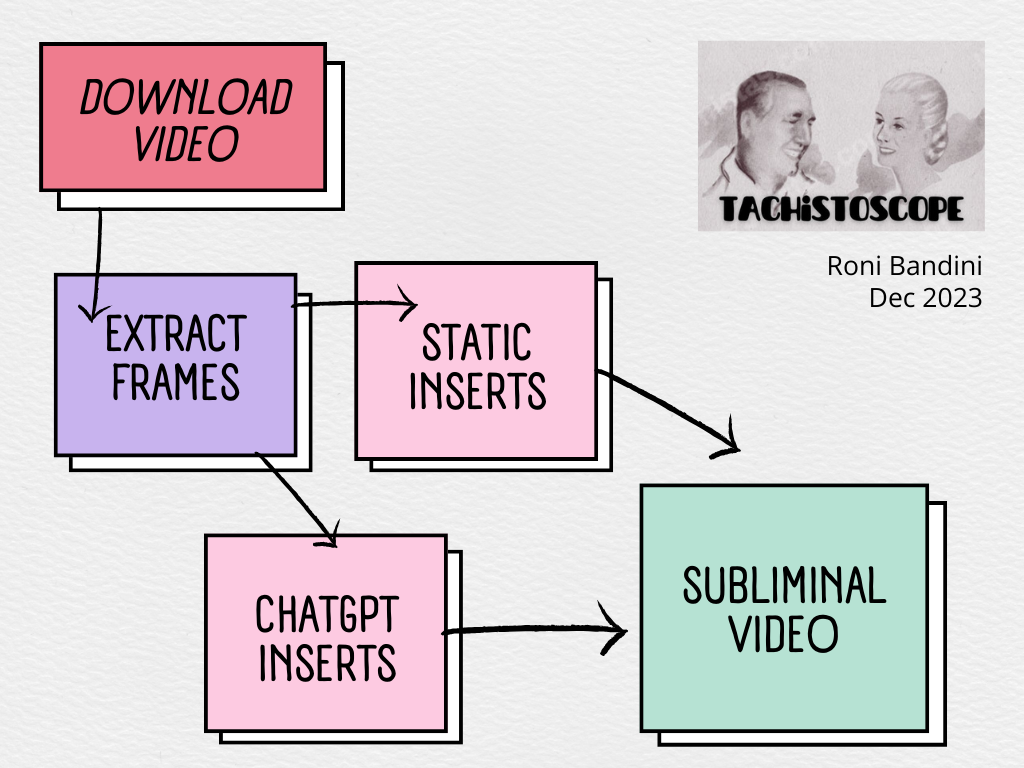

Although it is possible to download videos manually and insert the subliminal frames with a video editor, the idea is to automate the whole procedure. The machine will therefore have a WiFi connection and will search for videos on YouTube and download them automatically. It will then create a new version of each video with subliminal frames inserted. This new video will be played on a CRT screen while the machine counts the total projection time. The machine can also generate the subliminal frames automatically with ChatGPT.
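The frame-insertion step can be sketched without any video library. Treat the decoded video as a sequence of frames and splice the hidden frame in at a fixed interval. This is a minimal sketch assuming a fixed interval, not the author's implementation. The name `insert_subliminal` and its `every` parameter are hypothetical. In a real pipeline the frames would come from a decoder (OpenCV or ffmpeg, for example) rather than from a list of strings.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")  # any frame representation: image array, file name, ...


def insert_subliminal(frames: Sequence[Frame], hidden: Frame, every: int) -> List[Frame]:
    """Return a new frame list with `hidden` inserted after every `every` frames.

    Each hidden frame occupies exactly one frame slot, so it is on screen
    for a single frame period (about 33 ms at ~30 fps).
    """
    if every < 1:
        raise ValueError("every must be >= 1")
    out: List[Frame] = []
    for i, frame in enumerate(frames, start=1):
        out.append(frame)
        if i % every == 0:
            out.append(hidden)  # splice in the subliminal frame
    return out
```

For example, `insert_subliminal(["f1", "f2", "f3", "f4"], "X", 2)` yields `["f1", "f2", "X", "f3", "f4", "X"]`. Inserting frames makes the video slightly longer than its audio track. Replacing an existing frame instead of inserting a new one would keep audio and video in sync.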

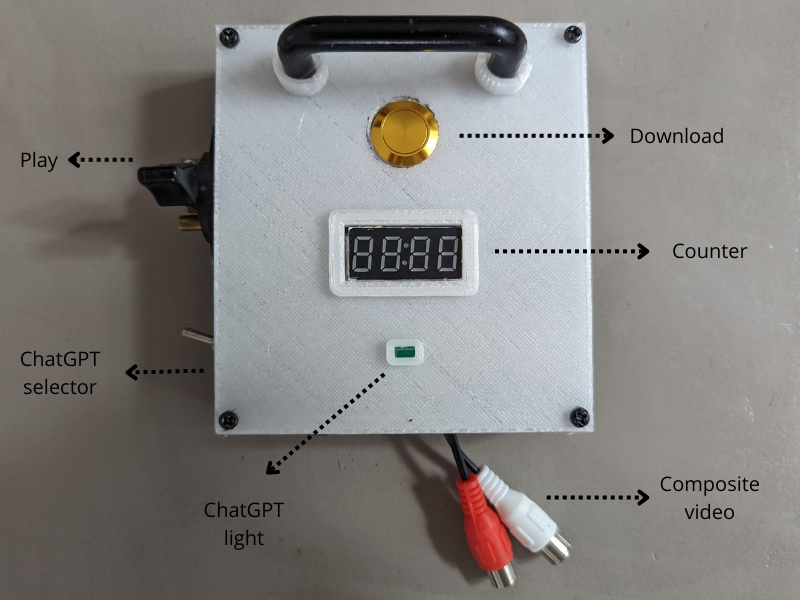

Parts

- Raspberry Pi 2 with a USB WiFi dongle (a newer Raspberry Pi would perform better, but I wanted to use a CRT monitor and this model has composite video output on its 3.5 mm jack)

- 4-digit 7-segment display

- 2 x selector switch

- 1 x push button

- 3D-printed enclosure

- 3.5 mm jack to RCA cable

- CCTV CRT monitor

- 3 A power supply

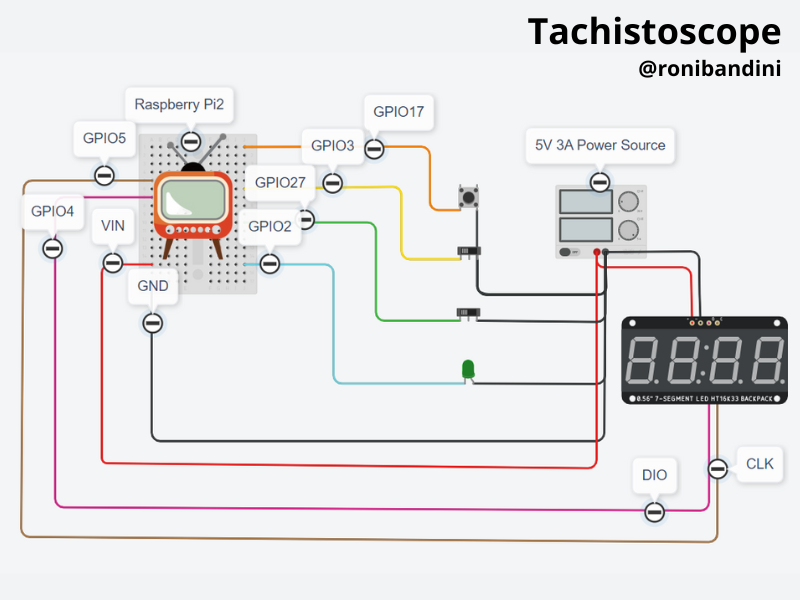

Circuit

- 7-segment display: GND and VCC to the Raspberry Pi's GND and power pins; CLK pin to GPIO5, DIO pin to GPIO4

- LED to GPIO2 and GND

- Play switch to GPIO3 and GND

- Download button to GPIO17 and GND.

- ChatGPT switch to GPIO27 and GND.
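The wiring above can be captured as a pin map, plus a helper that turns raw pin levels into control states. This is a sketch, not the project's code. The names `PINS`, `INPUTS` and `read_controls` are hypothetical. It also assumes pull-up resistors are enabled on the input pins, so a closed switch, which connects its pin to GND, reads as 0. The pin reader is passed in as a function, so the logic can be tried away from the Pi.

```python
from typing import Callable, Dict

# BCM GPIO numbers, taken from the wiring list above.
PINS: Dict[str, int] = {
    "display_clk": 5,
    "display_dio": 4,
    "led": 2,
    "play_switch": 3,
    "download_button": 17,
    "chatgpt_switch": 27,
}

# Controls wired between a GPIO pin and GND.
INPUTS = ("play_switch", "download_button", "chatgpt_switch")


def read_controls(read_pin: Callable[[int], int]) -> Dict[str, bool]:
    """Return True for each control whose contact is currently closed.

    Assumes pull-ups are enabled on the input pins, so a closed contact
    pulls the pin to GND and reads as 0 (LOW).
    """
    return {name: read_pin(PINS[name]) == 0 for name in INPUTS}
```

On the Raspberry Pi, the RPi.GPIO library could supply the reader. Call `GPIO.setmode(GPIO.BCM)`, then `GPIO.setup(pin, GPIO.IN, pull_up_down=GPIO.PUD_UP)` for each input pin, then `read_controls(GPIO.input)`.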

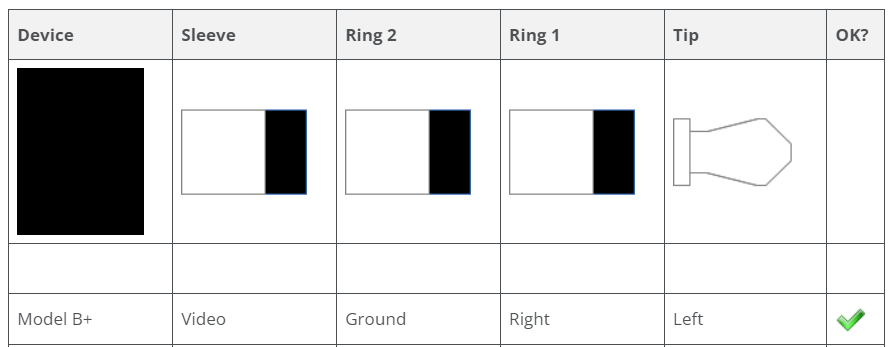

For the composite video jack, identify the ground and video signal contacts according to the following diagram.

Software setup

I installed Raspberry Pi OS Desktop (32-bit) and added the following libraries:

sudo pip3 install openai

pip install raspberrypi-tm1637

python -m pip install pytube

pip3 install youtube-search-python
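With these two libraries installed, the search-and-download step could look roughly like the sketch below. It is not the author's code. The helper names and the five-minute default limit are hypothetical. It also assumes the dictionary returned by `VideosSearch(...).result()` holds a `"result"` list whose entries carry `"duration"` (e.g. `"3:45"`) and `"link"` fields, as in youtube-search-python's documented output. The library imports are placed inside the download function so that the pure helpers can be tested without network access.

```python
from typing import Optional


def duration_to_seconds(text: str) -> int:
    """Convert a duration such as '3:45' or '1:02:03' to seconds."""
    seconds = 0
    for part in text.split(":"):
        seconds = seconds * 60 + int(part)  # raises ValueError on bad input
    return seconds


def pick_video(search_result: dict, max_seconds: int) -> Optional[str]:
    """Return the link of the first search result no longer than max_seconds."""
    for item in search_result.get("result", []):
        try:
            seconds = duration_to_seconds(item.get("duration") or "")
        except ValueError:
            continue  # missing or non-numeric duration (e.g. live streams)
        if seconds <= max_seconds:
            return item.get("link")
    return None


def search_and_download(query: str, max_seconds: int = 300,
                        out_dir: str = ".") -> Optional[str]:
    """Search YouTube, download the first short-enough video, return its path."""
    # Imported here so the helpers above work without these packages installed.
    from youtubesearchpython import VideosSearch
    from pytube import YouTube

    link = pick_video(VideosSearch(query, limit=10).result(), max_seconds)
    if link is None:
        return None
    # Progressive streams contain audio and video in one MP4 file; the lowest
    # resolution is plenty for a 720x480 composite signal.
    stream = (YouTube(link).streams
              .filter(progressive=True, file_extension="mp4")
              .order_by("resolution")
              .asc()
              .first())
    return stream.download(output_path=out_dir) if stream else None
```

The download deliberately picks the lowest-resolution progressive stream. A CRT fed over composite cannot show more detail anyway, and a smaller file is faster to download and process on a Raspberry Pi 2.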

Then I configured the composite video output:

sudo nano /boot/firmware/config.txt

enable_tvout=1

sdtv_mode=1

sdtv_disable_colourburst=1

dtoverlay=vc4-kms-v3d,cma-384,composite=1

video=Composite-1:720x480@60ie


dtparam=audio=on

I also set the program to start automatically, run in full-screen mode and hide the mouse pointer.

I signed up for the OpenAI API and copied an API key from https://platform.openai.com/api-keys
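The write-up does not say whether ChatGPT produces text or images for the subliminal frames. The sketch below assumes the frames are short text slogans. It asks the Chat Completions endpoint of the official `openai` Python package (v1 or later) for a slogan, then cleans the reply so it fits on a frame. The prompt wording, the helper names and the `gpt-4o-mini` model are assumptions, not the author's choices. The pure helpers can be tested without an API key.

```python
import re
from typing import Dict, List


def build_messages(theme: str) -> List[Dict[str, str]]:
    """Chat messages asking the model for one very short slogan about `theme`."""
    return [
        {"role": "system", "content": "You write very short advertising slogans."},
        {"role": "user",
         "content": f"Write one slogan of at most four words about {theme}."},
    ]


def clean_slogan(text: str, max_words: int = 4) -> str:
    """Drop quotes and punctuation, keep at most `max_words` words, uppercase them."""
    words = re.findall(r"[\w'-]+", text)
    return " ".join(words[:max_words]).upper()


def generate_slogan(theme: str, model: str = "gpt-4o-mini") -> str:
    """Ask the OpenAI API for a slogan to render as a subliminal frame."""
    # Needs the openai package (v1 or later); OpenAI() reads OPENAI_API_KEY.
    from openai import OpenAI

    client = OpenAI()
    response = client.chat.completions.create(
        model=model, messages=build_messages(theme))
    return clean_slogan(response.choices[0].message.content or "")
```

Keeping the slogan to a few uppercase words is a design choice. A message shown for a single frame on a low-resolution CRT has to be short and large to register at all.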

Programming the machine

The source code is written in Python. At startup it reads a stats.csv file containing data from previous projections and shows it on the 7-segment display....
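The format of stats.csv is not shown here, so the following is only a sketch under a hypothetical format: one row per projection, with a `seconds` column holding the playback time. It sums the previous projections and splits the total into two 2-digit numbers for an MM:SS reading on the 4-digit display. The column name and both helper names are assumptions.

```python
import csv
from typing import Iterable, Tuple


def total_seconds(stats_lines: Iterable[str], column: str = "seconds") -> int:
    """Sum the playback time of previous projections stored in a CSV."""
    reader = csv.DictReader(stats_lines)
    return sum(int(float(row[column])) for row in reader if row.get(column))


def to_display(seconds: int) -> Tuple[int, int]:
    """Split a duration into (minutes, seconds) for a 4-digit MM:SS display."""
    minutes, secs = divmod(max(seconds, 0), 60)
    if minutes > 99:
        return 99, 59  # saturate: four digits cannot show 100 minutes or more
    return minutes, secs
```

Usage would be along the lines of `with open("stats.csv", newline="") as f: mm, ss = to_display(total_seconds(f))`. With the raspberrypi-tm1637 library, the pair could then be sent to a display object created with `clk=5, dio=4`, for example through its two-number method with the colon lit. Check the library's README for the exact call.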

Roni Bandini