To compare and optimize body movements, clothing, backgrounds, illumination etc. (also across different players) for the most precise pose estimation, it is desirable to have a quality measure for the pose estimation results. However, such a measure is not straightforward to obtain, since ground truth data is typically missing. In this log entry I present a procedure to obtain a quality measure without the need for ground truth data.

The concept relies on the fact that the input data to the pose estimation is always a video of contiguous movements, sampled at a high frame rate (typically 100 fps). For each keypoint, it is therefore valid to apply a temporal low-pass filter (I use a Gaussian filter) to the estimated positions. The low-pass filtering increases the precision of the estimated keypoint coordinates, because the true movement changes only slowly from frame to frame while the estimation noise does not. The difference between the raw pose estimations and the low-pass filtered data can then be regarded as a pose estimation error. This difference is a vector (x, y); its length is called the residual here and serves as a quality measure. The larger the residual, the lower the precision of the individual pose estimation result.
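The procedure can be sketched in a few lines of Python. This is only a minimal illustration, not the code used for the recordings in this log: the function names, the filter width `sigma` (in frames) and the reflecting edge handling are assumptions, since none of them are specified above.

```python
import numpy as np

def gaussian_kernel(sigma, truncate=4.0):
    """Normalized 1-D Gaussian kernel; sigma is given in frames (assumed value)."""
    radius = int(truncate * sigma + 0.5)
    t = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (t / sigma) ** 2)
    return kernel / kernel.sum()

def lowpass(track, sigma):
    """Smooth a (n_frames, 2) keypoint track along the time axis."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    # Reflect the track at both ends so the output keeps n_frames samples.
    padded = np.pad(track, ((radius, radius), (0, 0)), mode="reflect")
    columns = [np.convolve(padded[:, i], kernel, mode="valid")
               for i in range(track.shape[1])]
    return np.stack(columns, axis=1)

def residual(track, sigma=5.0):
    """Length of the (x, y) difference between raw and filtered positions."""
    track = np.asarray(track, dtype=float)
    return np.linalg.norm(track - lowpass(track, sigma), axis=1)
```

Calling `residual(track)` on a `(500, 2)` array of raw coordinates for one keypoint returns one residual value per frame. A larger `sigma` smooths more strongly, but it also starts to count fast real movements as error, so the value has to be matched to the recorded motion.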

Video 1 shows an example for the RIGHT_WRIST keypoint. In the image, blue dots indicate individual pose estimation results for the 500 frames of the 5 second recording. The red dot shows the pose estimation result for the current frame and the red cursor in the plots on the right indicates the current frame in the line plots of the row coordinate and column coordinate, respectively. The orange curves in the line plots show the low-pass filtered data. The line plot on the lower right shows the residual for each frame. As an example, a threshold at 3 pixels is shown (yellow horizontal line). All residuals above this threshold are highlighted with a yellow circle. The corresponding coordinates are also highlighted with yellow circles in the camera image.

Video 1: Tracking results for keypoint RIGHT_WRIST

It can be seen that the points with high residuals concentrate at a specific position, where the camera perspective is such that the RIGHT_WRIST coordinate almost coincides with the RIGHT_ELBOW coordinate.
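The highlighting of frames above the 3 pixel threshold in Video 1 amounts to a simple comparison. A minimal sketch, assuming the per-frame residuals are already available as a 1-D array (the function name `flag_outliers` is hypothetical):

```python
import numpy as np

def flag_outliers(residuals, threshold_px=3.0):
    """Return the frame indices whose residual exceeds the threshold."""
    residuals = np.asarray(residuals, dtype=float)
    return np.flatnonzero(residuals > threshold_px)
```

The returned indices can be used to mark the corresponding points both in the residual plot and in the camera image.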

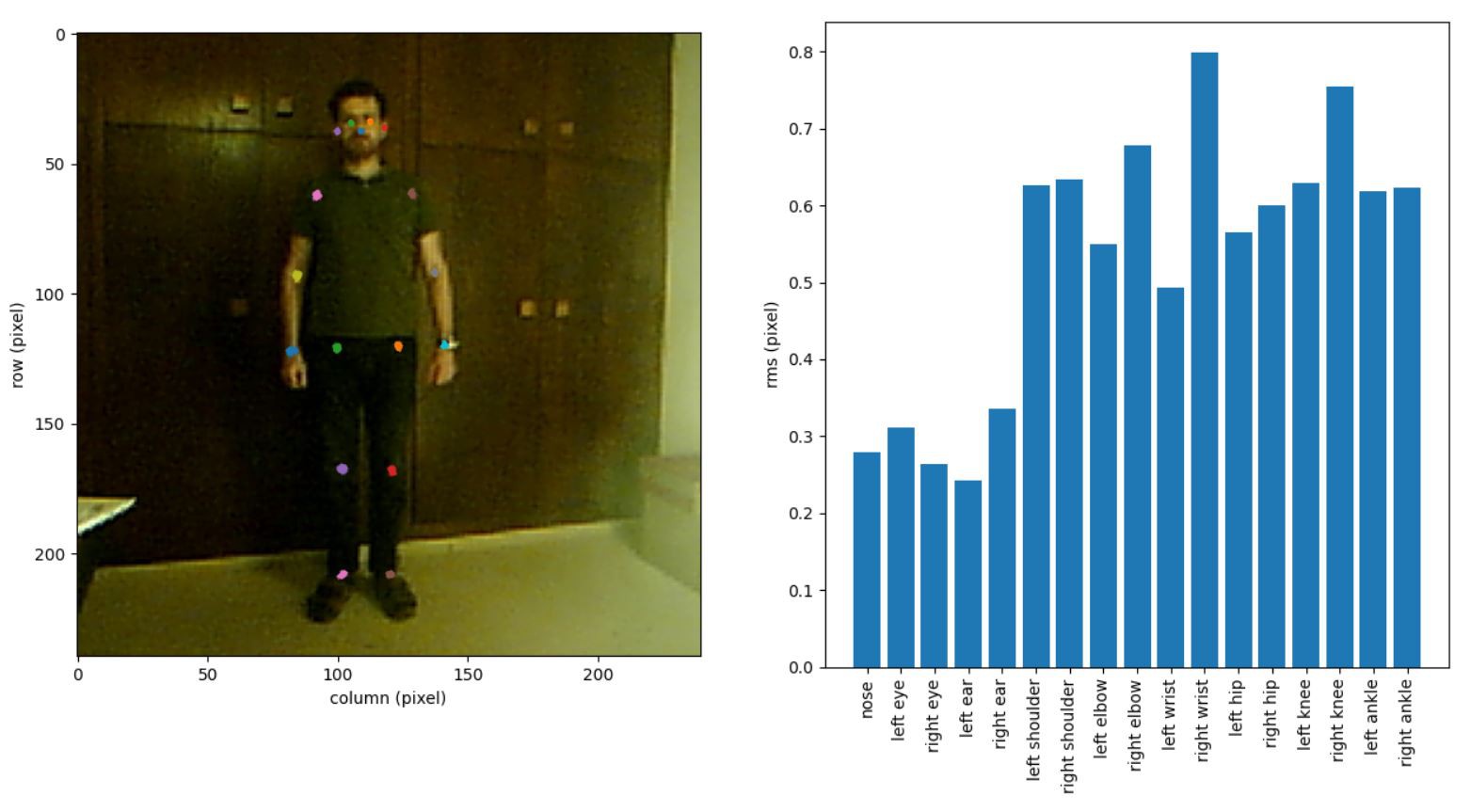

To get an impression of the lower bound of the residual, I recorded a 5 second video of a person standing still and computed the RMS residual over the full 500 frames for each keypoint. The results are shown in Figure 1. It can be seen that the typical RMS value of the residual is below one pixel.

Figure 1: Tracking results for a still person
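The per-keypoint RMS value can be computed as sketched below. The array layout (one row per frame, one column per keypoint) and the function name are assumptions made for this illustration.

```python
import numpy as np

def rms_per_keypoint(residuals, names):
    """RMS residual over all frames, one value per keypoint.

    residuals: array of shape (n_frames, n_keypoints), in pixels.
    names:     keypoint names in column order.
    """
    residuals = np.asarray(residuals, dtype=float)
    rms = np.sqrt(np.mean(residuals ** 2, axis=0))
    return dict(zip(names, rms.tolist()))
```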

Video 2 shows an example for the LEFT_ANKLE keypoint. This time the image is slightly zoomed, as can be seen from the axes limits. Again, the points with high residuals concentrate at a certain position, here the uppermost position of the ankle during the movement. Admittedly, the contrast between the foot and the background is very low there.

Video 2: Tracking results for keypoint LEFT_ANKLE

I think the proposed procedure is helpful for identifying situations where the pose estimation precision is lower than usual. It should be possible to provide a live display of this information to the player.
