In the previous logs I gathered the data, pre-processed it, built a network, trained it, and did my best to tune the hyperparameters.

Now it is time for the fun part: converting the model to something my Arduino can understand and eventually deploying it on the microcontroller.

Unfortunately, we can't run full TensorFlow on our target, only TensorFlow Lite. Before we can use it, we need to convert the model with the TensorFlow Lite Converter's Python API. The converter takes the model and writes it back as a FlatBuffer, a space-efficient format. It can also apply optimizations such as quantization.

A model's weights and biases are typically stored as 32-bit floats. On top of that, after normalizing my input image, the pixel values range from -1 to 1. All of this leads to costly high-precision calculations. With quantization we can reduce the weights and biases to 8-bit integers, or go one step further and convert the inputs (pixel values) and outputs (predictions) as well.

Surprisingly enough, that optimization comes with just a minimal loss in accuracy.
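
To make the idea concrete, here is a minimal sketch of the arithmetic behind 8-bit quantization, assuming the usual affine scheme (real value ≈ (integer - zero point) × scale). The helper names `quant_params`, `quantize` and `dequantize` are made up for illustration; the converter picks its own scale and zero point during calibration, so the exact values in a real model may differ.

```python
def quant_params(min_val, max_val, qmin=-128, qmax=127):
    """Return (scale, zero_point) mapping [min_val, max_val] onto [qmin, qmax]."""
    scale = (max_val - min_val) / (qmax - qmin)
    zero_point = round(qmin - min_val / scale)
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Map a float to the nearest int8 code, clamped to the valid range."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate float value from an int8 code."""
    return (q - zero_point) * scale


# Pixel values normalized to [-1, 1], as in this project.
scale, zero_point = quant_params(-1.0, 1.0)
for x in (-1.0, -0.5, 0.0, 0.25, 1.0):
    q = quantize(x, scale, zero_point)
    print(x, q, dequantize(q, scale, zero_point))
```

Each value comes back within one quantization step (about 0.008 here) of the original, which gives some intuition for why the accuracy loss can stay small.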

    import tensorflow as tf

    # Convert the model.
    converter = tf.lite.TFLiteConverter.from_saved_model("model")

    # Quantization: calibrate with representative data and force full int8.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_data_gen
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.int8
    converter.inference_output_type = tf.int8

    tflite_model = converter.convert()

Note that only int8 is available now in TensorFlow Lite (even though uint8 is available in the API). It took me quite some time to understand that it was not a problem with my code.

In the snippet above you can see a representative_dataset: a dataset that should represent the full range of possible input values. Even though I came across many tutorials, it still caused me some trouble, mainly because the API expects the images as float32 (even if you trained on grayscale int8 images in the range -128 to 127).

    import os

    import cv2
    import numpy as np

    def representative_data_gen():
        for file in os.listdir('representative'):
            # open as grayscale, resize, convert to a float32 numpy array
            image = cv2.imread(os.path.join('representative', file), cv2.IMREAD_GRAYSCALE)
            image = cv2.resize(image, (IMG_WIDTH, IMG_HEIGHT))
            image = image.astype(np.float32)
            # image -= 128
            # scale pixels from [0, 255] to [-1, 1]
            image = image / 127.5 - 1
            # add batch and channel dimensions: (1, height, width, 1)
            image = np.expand_dims(image, 0)
            image = np.expand_dims(image, 3)
            yield [image]
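
Since the shape and dtype of each sample were what tripped me up, a quick sanity check can help before running the converter. The sketch below is only an illustration: `to_model_input` is a hypothetical helper, the 96x96 size is an assumption (use your model's input size), and a synthetic image stands in for a file from the 'representative' folder.

```python
import numpy as np

IMG_WIDTH, IMG_HEIGHT = 96, 96  # hypothetical size, not taken from the project


def to_model_input(gray_u8):
    """Turn an HxW uint8 grayscale image into a float32 batch of shape (1, H, W, 1)."""
    image = gray_u8.astype(np.float32) / 127.5 - 1.0
    return image[np.newaxis, :, :, np.newaxis]


# Synthetic grayscale image with pixel values in 0..255.
rng = np.random.default_rng(0)
fake = rng.integers(0, 256, size=(IMG_HEIGHT, IMG_WIDTH), dtype=np.uint8)

sample = to_model_input(fake)
print(sample.shape, sample.dtype, sample.min(), sample.max())
```

If the printed shape is not (1, height, width, 1) or the dtype is not float32, the generator output is worth a second look.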

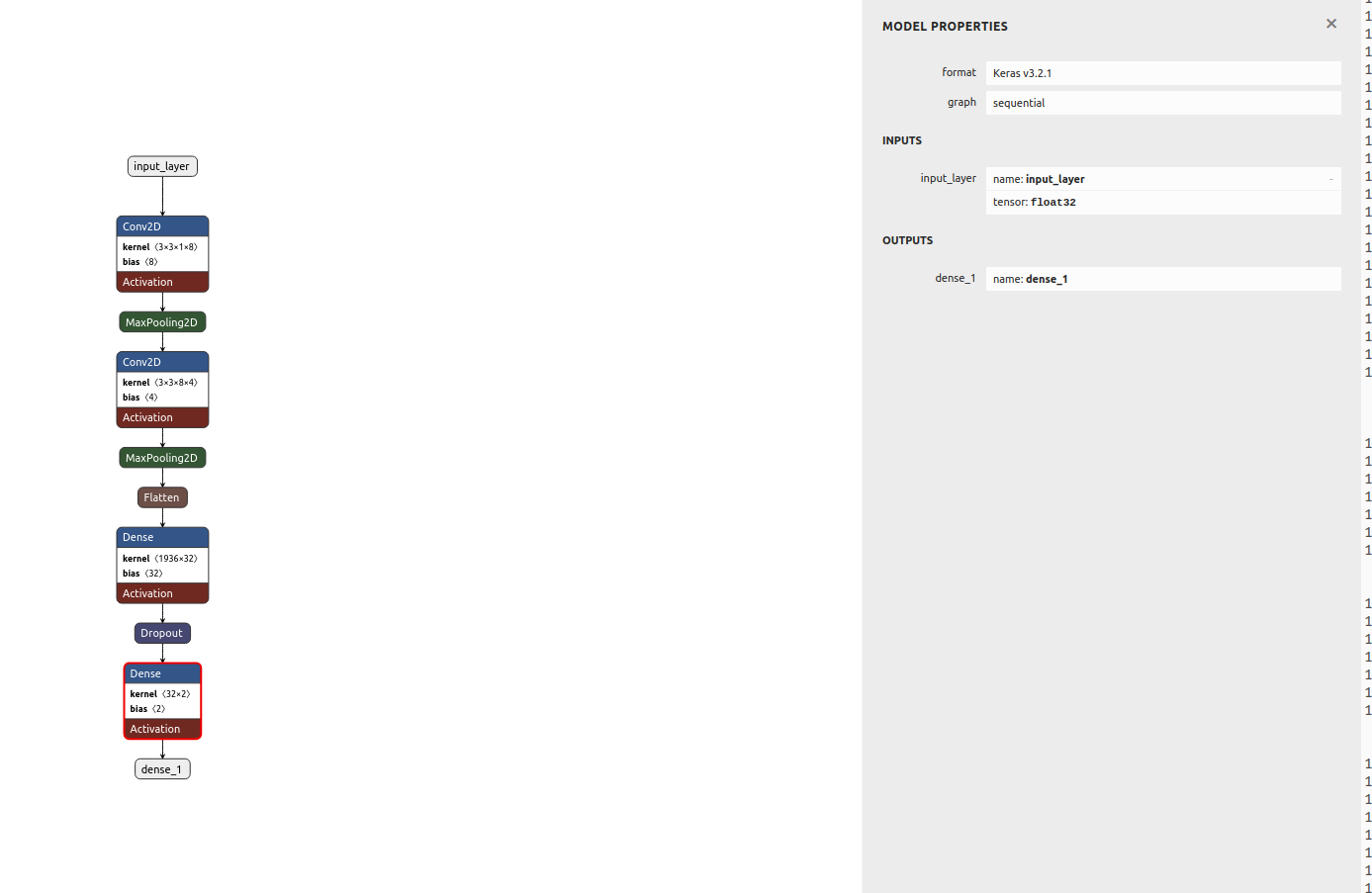

Let's look at the architecture of both models using Netron. First, the basic model:

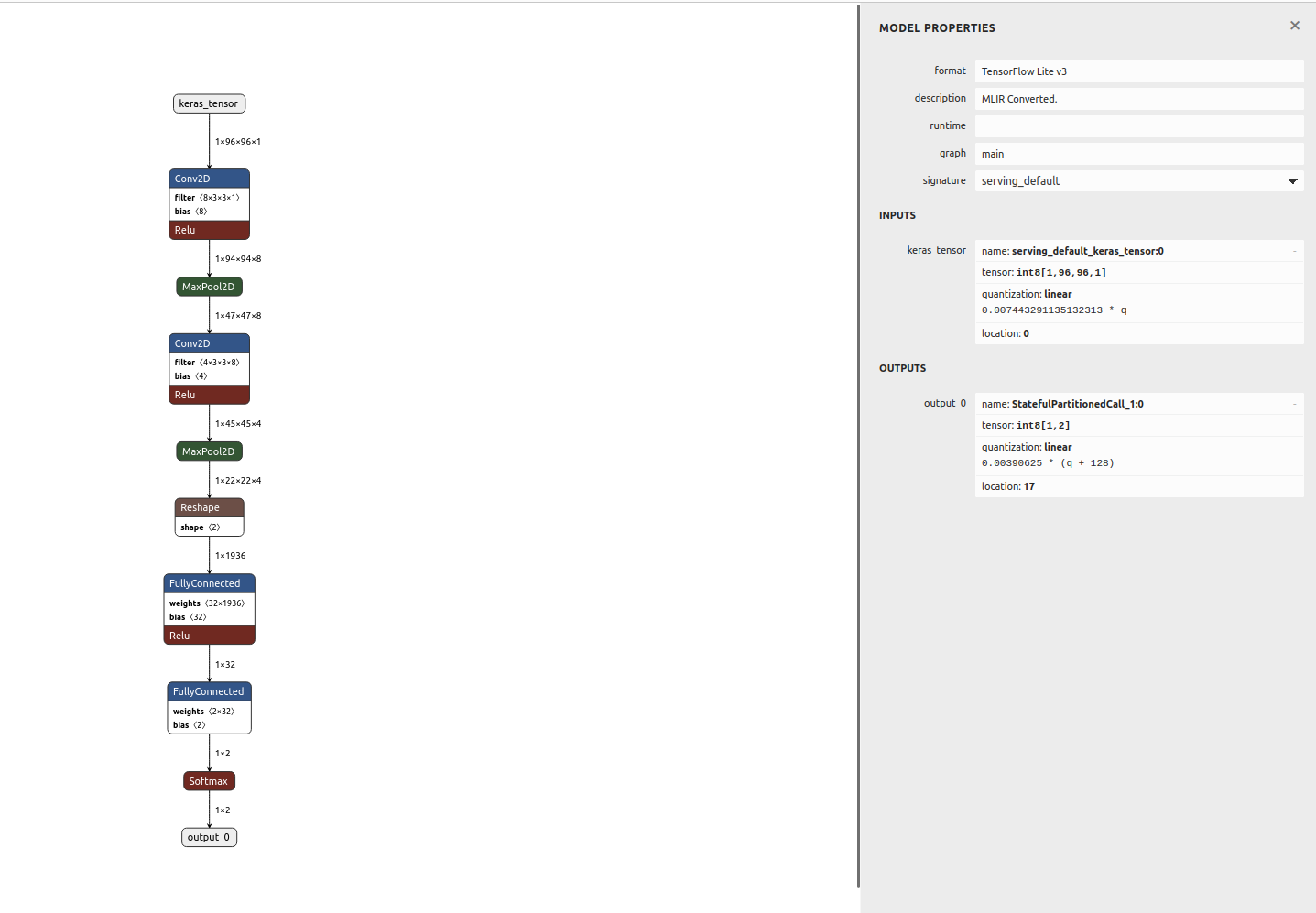

and the quantized one:

I wanted to make sure that the conversion went smoothly and that the model still works before deploying anything to the microcontroller. To check, I make predictions with both models: the initial one and the converted, quantized one. Using the TensorFlow Lite model is slightly more complex, as you can see in the snippet below. Additionally, we need to remember to quantize the input image with the scale and zero point values retrieved from the model.

    import numpy as np

    # Load the TFLite model and allocate tensors.
    # (To load from disk instead: tf.lite.Interpreter(model_path=tflite_file_path))
    interpreter = tf.lite.Interpreter(model_content=tflite_model)
    interpreter.allocate_tensors()

    # Get input quantization parameters.
    input_details = interpreter.get_input_details()
    input_quant = input_details[0]['quantization_parameters']
    input_scale = input_quant['scales'][0]
    input_zero_point = input_quant['zero_points'][0]

    # Quantize the input image: round, clip to the int8 range, then cast.
    input_value = np.round(test_image / input_scale) + input_zero_point
    input_value = np.clip(input_value, -128, 127).astype(np.int8)
    interpreter.set_tensor(input_details[0]['index'], input_value)

    # Run the inference.
    interpreter.invoke()
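
After invoke(), the prediction still has to be read back, and with an int8 output it arrives quantized too. The sketch below shows one way this might look. The raw scores, the number of classes, the scale and the zero point are all made-up illustrative values; in a real run they would come from interpreter.get_output_details() and interpreter.get_tensor(...), as noted in the comments.

```python
def dequantize_output(raw_scores, scale, zero_point):
    """Convert int8 output codes back to approximate float scores."""
    return [(q - zero_point) * scale for q in raw_scores]


def predict_class(raw_scores):
    """Index of the highest score; works on raw int8 codes as well."""
    return max(range(len(raw_scores)), key=lambda i: raw_scores[i])


# In a real run (assumption, not executed here):
#   output_details = interpreter.get_output_details()
#   raw = interpreter.get_tensor(output_details[0]['index'])[0]
#   out_quant = output_details[0]['quantization_parameters']
#   scale, zero_point = out_quant['scales'][0], out_quant['zero_points'][0]
raw = [-128, 90, -90]            # hypothetical int8 output for 3 classes
scale, zero_point = 1 / 256, -128  # illustrative values only

scores = dequantize_output(raw, scale, zero_point)
print(predict_class(raw), scores)
```

Because dequantization with a positive scale preserves ordering, the predicted class can be taken straight from the int8 values; the float scores are only needed if you want something that reads like a probability.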

Results of comparison:

Test accuracy model: 0.9607046246528625

Test accuracy quant: 0.9363143631436315

Basic model is 782477 bytes

Quantized model is 66752 bytes
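
To put these numbers in perspective, here is a small calculation using only the four values reported above.

```python
basic_size = 782477   # bytes, basic model
quant_size = 66752    # bytes, quantized model
basic_acc = 0.9607046246528625
quant_acc = 0.9363143631436315

ratio = basic_size / quant_size        # how many times smaller
saved = 1 - quant_size / basic_size    # fraction of bytes saved
drop = basic_acc - quant_acc           # absolute accuracy difference

print(f"Quantized model is {ratio:.1f}x smaller ({saved:.1%} saved)")
print(f"Accuracy drop: {drop * 100:.2f} percentage points")
```

So the quantized model is roughly 11.7 times smaller, at a cost of about 2.4 percentage points of test accuracy.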

The last thing left to do is converting the model to a C file. On Linux we can simply use the xxd tool:

    import subprocess

    def convert_to_c_array():
        """Converts the TFLite model to a C array using xxd."""
        with open(c_array_model_fname, "w") as out:
            subprocess.run(["xxd", "-i", tflite_file_path], stdout=out, check=True)
        print("C array model created " + c_array_model_fname)
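
If xxd is not available (on Windows, for example), the same kind of file can be produced in pure Python. The sketch below is my approximation of the `xxd -i` output style, not a byte-for-byte clone; `bytes_to_c_array` is a hypothetical helper, and the four bytes stand in for the real model content.

```python
import re


def bytes_to_c_array(data, var_name, per_line=12):
    """Format bytes as a C array declaration in the style of `xxd -i`."""
    # Like xxd, replace characters that are not valid in a C identifier.
    name = re.sub(r"\W", "_", var_name)
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk))
    body = ",\n".join(lines)
    return (f"unsigned char {name}[] = {{\n{body}\n}};\n"
            f"unsigned int {name}_len = {len(data)};\n")


# Stand-in for the bytes returned by converter.convert().
c_source = bytes_to_c_array(b"TFL3", "model.tflite")
print(c_source)
```

For the real model you would pass the converted model bytes and write the returned string to your .c or .h file.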

Et voila!

kasik
