Phase 1: Requirements Analysis and Initial Planning

The vision system development began with a comprehensive analysis of the project requirements. These requirements included high-precision tracking of patient movements and real-time feedback capabilities. An initial project plan was drafted, outlining the major milestones and timelines for the vision system development.

Phase 2: Camera Selection and Calibration

The next step involved selecting a suitable camera for the vision system. We used the laptop's built-in camera because it was the only one available to us. Key considerations included frame rate, field of view, and compatibility with our software. The camera then went through a calibration process to ensure accurate measurement and alignment. Calibration used a series of known reference points to estimate the camera parameters needed for precise tracking and measurement.

Camera Calibration Definition

Camera calibration is the process of estimating the internal (intrinsic) and external (extrinsic) parameters of a camera. The internal parameters are:

• fx and fy, the focal length multiplied by the pixel densities mx and my along the x and y axes, respectively (that is, the focal length expressed in pixels)

• Ox and Oy, the coordinates of the image center (the principal point)

And the external parameters are:

• Rotation matrix R, defining the orientation of the camera in the world frame

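• Translation vector T, defining the position of the camera in the world frame

Once we have the camera parameters, we can establish a transformation from 3D world coordinates to 2D image coordinates. In the reverse direction, a 2D image point only maps back to a ray in 3D, so recovering a full 3D position needs additional information such as the known geometry of the object.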
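As a rough illustration of the 3D-to-2D transformation, the sketch below projects one world point to pixel coordinates. It assumes an ideal pinhole camera without lens distortion, and the convention in which R and T map world coordinates into the camera frame (the convention OpenCV uses). The function name `project_point` and all numeric values are made up for the example.

```python
def project_point(point_world, R, T, fx, fy, ox, oy):
    """Project a 3D world point to pixel coordinates (pinhole model, no distortion)."""
    # Extrinsic step: world frame -> camera frame, X_c = R * X_w + T
    xc, yc, zc = (
        sum(R[i][j] * point_world[j] for j in range(3)) + T[i]
        for i in range(3)
    )
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsic step: perspective divide, scale by focal length, shift by image center
    u = fx * xc / zc + ox
    v = fy * yc / zc + oy
    return u, v


# Illustrative values only, not the calibration result of this project
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
u, v = project_point([0.1, -0.05, 2.0], identity, [0.0, 0.0, 0.0],
                     fx=800.0, fy=800.0, ox=320.0, oy=240.0)
print(u, v)
```

With an identity rotation and zero translation the camera frame coincides with the world frame, so the result depends only on the internal parameters.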

To calibrate the camera, we need an object of known dimensions, for instance a calibration pattern such as a chessboard.

The steps are as follows:

- Take multiple images of the chessboard from different angles and positions. The pattern should be flat.

- Detect the corners or dots in the calibration pattern in each image. For a chessboard, this involves finding the intersection points of the black and white squares.

- Using the known geometry of the calibration pattern and the detected key points, an initial estimate of the intrinsic parameters can be made using the Direct Linear Transformation method (DLT).

- For each calibration image, compute the extrinsic parameters (R and T) that relate the 3D coordinates of the calibration pattern to the 2D image coordinates.

- Estimate the nonlinear distortion effects, such as the radial and tangential distortion coefficients, for better calibration accuracy.

Phase 3: Marker Tracking System Development

After calibrating the camera, i.e. obtaining its internal and external parameters, we can design an application that estimates the orientation of the chessboard in a live video stream and plots the readings in real time.

The program is divided into two parallel threads using Python's threading library. The first thread captures the live video stream using the OpenCV library.

For each frame, the camera parameters are passed to the cv2.solvePnP method to get the pose of the board. The PnP (Perspective-n-Point) method determines the rotation and translation of the board relative to the camera from the 3D coordinates of n points on the chessboard and their detected 2D positions in the image. From the resulting rotation we then obtain the orientation.

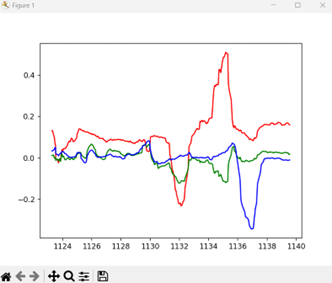

On the other thread, a function that plots the 3 angles of the orientation (roll, pitch, yaw) in real time is implemented.
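The two-thread layout can be sketched with the standard library alone. This is a simplified outline under assumptions, not the project's code: `read_angles` stands in for "grab a frame and run the pose estimation", `handle_sample` stands in for the plotting function, and a bounded queue passes samples between the threads. All three names are hypothetical.

```python
import queue
import threading
import time


def capture_loop(read_angles, angle_queue, stop_event):
    """Producer thread: read one (roll, pitch, yaw) sample per frame and queue it."""
    while not stop_event.is_set():
        sample = read_angles()
        if sample is None:
            continue  # e.g. the board was not detected in this frame
        try:
            angle_queue.put_nowait(sample)
        except queue.Full:
            pass  # drop the sample rather than block the capture loop


def plot_loop(angle_queue, stop_event, handle_sample):
    """Consumer thread: pass each queued sample to the plotting callback."""
    while not stop_event.is_set() or not angle_queue.empty():
        try:
            sample = angle_queue.get(timeout=0.1)
        except queue.Empty:
            continue
        handle_sample(sample)


def run(read_angles, handle_sample, duration_s=1.0):
    """Start both threads, let them run for duration_s seconds, then stop them."""
    angle_queue = queue.Queue(maxsize=64)
    stop_event = threading.Event()
    threads = [
        threading.Thread(target=capture_loop,
                         args=(read_angles, angle_queue, stop_event)),
        threading.Thread(target=plot_loop,
                         args=(angle_queue, stop_event, handle_sample)),
    ]
    for thread in threads:
        thread.start()
    time.sleep(duration_s)
    stop_event.set()
    for thread in threads:
        thread.join()
```

Dropping samples when the queue is full keeps the capture thread from stalling if plotting falls behind, at the cost of gaps in the plot. Many plotting toolkits expect to run in the main thread, so in practice the plotting side may need to live there while only the capture runs in a worker thread.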

Phase 4: Real-Time Processing and Feedback Mechanism

Real-time data processing capabilities were developed to give immediate feedback during therapy sessions. The vision system software was optimized to process data in real time, and a feedback mechanism was implemented. This phase also involved fine-tuning the algorithms to minimize latency and ensure smooth integration into the game.

Ivan Hernandez
