My objective is to synthesize textures for virtual/augmented reality assets with artificial neural networks such as Deep Dream, because it's cool.

At DEFCON I was showing off AVALON, the anonymous virtual/augmented reality local network, in the form of a VR dead drop:

When connected to the WiFi hotspot, users can explore a portfolio of my work and research in a virtual reality gallery setting. On connecting, they are automatically redirected to the homepage, which is the 2D front end. I will soon record a video showing how this connects to the 3D virtual reality back end.

The AVALON website and the VR back end will go online for demo purposes in the next couple of weeks, once I fix some bugs.

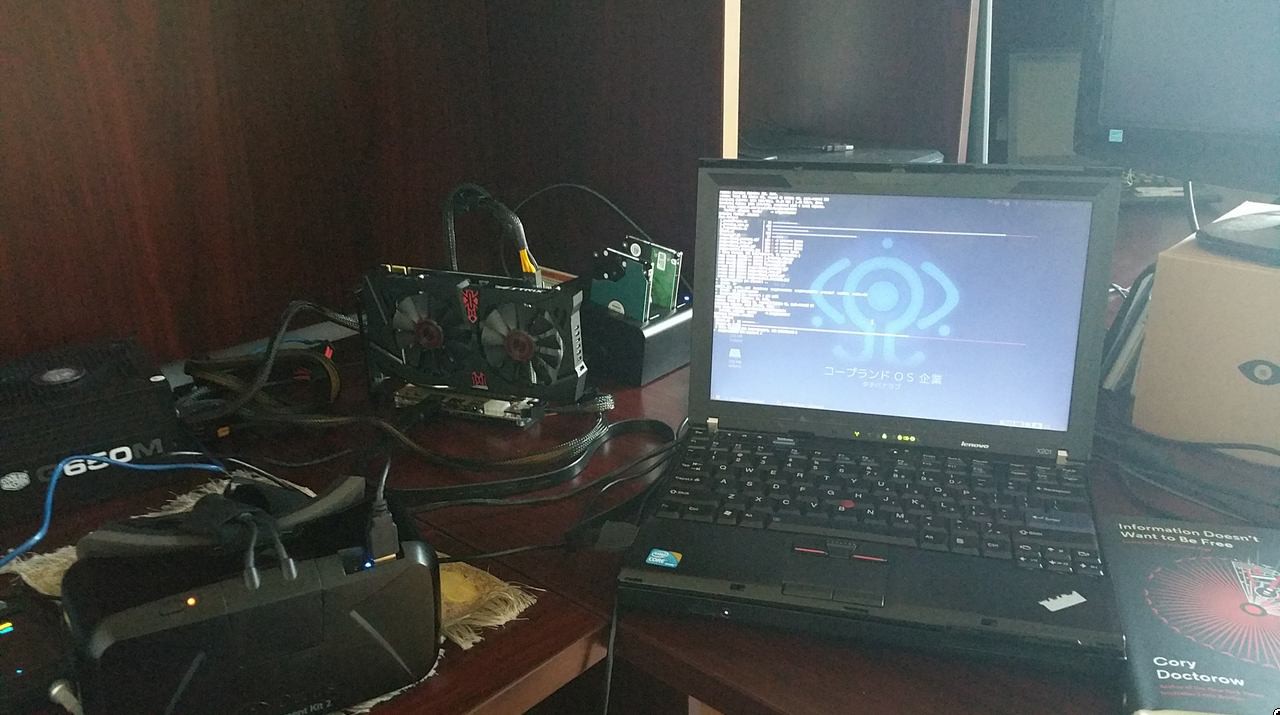

I have made a substantial amount of progress in the past month that I need to start sharing. First, I'd like to continue the previous log's experiments with Deep Dream. One day I found $180 in my pocket after doing laundry, exactly the amount I needed for a graphics card that gives a 20x boost in artificial neural network performance. A few hours later, I had a GTX 960 hooked up as an external GPU to my Thinkpad X201, along with a fresh install of Ubuntu 14.04 configured with the drivers, Deep Dream, and the Oculus Rift VR software. Here's a pic of the beast running the DK2 at 75 FPS alongside Deep Dream. I have a video to go along with it as well: Thinkpad DeepDream/VR

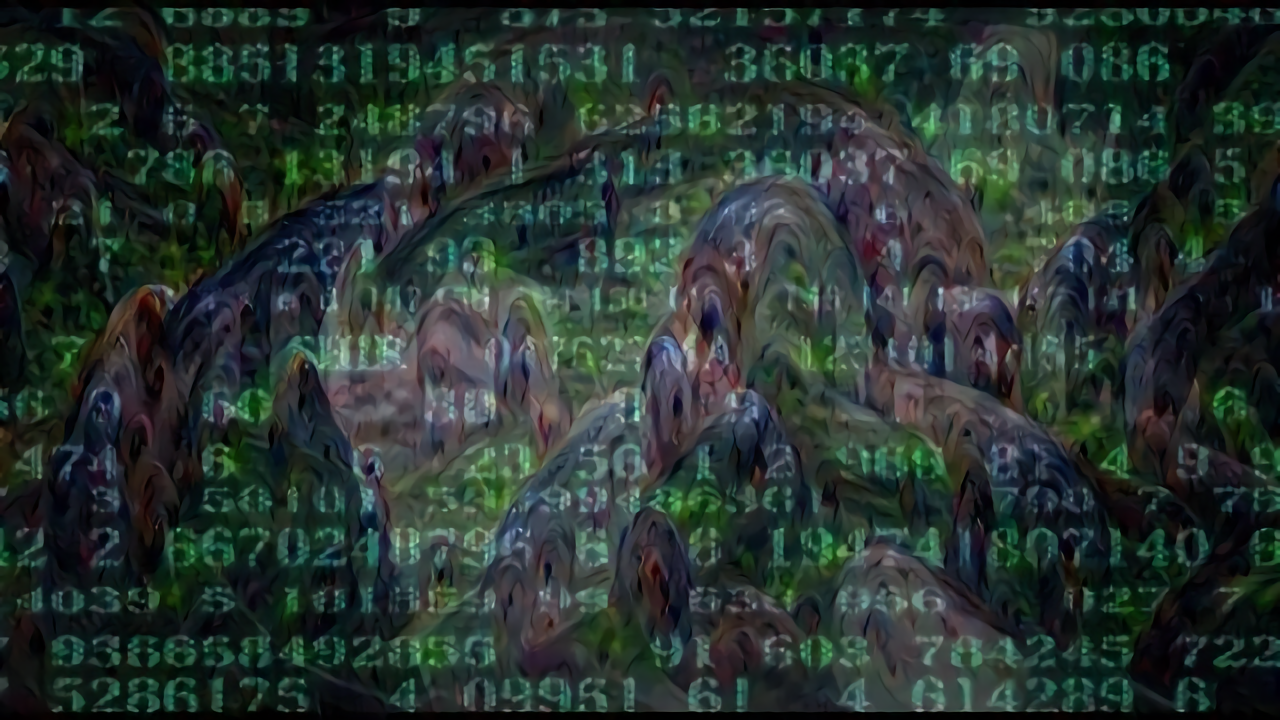

I've been doing some Deep Dream experiments with DeepDreamVideo.

The most interesting results have come from playing with the blend flag, which uses the previous frame as a guide and accepts a floating-point value for how much blending should occur between frames. Everything I created below was made from the Linux command line.
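To make the blend value concrete, here is a minimal sketch of what a linear blend does to a single 8-bit pixel channel. It assumes the blend is a plain linear interpolation between the incoming raw frame and the previous dreamed frame (the same formula PIL's `Image.blend` uses); I have not verified that DeepDreamVideo applies it in exactly this order. `blend_channel` is a hypothetical helper for illustration only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: linearly blend one 8-bit channel value.
# Assumption: out = current * (1 - b) + previous * b, where b is the blend
# amount (0.0 = raw frame only, 1.0 = previous dreamed frame only).
blend_channel() {
  local previous="$1" current="$2" b="$3"
  awk -v p="$previous" -v c="$current" -v b="$b" \
    'BEGIN { printf "%.0f\n", c * (1 - b) + p * b }'
}

# With b = 0.3, 30% of each output value comes from the previous dreamed frame.
blend_channel 200 100 0.3   # prints 130
```

A small blend like 0.3 keeps each frame mostly its own while letting the previous dream bleed through, which is what smooths the hallucinations from frame to frame.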

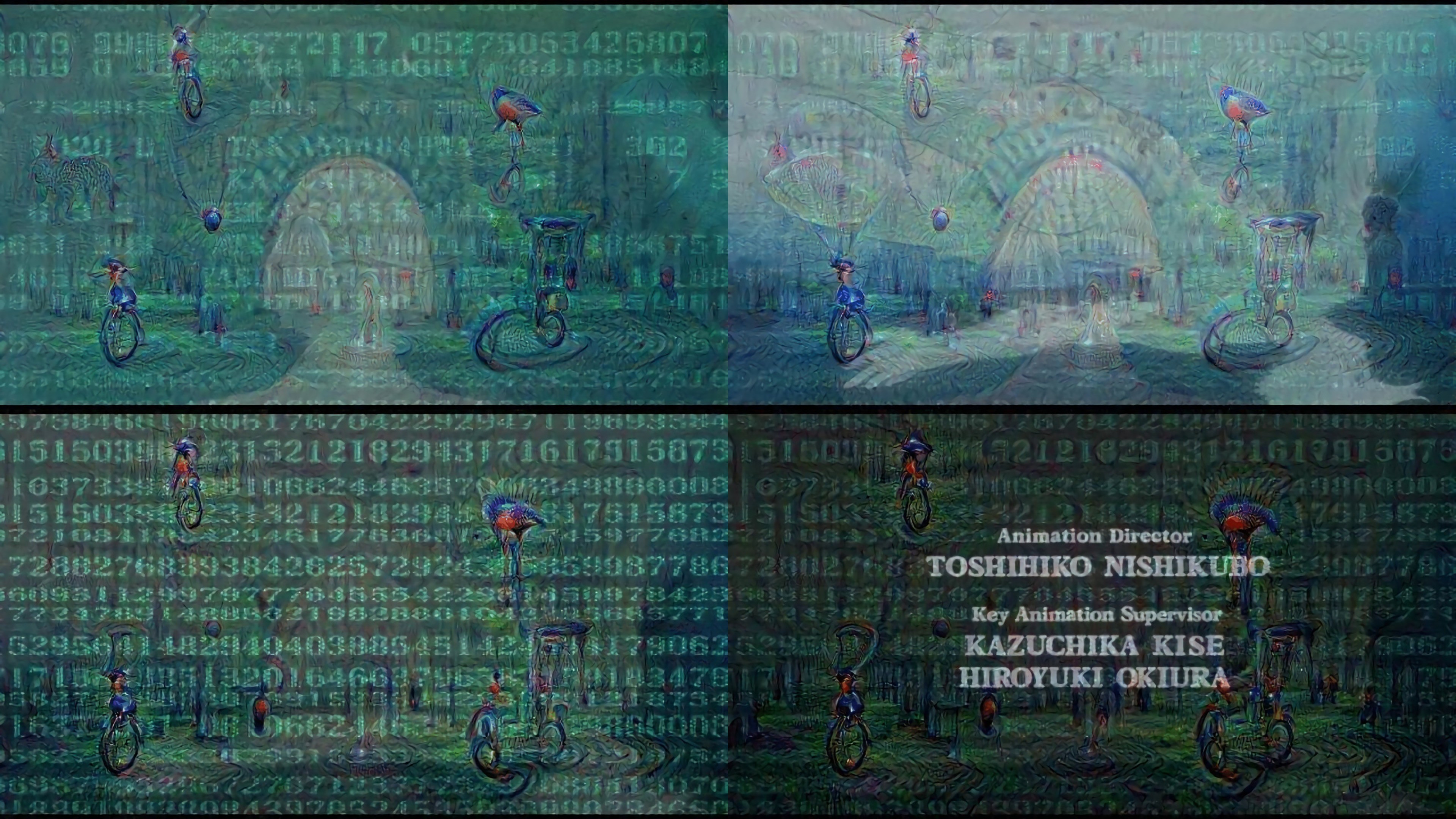

The video I used was the original 1995 Ghost in the Shell intro. I used FFmpeg to extract one frame every X seconds from the video to create test strips, and when I tested them I got some unexpectedly pleasing results! In this test, I extracted a frame every 10 seconds.
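A minimal sketch of the frame-extraction step, assuming a stock FFmpeg build. The function names and the filenames in the usage note are hypothetical. FFmpeg's `fps` video filter accepts a fractional rate, so `fps=1/10` keeps one frame every 10 seconds.

```bash
#!/usr/bin/env bash
# Build the video filter string that keeps one frame every N seconds.
frame_filter() {
  printf 'fps=1/%s\n' "$1"
}

# Extract one frame every N seconds from INPUT into OUTDIR as numbered PNGs.
# Usage: extract_frames INPUT SECONDS OUTDIR
extract_frames() {
  local input="$1" every="$2" outdir="$3"
  mkdir -p "$outdir"
  ffmpeg -hide_banner -loglevel error -i "$input" \
    -vf "$(frame_filter "$every")" "$outdir/frame_%04d.png"
}
```

For example, `extract_frames gits_intro.mp4 10 strip` (hypothetical names) writes `strip/frame_0001.png`, `strip/frame_0002.png`, and so on, ready to be fed to the dreaming step.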

You can see the difference from the raw footage to the dream drip:

I used a blend of 0.3 and the inception_3b/3x3 layer for this sequence. I really like how it came out.

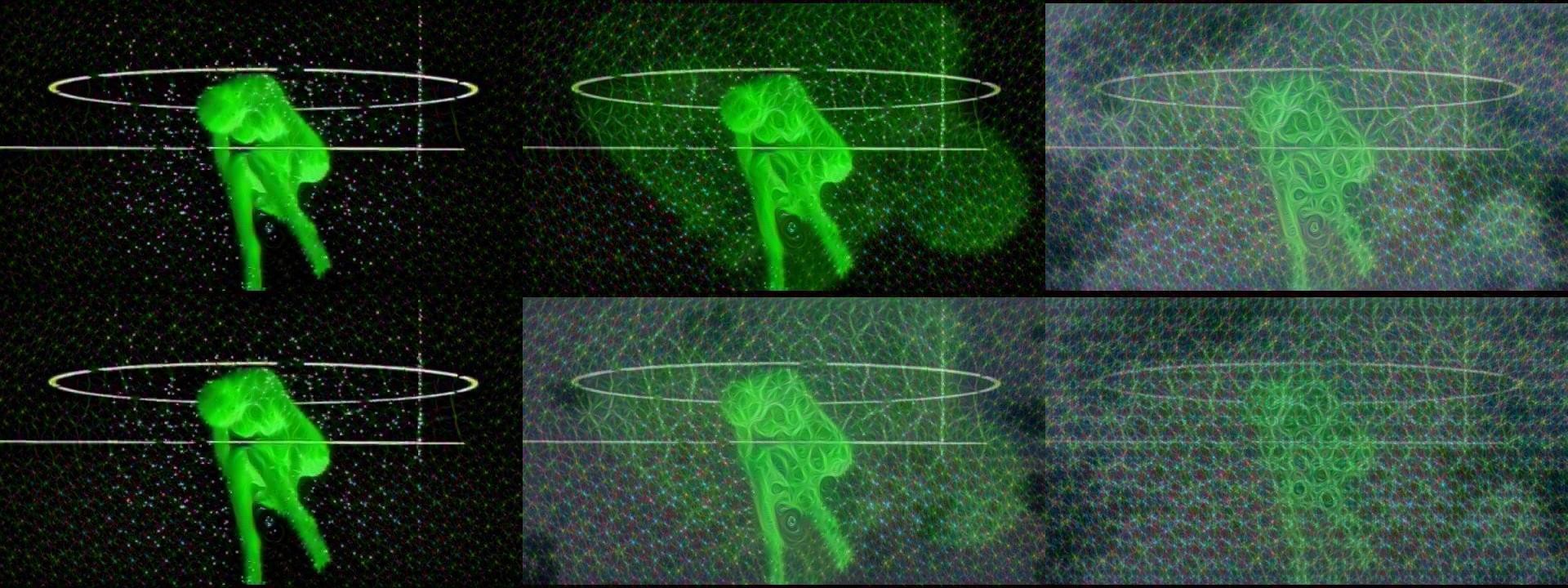

I'm beginning to experiment with textures for 3D models within a virtual reality world. I'd like to texture objects with cymatic skins that react to music. For now, I have created a simple fading GIF to test out the possibilities. The room I plan to experiment in is the Black Sun:

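```bash
# Drain all the colors and create the fading effect:
# saturation 0 (grayscale), brightness stepping from 100% up to 900%
for i in {10,20,30,40,50,60,70,80,90}; do
  convert out-20.png -modulate "${i}0,0" "fade-$i.png"
done

# Assemble the faded frames into one GIF (fade-10.png ... fade-90.png sort in order)
convert fade-*.png faded.gif

# Loop the gif like a yo-yo or patrol guard
convert faded.gif -coalesce -duplicate 1,-2-1 -quiet -layers OptimizePlus -loop 0 patrol_fade.gif
```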

I think it would be cool if the textures could update every X hours or react semantically to voice control. The next time someone says "Pizza", the room would start dreaming about pizza, and the changes would show up in the textures of various objects.

Here is the finished Ghost in the Shell Deep Dream animation:

alusion