Overview and Goals

Hello, interested reader. I have benefited so much from other people's blog posts, both in my hobbies and my professional life, that I decided it was high time I contributed a little. I hope that someone finds it useful.

I've been interested in remotely operated vehicles for some time - projects like those on DIY Drones, OpenROV, and plenty of others are absolutely fascinating to me. I like the idea of being able to send a machine into inaccessible areas for science or exploration. I decided to focus on a network-driven rover at first, with the intent of learning what it takes to drive something over a network.

My intent is to document that process here. I've seen similar projects on the web, but I've never seen the design process documented: the tradeoffs, the software architecture, the naive assumptions that proved wrong. So that's what I'll try to do.

The idea was to send commands from a computer or tablet to an Android phone, which would interface with the very cool IOIO board. Android phones pack a lot of processing power and numerous sensors into a small, low-power package. That makes them a pretty attractive platform for a remotely operated vehicle (ROV) or robot.

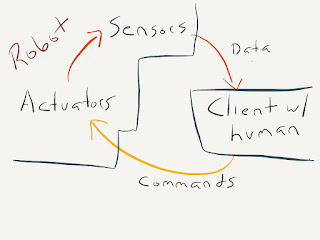

I wanted to be able to send back sensor data from the rover, such as video, voltage levels, accelerometer readings, GPS data, etc., and display it on a simple console. So on the network, the command traffic would look like this:

rover sends current sensor data to console

console sends back commands (turn on motors, etc)

repeat

Video would be handled on a separate connection.
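That exchange could be framed in many ways. As one minimal sketch, assuming a hypothetical line-oriented text format (the message types `SENSORS` and `CMD`, the field names, and the `RoverMessage` class are invented for illustration, not taken from the rover's actual code), each side can format and parse messages like this:

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical line protocol: "TYPE key=value key=value ..." (one message per line)
//   rover -> console:  "SENSORS wifi=4 volts=7.2"
//   console -> rover:  "CMD left=60 right=60"
final class RoverMessage {
    final String type;
    final Map<String, String> fields;

    RoverMessage(String type, Map<String, String> fields) {
        this.type = type;
        this.fields = fields;
    }

    // Serialize to a single line (the caller adds the newline, e.g. with println).
    String format() {
        StringBuilder sb = new StringBuilder(type);
        for (Map.Entry<String, String> e : fields.entrySet()) {
            sb.append(' ').append(e.getKey()).append('=').append(e.getValue());
        }
        return sb.toString();
    }

    // Parse one line; returns null for blank or malformed input so the
    // caller can skip it instead of crashing.
    static RoverMessage parse(String line) {
        if (line == null) return null;
        String[] parts = line.trim().split("\\s+");
        if (parts[0].isEmpty()) return null;
        Map<String, String> fields = new LinkedHashMap<>();
        for (int i = 1; i < parts.length; i++) {
            int eq = parts[i].indexOf('=');
            if (eq <= 0) return null; // missing key or '='
            fields.put(parts[i].substring(0, eq), parts[i].substring(eq + 1));
        }
        return new RoverMessage(parts[0], fields);
    }
}
```

Plain text lines have the nice property that you can still drive the server by hand from a Telnet client while debugging, and a parser that returns null on garbage lets the receiving loop simply ignore a bad line.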

This seems simple. If you've ever done socket and thread programming, it probably is. If you've never done that, or written a program for Android, it's not so simple, and it's an excellent learning experience. It's also a ton of fun: getting an LED to turn on in response to a command over the network for the first time is seriously cool.

Android Development and IOIO Basics

When I first started the project, I had never written an Android program before. I've built simple Atmel-AVR and Basic Stamp robots, and usually the first thing you do is make sure you can blink an LED from the microprocessor - it's "Hello, world" for micros. First, I needed to get the compiler up and running for Android, and understand something about how Android works.

I started with the excellent Android dev tutorials and worked through the first few programs. I then followed the tutorial at Sparkfun to get the IOIO sample projects working, and tinkered with them a bit. I found that the IOIO worked great on my Galaxy Nexus and Kyros 7127. My older Droid X2 needs the USB connection set to "charge only" to work. I plan on using the Droid for the rover. I am pretty impressed with the design of the development kit for Android, and extremely impressed with the IOIO. A great deal of thought has gone into making it easy to program for and interface things with.

I first experimented with the Hello IOIO application, which runs a GUI and a thread to talk to the IOIO. It worked fine, and is a great way to test the IOIO on your phone. The Hello application lets you turn an LED on the IOIO on and off from the phone.

The sample programs also include a sample service that runs in the background and communicates with the user only through status notifications. I decided this was the way to go for controlling a rover. Android apps have a life cycle that you must track to deal with incoming calls, screen rotation, and other events. Some of those events, such as screen rotation, actually tear down the activity and restart it, and your program has to cope with that. This is not ideal for a realtime control application.

The service makes it a little trickier to provide feedback and debug, but you can use the logging feature to get what you need. A robot or rover will be talking to the user over the network anyway, right? :-)

The next step was to decide how to handle the networking aspects, which proved to be very interesting. That will be the topic of the next section.

Software Design and Network Communication Approach

Once I had the IOIO working from the sample application, I started looking into how to control it across the network. Fairly early on, I needed to decide if the phone was going to act as the server, or the client. I figured there were advantages each way:

- If the phone was the server, it would be pretty easy to allow it to accept connections from a client anywhere on the internet. My home router could pass the connection through to the port the phone was listening on, and you'd be off and running.

- If the phone was the client, it would be easier to make work over the 3G/4G cell network. It would reach out using whatever network connection it had (cell, wifi, either one) and connect to a fixed server, which would control it. It would be difficult or impossible to use the cell network if the phone was running the server, since the carriers almost certainly have firewalls in place.

I eventually settled on option one: the phone would be the server. A client would connect to it, receive sensor data, and issue commands to the phone to drive the actuators on the rover.

Next came the client and the control protocol. I wanted something fast: sensor data and control commands should be sent promptly to give a smooth driving experience. That ruled out a simple CGI/webserver sort of arrangement. A web browser could act as the client only if something like Ajax were used to stream the commands. It would be slick to do that, since a client computer likely already has a web browser. But I decided against it on several grounds:

- I don't know anything about Ajax. Like, nothing at all.

- It would complicate writing the server

- I wanted something as fast as possible, and I figured I could write a tighter custom protocol that would require less parsing

So I settled on a simple socket server running on the phone, and tested it with a Telnet client on Linux. I figured a proper client program could come later.

With that decision made, I started looking at socket programming in Android and Java. I had never done sockets before, so it was a good opportunity to learn some client/server stuff. It became apparent that I would need to break my network communications off into another thread, so that waiting for input would not block the rest of the server. If you happen to be using a GUI, this is particularly important, since your GUI will stop responding during the blocking operation. If the GUI thread stays blocked for more than a few seconds, Android will put up an "Application Not Responding" dialog offering to close your app.

So I decided on three threads:

1) The "master" thread, based on the HelloIOIOService example code

2) The IOIO looper thread

3) The network communication thread.
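One way to hand data between these threads, sketched under my own assumptions (the `RoverState` class, its fields, and the stop-on-silence timeout are hypothetical, not the rover's actual code), is a small state object owned by the master thread. The network thread stores immutable command snapshots through an `AtomicReference`, so the IOIO looper never sees a half-updated command:

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical state shared by the network thread and the IOIO looper.
// Commands are immutable snapshots swapped atomically, so the looper never
// pairs a left speed from one command with a right speed from another.
final class RoverState {
    static final class Command {
        final int left;              // motor throttles, e.g. -100..100
        final int right;
        final long receivedAtMillis; // when the network thread stored it

        Command(int left, int right, long receivedAtMillis) {
            this.left = left;
            this.right = right;
            this.receivedAtMillis = receivedAtMillis;
        }
    }

    private final AtomicReference<Command> command =
            new AtomicReference<>(new Command(0, 0, 0L));
    private volatile int wifiBars; // written by the sensor side, read by the network thread

    // Network thread: store the newest command from the console.
    void setCommand(int left, int right) {
        command.set(new Command(left, right, System.currentTimeMillis()));
    }

    // IOIO looper: the command to apply right now. If nothing has arrived for
    // timeoutMillis (an assumed failsafe), return "stop" so the rover halts
    // and waits for the link to come back.
    Command effectiveCommand(long nowMillis, long timeoutMillis) {
        Command c = command.get();
        if (nowMillis - c.receivedAtMillis > timeoutMillis) {
            return new Command(0, 0, c.receivedAtMillis);
        }
        return c;
    }

    void setWifiBars(int bars) { wifiBars = bars; }

    int wifiBars() { return wifiBars; }
}
```

On each pass through the looper, something like `state.effectiveCommand(System.currentTimeMillis(), 1000)` would give the speeds to apply. The timeout is a design choice rather than a requirement, but it makes a ground rover stop on its own when the link drops.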

Threads in Java are actually pretty easy to spawn, and a thread defined as an inner class can access the fields and methods of its enclosing class. I am fairly new to Java, but it's not difficult using examples on StackOverflow and tutorial sites. It was fairly easy to spawn a thread, open a socket, listen on a port, and then exchange data with the master thread.

The tricky part was making it work reliably. I wanted a continuous stream of sensor data and commands flowing between the client and server, a few times a second. Several issues come up:

1) You need to properly handle a client politely disconnecting and wait for new connections.

2) You need to handle a client just vanishing, rudely, detect it, and set up for when the client comes back.

3) You need to update the master thread so it can tell the IOIO looper what to do.

4) You need to properly handle the network dropping out from under the whole mess.
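These cases map fairly directly onto `java.net` behavior: `readLine()` returns null when a client closes the connection politely, a read timeout set with `setSoTimeout()` catches a client that silently vanished, and any other `IOException` covers resets and network dropouts. A minimal sketch of a network thread built that way, assuming sensor output and command handling are passed in as callbacks (the `RoverServer` class and its parameters are illustrative, not the rover's actual code):

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;
import java.nio.charset.StandardCharsets;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Sketch of the rover's network thread: one client at a time, and every way
// a session can end leads back to accept() for the next connection.
final class RoverServer implements Runnable {
    private final ServerSocket server;
    private final int readTimeoutMillis;     // silence longer than this = vanished client
    private final Supplier<String> sensors;  // produces one sensor line per cycle
    private final Consumer<String> commands; // receives each command line (case 3)
    private volatile boolean running = true;

    RoverServer(ServerSocket server, int readTimeoutMillis,
                Supplier<String> sensors, Consumer<String> commands) {
        this.server = server;
        this.readTimeoutMillis = readTimeoutMillis;
        this.sensors = sensors;
        this.commands = commands;
    }

    @Override
    public void run() {
        while (running) {
            try (Socket client = server.accept()) {
                client.setSoTimeout(readTimeoutMillis);
                BufferedReader in = new BufferedReader(new InputStreamReader(
                        client.getInputStream(), StandardCharsets.UTF_8));
                PrintWriter out = new PrintWriter(new OutputStreamWriter(
                        client.getOutputStream(), StandardCharsets.UTF_8), true);
                while (running) {
                    out.println(sensors.get());  // rover -> console: sensor data
                    if (out.checkError()) break; // write failed: client is gone
                    String line = in.readLine(); // console -> rover: command
                    if (line == null) break;     // case 1: polite disconnect
                    commands.accept(line);
                }
            } catch (SocketTimeoutException e) {
                // Case 2: client went silent; assume it vanished and wait for it.
            } catch (IOException e) {
                // Case 4: reset or network dropout (or stop() closed the socket).
                if (running) pause(500); // avoid a tight loop if accept() keeps failing
            }
        }
    }

    // Closing the ServerSocket unblocks a pending accept() so run() can exit.
    void stop() throws IOException {
        running = false;
        server.close();
    }

    private static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

Because every exit path falls back to `accept()`, a console that reconnects after a WiFi dropout is picked up without restarting anything. The read timeout should be comfortably longer than the console's normal send interval, or a slow but healthy client will be dropped.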

The first two proved challenging. I ran into a problem where the client could connect, and begin bouncing data back and forth, and run for several minutes. It would then crash. I eventually figured out that the Droid X2 has a weird problem - when running version 2.3.5, it will disconnect from my WiFi every 5 minutes or so, for about 5 seconds. I flashed it with a new 2.3.5 ROM called Eclipse, which is very nice. The problem persists, however. I'm convinced that it's an issue in the 2.3.5 kernel, which I can't change because of the Droid's encrypted boot loader. Thanks, Motorola.

On reflection, though, I realized this was actually a great way to make sure my code was robust - both the client and server needed to detect when the wireless connection dropped out and recover, gracefully and quickly. That's working fairly well now - both ends usually detect it and they reconnect after a few seconds. This would be a real problem for an aerial or underwater vehicle, but a ground rover can just stop and wait for the connection to come back up. Either way, it's excellent practice for coding on the unreliable internet.

Communications and Control

I've built some simple robots, mostly of the "drive around and use IR or ultrasound to not run into things" variety. Once I had a basic back-and-forth network socket program working, it was time to think about overall program flow.

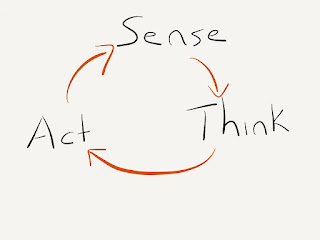

A traditional robotics paradigm is the "Sense, Think, Act" cycle. A robot takes input from its sensors, processes it to try to identify the best course of action, and then commands its actuators to do something. The process then repeats.

At the moment, I'm not building a robot in the typical sense. That's because a human is in the loop, making the decisions based on sensor input. I wanted to make sure that the platform could be used as a robot, just by changing the software on the phone, but right now I'm interested in building a reasonably robust remotely operated vehicle. I'll continue to use "robot" because it's convenient. :-)

On reflection, I decided that a remotely operated vehicle can follow the same sense-think-act cycle. The primary difference is that the thinking is done off-vehicle, by the human operator.

With that understanding, I started thinking about how the communications would work. I intend to use the excellent IP Webcam app to stream the video from the phone. It works great, is robust, can run as a service, and can auto-start when the phone boots up.

The rest of the software will run in a service based on the IOIO service example described earlier. Thus, the video stream will be separate from the command and sensor stream.

I've run a test with the IOIO service and IPCam, and found that streaming video and sending sensor/command data back and forth at the same time works fine. I just used a browser for the video stream, and my little Java program for the sensor/command data.

I did find that neither the browser nor VLC will attempt to reconnect to the video server on the phone if the connection drops. I may integrate a simple MJPEG viewer into the Java client so that it reconnects automatically, as the command/sensor connection does. Doing so would also be a good step towards allowing control from a phone or tablet, rather than a laptop.

Dirt Simple Video Streaming

For the first implementation of the video stream, I just used the most excellent IP Webcam application for Android.

While experimenting with the IP Webcam video server for Android, I found that the standard web browser and programs like VLC did not tolerate WiFi disconnects. Each dropout required manual intervention to reconnect, so I started looking at what it would take to make a very simple viewer in a dedicated client program.

I found that even a very crude Java client could pull individual images from the phone's IP Webcam application at 320x240 fast enough for acceptable video. Good video streaming is a complex subject in itself, but I was surprised that this produced functional results.
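The reconnect behavior the browser and VLC lacked is mostly a matter of never giving up. A minimal sketch of such a polling grabber, assuming the camera app exposes a URL that returns one JPEG per request (the URL is left to the caller, and `FramePoller` is a hypothetical name):

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.util.function.Consumer;

// Sketch of a crude but persistent frame grabber: fetch one JPEG per request,
// hand it to a display callback, and on any error wait and try again.
final class FramePoller implements Runnable {
    // Where frames come from; separated out so it can be faked in tests.
    interface FrameSource {
        byte[] fetch() throws IOException;
    }

    private final FrameSource source;
    private final Consumer<byte[]> display;
    private final long retryDelayMillis;
    private volatile boolean running = true;
    private volatile int failures; // only the polling thread writes this

    FramePoller(FrameSource source, Consumer<byte[]> display, long retryDelayMillis) {
        this.source = source;
        this.display = display;
        this.retryDelayMillis = retryDelayMillis;
    }

    // One fetch attempt; returns true if a frame was delivered.
    boolean pollOnce() {
        try {
            display.accept(source.fetch());
            failures = 0;
            return true;
        } catch (IOException e) {
            failures++;
            return false;
        }
    }

    @Override
    public void run() {
        while (running) {
            if (!pollOnce()) {
                try {
                    Thread.sleep(retryDelayMillis); // back off briefly, then reconnect
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
            }
        }
    }

    void stop() { running = false; }

    int consecutiveFailures() { return failures; }

    // HTTP source: one GET per frame, with timeouts so a dead link fails fast.
    static FrameSource http(String frameUrl, int timeoutMillis) {
        return () -> {
            HttpURLConnection con =
                    (HttpURLConnection) URI.create(frameUrl).toURL().openConnection();
            con.setConnectTimeout(timeoutMillis);
            con.setReadTimeout(timeoutMillis);
            try {
                int code = con.getResponseCode();
                if (code != HttpURLConnection.HTTP_OK) throw new IOException("HTTP " + code);
                try (InputStream is = con.getInputStream()) {
                    return is.readAllBytes();
                }
            } finally {
                con.disconnect();
            }
        };
    }
}
```

On a desktop client the bytes could be decoded with `ImageIO.read(new ByteArrayInputStream(frame))` and painted from the Swing event thread, with `run()` on its own background thread. The consecutive-failure count is handy for a "video lost" indicator.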

WiFi Signal Strength Indicator

I decided it would be nice to be able to tell if you were about to drive past the edge of your WiFi range, so I looked at how signal strength can be measured.

Earlier, I had used the WifiManager to acquire a WiFi lock so that the phone would not throttle back the WiFi. The phone quite reasonably does this to save power, but you don't want that to happen if you are using the wireless link to drive around, so the application requests a lock at startup and releases it on shutdown.

Once you have a WifiManager, it's easy to ask it for the current state of the link, and it will return a bunch of information, including the signal strength (RSSI) in dBm. If you want it to report "bars", it has a function (calculateSignalLevel) to compute how many bars you are getting on whatever scale you prefer. Since I want a simple color-coded indicator on the client control panel, I just went for a 0-5 bar scale.
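On the rover, the values come from `WifiManager.getConnectionInfo().getRssi()` (in dBm) and the static `WifiManager.calculateSignalLevel(rssi, numLevels)`, which returns a level from 0 to numLevels - 1, so passing 6 gives the 0-5 scale. Those calls need a device, so the sketch below only reproduces the idea in plain Java: a linear RSSI-to-bars mapping using thresholds I picked for illustration (they are not guaranteed to match Android's internal constants), plus one possible color scheme for the client panel. `WifiIndicator` and its cutoffs are hypothetical.

```java
// Hypothetical helpers for the WiFi indicator: the arithmetic behind "bars"
// and a client-side color choice. Thresholds are illustrative assumptions.
final class WifiIndicator {
    static final int WEAKEST_DBM = -100;  // at or below this: 0 bars
    static final int STRONGEST_DBM = -55; // at or above this: full bars

    // Linear RSSI-to-bars mapping onto 0..(levels - 1).
    static int bars(int rssiDbm, int levels) {
        if (rssiDbm <= WEAKEST_DBM) return 0;
        if (rssiDbm >= STRONGEST_DBM) return levels - 1;
        return (rssiDbm - WEAKEST_DBM) * (levels - 1) / (STRONGEST_DBM - WEAKEST_DBM);
    }

    // Traffic-light color for a 0-5 bar reading; the cutoffs are a judgment call.
    static String color(int bars) {
        if (bars >= 4) return "green";
        if (bars >= 2) return "yellow";
        return "red";
    }
}
```

For example, -70 dBm on a 6-level scale gives 30 * 5 / 45 = 3 bars (integer division), which this scheme shows as yellow.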

This is the first sensor value to be returned from the rover.

PWM Throttle

In implementing the PWM throttle to vary the speeds of the motors, I ran into an interesting problem. I changed the relevant digital IO pins to PWM outputs, set the duty cycle in each function that controls the motors, and.... nothing. Nothing at all. Yeah...

Some troubleshooting led me to the chart at https://github.com/ytai/ioio/wiki/Getting-To-Know-The-Board that shows which pins can be used as PWM outputs. I had made a basic error: I connected one of my 4 motor driver pins to pin 8, which is not PWM-capable. The interesting part was that it didn't just disable the motor controlled by pin 8. By trying to set that pin up as a PWM output in my program, I disabled ALL PWM functionality.

As soon as I commented out the references to pin 8, the other motors spun up just fine and were nicely speed controlled. The fix was easy.

I realized that changing the motor controller input to a pin capable of PWM was as simple as flipping the connector that plugs into the motor board and then remapping it in software. It didn't even require lighting up the soldering iron. :-)

The throttle control works great at 1000 Hz PWM, and gives reasonably fine control of the rover's speed.
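As I understand the IOIOLib API, the rover side amounts to `ioio.openPwmOutput(pin, 1000)` once per motor pin and `setDutyCycle(float)` on the returned `PwmOutput`. The plain-Java sketch below covers the surrounding arithmetic and adds an up-front pin check, so a non-PWM pin fails loudly instead of quietly disabling PWM. `Throttle` and its methods are hypothetical, and the set of PWM-capable pins is left for you to fill in from the pin chart.

```java
import java.util.Set;

// Hypothetical throttle helpers. The IOIO calls would be along the lines of:
//   PwmOutput pwm = ioio.openPwmOutput(pin, Throttle.PWM_FREQ_HZ);
//   pwm.setDutyCycle(Throttle.dutyCycle(percent));
final class Throttle {
    static final int PWM_FREQ_HZ = 1000; // the frequency used on the rover

    // Map a throttle percentage to a duty cycle in [0, 1], clamping bad input.
    static float dutyCycle(int throttlePercent) {
        int clamped = Math.max(0, Math.min(100, throttlePercent));
        return clamped / 100.0f;
    }

    // Length of the "on" part of each PWM period, in microseconds.
    // At 1000 Hz the period is 1000 us, so a 0.25 duty cycle is 250 us on.
    static int highTimeMicros(float dutyCycle, int freqHz) {
        return Math.round(dutyCycle * 1_000_000f / freqHz);
    }

    // Refuse non-PWM pins up front. pwmCapable should come from the IOIO
    // pin chart; no specific pin numbers are assumed here.
    static void requirePwmCapable(int pin, Set<Integer> pwmCapable) {
        if (!pwmCapable.contains(pin)) {
            throw new IllegalArgumentException("pin " + pin + " is not PWM-capable");
        }
    }
}
```

Running `requirePwmCapable` for each motor pin before opening any outputs would have pointed straight at pin 8.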

Android Client Video Streaming

After a brief hiatus for summer in Ohio, some travel, and a whole lot of work, I've returned to the rover project and decided to write an Android client for driving the rover. I started by getting the video feed working.

You might recall that I'm using a very crude way of pulling video from the rover. The Android phone that controls the rover runs IP Webcam, and the client just pulls static JPEG images and displays them. This was very easy in the PC Java client, but getting it to work on another Android phone took a little more effort.

The GUI components on Android run in an Activity, on the main GUI thread. You can't do any time-consuming work in the GUI thread, or Android will flag the app as not responding. My initial thought was to launch a new thread that repeatedly downloaded the image and displayed it. I quickly found out that you cannot update an ImageView from any thread except the main GUI thread, so I started looking at other approaches.

I settled on AsyncTask, which is made for precisely this sort of thing. If you want to do a time-consuming background task that then interacts with the GUI, AsyncTask is a good place to start. It abstracts away the work of thread handling for you.

I started with an AsyncTask to download and display an image. I used a function that I found (reference given in the code sample below). This worked - it pulled a single image and displayed it. At that point I just needed to figure out how to wrap it in a loop, and I was good.

The trick to running AsyncTasks sequentially is that onPostExecute() runs on the GUI thread when a task completes, so you can launch the next task from there. I just had the AsyncTask call a launcher function to start another instance of itself as it completes.

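If you try something like:

```java
while (true) {
    new ImageDownloader().execute(vidURL);
}
```

it won't work. The loop never returns control to the GUI thread, so the app freezes (onPostExecute() runs on that same thread and never gets a chance to display anything), while new tasks pile up far faster than they can finish. If you instead call the launcher function from the onPostExecute() of the AsyncTask, each download starts only after the previous one has finished, so they run sequentially. You can then add a conditional in your launcher to switch the feed on and off.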

This code was tested and streams 320x240 JPEG frames from one phone to the other fairly smoothly, just like the PC client.

Code:

All this goes in the GUI thread. I start the loop with a call from onCreate():

```java
vidLoop();
```

Launcher function:

```java
void vidLoop() {
    if (connected == 1) {
        ImageDownloader id = new ImageDownloader();
        id.execute(vidURL); // vidURL is a String with the URL of the image you want
    }
}
```

AsyncTask code. This downloads an image from a URL, displays it in an ImageView called imageView1, and then calls vidLoop() on completion to do it again.

```java
// This very useful chunk of code is from http://www.peachpit.com/articles/article.aspx?p=1823692&seqNum=3
// It is an inner class of the Activity; the Activity's source file needs:
//   import android.graphics.Bitmap;
//   import android.graphics.BitmapFactory;
//   import android.os.AsyncTask;
//   import android.util.Log;
//   import android.widget.ImageView;
//   import java.io.InputStream;
//   import java.net.HttpURLConnection;
//   import java.net.URL;

private class ImageDownloader extends AsyncTask<String, Integer, Bitmap> {

    @Override
    protected void onPreExecute() {
        // Setup is done here
    }

    @Override
    protected Bitmap doInBackground(String... params) {
        // Runs on a background thread, so network I/O is allowed here
        try {
            URL url = new URL(params[0]);
            HttpURLConnection httpCon = (HttpURLConnection) url.openConnection();
            if (httpCon.getResponseCode() != HttpURLConnection.HTTP_OK)
                throw new Exception("Failed to connect");
            InputStream is = httpCon.getInputStream();
            try {
                return BitmapFactory.decodeStream(is);
            } finally {
                is.close();
            }
        } catch (Exception e) {
            Log.e("Image", "Failed to load image", e);
        }
        return null;
    }

    @Override
    protected void onProgressUpdate(Integer... params) {
        // Update a progress bar here, or ignore it, it's up to you
    }

    @Override
    protected void onPostExecute(Bitmap img) {
        // Runs on the GUI thread, so updating the ImageView is allowed
        ImageView iv = (ImageView) findViewById(R.id.imageView1);
        if (iv != null && img != null) {
            iv.setImageBitmap(img);
            // Start the next image grab (only reached when this frame succeeded)
            vidLoop();
        }
    }

    @Override
    protected void onCancelled() {
    }
}
```

Current Status and Source

I am currently rebuilding the rover's control server to include an integrated video server, eliminating the need for the IP Webcam software. It returns the phone's latest camera preview frame in response to each HTTP GET request.
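As a rough sketch of the "one frame per GET" idea, here is a tiny HTTP responder on a plain `ServerSocket`, assuming something else keeps the latest JPEG available through a `Supplier<byte[]>` (the `FrameServer` name, the 2 second client timeout, and the status codes chosen are my own illustrations). On Android, the JPEG bytes might come from compressing each preview frame, for example with `YuvImage.compressToJpeg()`; that part is not shown.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.function.Supplier;

// Sketch of a one-frame-per-request HTTP server: each GET is answered with
// the most recent JPEG, then the connection is closed.
final class FrameServer implements Runnable {
    private final ServerSocket server;
    private final Supplier<byte[]> latestJpeg; // returns null until a frame exists

    FrameServer(ServerSocket server, Supplier<byte[]> latestJpeg) {
        this.server = server;
        this.latestJpeg = latestJpeg;
    }

    @Override
    public void run() {
        while (!server.isClosed()) {
            try (Socket client = server.accept()) {
                client.setSoTimeout(2000); // don't let a stalled client hold things up
                BufferedReader in = new BufferedReader(new InputStreamReader(
                        client.getInputStream(), StandardCharsets.US_ASCII));
                String requestLine = in.readLine(); // e.g. "GET /frame.jpg HTTP/1.1"
                if (requestLine == null) continue;
                String header;
                while ((header = in.readLine()) != null && !header.isEmpty()) {
                    // Request headers are ignored in this sketch.
                }
                OutputStream out = client.getOutputStream();
                byte[] jpeg = latestJpeg.get();
                if (!requestLine.startsWith("GET ")) {
                    send(out, "405 Method Not Allowed", null);
                } else if (jpeg == null) {
                    send(out, "503 Service Unavailable", null);
                } else {
                    send(out, "200 OK", jpeg);
                }
            } catch (IOException e) {
                // A client dropping mid-request must not take the server down;
                // if stop() closed the socket, the loop condition ends the thread.
            }
        }
    }

    private static void send(OutputStream out, String status, byte[] body) throws IOException {
        int length = body == null ? 0 : body.length;
        String head = "HTTP/1.0 " + status + "\r\n"
                + (body == null ? "" : "Content-Type: image/jpeg\r\n")
                + "Content-Length: " + length + "\r\n"
                + "Connection: close\r\n\r\n";
        out.write(head.getBytes(StandardCharsets.US_ASCII));
        if (body != null) out.write(body);
        out.flush();
    }

    // Closing the ServerSocket unblocks accept() so run() can exit.
    void stop() throws IOException {
        server.close();
    }
}
```

Clients like the AsyncTask loop above only need the frame URL, and since every response is a complete JPEG followed by a closed connection, nothing has to be resynchronized after a WiFi dropout.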

Currently planned improvements:

1) Add some sensors - hook up the IOIO's ADCs to measure current and pack voltage, and add a Sharp IR distance sensor.

2) Make use of the rover's GPS to experiment with navigating to a series of waypoints.
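For the waypoint experiment, the core arithmetic is the distance and bearing from the current GPS fix to the next waypoint. A minimal sketch using the standard haversine distance and initial great-circle bearing (the `Waypoints` class and the heading-error helper are hypothetical; a real follower would also need a heading source, such as the phone's compass or the GPS course):

```java
// Sketch of the quantities a waypoint follower needs. Angles in degrees.
final class Waypoints {
    static final double EARTH_RADIUS_M = 6_371_000.0; // mean Earth radius

    // Haversine great-circle distance in meters.
    static double distanceMeters(double lat1, double lon1, double lat2, double lon2) {
        double p1 = Math.toRadians(lat1), p2 = Math.toRadians(lat2);
        double dp = p2 - p1, dl = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dp / 2) * Math.sin(dp / 2)
                + Math.cos(p1) * Math.cos(p2) * Math.sin(dl / 2) * Math.sin(dl / 2);
        return 2 * EARTH_RADIUS_M * Math.atan2(Math.sqrt(a), Math.sqrt(1 - a));
    }

    // Initial bearing from point 1 to point 2, clockwise from true north, in [0, 360).
    static double bearingDegrees(double lat1, double lon1, double lat2, double lon2) {
        double p1 = Math.toRadians(lat1), p2 = Math.toRadians(lat2);
        double dl = Math.toRadians(lon2 - lon1);
        double y = Math.sin(dl) * Math.cos(p2);
        double x = Math.cos(p1) * Math.sin(p2) - Math.sin(p1) * Math.cos(p2) * Math.cos(dl);
        return (Math.toDegrees(Math.atan2(y, x)) + 360.0) % 360.0;
    }

    // Steering error in (-180, 180]: positive means turn right (clockwise).
    static double headingError(double currentHeading, double targetBearing) {
        double e = (targetBearing - currentHeading) % 360.0;
        if (e > 180) e -= 360;
        else if (e <= -180) e += 360;
        return e;
    }
}
```

A simple follower could steer in proportion to `headingError(...)` and move on to the next waypoint once `distanceMeters(...)` drops below a few meters, keeping in mind that phone GPS fixes can wander by several meters.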

Jason Bowling
