I was a little disappointed with how long TMD-2 took to capture the state transition table symbols via OCR. As I mentioned in my previous log, the pytesseract library "executes" the whole Tesseract engine for each call, essentially driving it through the command-line interface, and I was making one call per table cell. My planned switch to tesserocr, which integrates directly with Tesseract's C++ API using Cython, didn't pan out because of integration issues that caused segmentation faults.

So I began looking at how I might use Tesseract itself to speed things up. Tesseract has a lot of options. One in particular, --psm (page segmentation mode), lets you tell Tesseract what the image you are passing in represents. Here is the full list of modes:

- 0 = Orientation and script detection (OSD) only.
- 1 = Automatic page segmentation with OSD.
- 2 = Automatic page segmentation, but no OSD, or OCR. (not implemented)
- 3 = Fully automatic page segmentation, but no OSD. (Default)
- 4 = Assume a single column of text of variable sizes.
- 5 = Assume a single uniform block of vertically aligned text.
- 6 = Assume a single uniform block of text.
- 7 = Treat the image as a single text line.
- 8 = Treat the image as a single word.
- 9 = Treat the image as a single word in a circle.
- 10 = Treat the image as a single character.
- 11 = Sparse text. Find as much text as possible in no particular order.
- 12 = Sparse text with OSD.
- 13 = Raw line. Treat the image as a single text line, bypassing hacks that are Tesseract-specific.

I had been using option 10, "Treat the image as a single character." I started thinking about what it would take to use option 8, "Treat the image as a single word." With "words", I knew it would be important to preserve the spacing between symbols so that each symbol could be written into the correct cell.
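A minimal sketch of the two modes with pytesseract, assuming the cell and row images have already been cropped from the table screenshot as PIL images. `psm_config`, `ocr_cell`, and `ocr_row` are hypothetical helper names, not code from the project. pytesseract passes the `config` string through to the tesseract command line, so each call is still a separate Tesseract run; the saving comes from making far fewer calls.

```python
# Minimal sketch of per-cell versus per-row OCR with pytesseract.
# Assumptions: images are PIL images already cropped from the table
# screenshot; psm_config/ocr_cell/ocr_row are hypothetical helper names.

SINGLE_WORD = 8    # --psm 8: treat the image as a single word
SINGLE_CHAR = 10   # --psm 10: treat the image as a single character


def psm_config(mode: int) -> str:
    """Return the option string pytesseract forwards to the tesseract CLI."""
    if mode not in range(14) or mode == 2:  # mode 2 is listed as not implemented
        raise ValueError(f"unsupported page segmentation mode: {mode}")
    return f"--psm {mode}"


def ocr_cell(cell_image) -> str:
    """One tesseract run per table cell (the original approach)."""
    import pytesseract  # imported here so psm_config() works without it installed
    text = pytesseract.image_to_string(cell_image, config=psm_config(SINGLE_CHAR))
    return text.strip()


def ocr_row(row_image) -> str:
    """One tesseract run per table row, read as a single "word"."""
    import pytesseract
    text = pytesseract.image_to_string(row_image, config=psm_config(SINGLE_WORD))
    return text.strip()
```

For the eight 18-character rows read later in this log, going cell by cell means 8 × 18 = 144 Tesseract runs, versus 8 when each row is read as one word.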

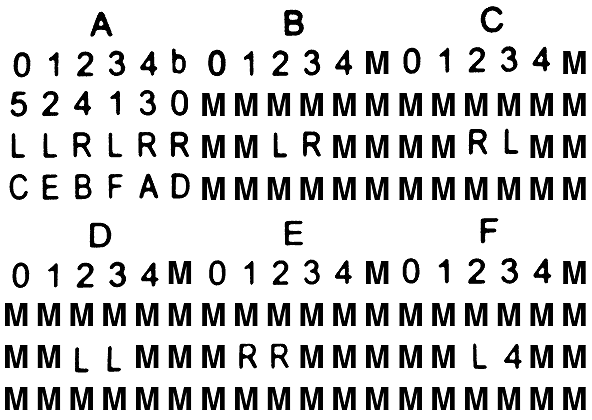

The first thing that I tried was to "read" whole rows from the table across the three state panels. I was hoping that the cells with squares would register as a period (.) or dash (-) or even a space( ), but no such luck. So I took the image of the state table and substituted an M for each empty cell. Here is what a final image that is used for OCR looks like:

And when I OCR each row (skipping the state headers) as a "word", I get the following results back:

- `01234b01234M01234M`
- `524130MMMMMMMMMMMM`
- `LLRLRRMMLRMMMMRLMM`
- `CEBFADMMMMMMMMMMMM`
- `01234M01234M01234M`
- `MMMMMMMMMMMMMMMMMM`
- `MMLLMMMRRMMMMML4MM`
- `MMMMMMMMMMMMMMMMMM`
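Each of these 18-character results spans the three six-cell state panels, so it has to be split back into cells before the symbols can be written into the table. A minimal sketch, assuming a fixed layout of 3 panels × 6 cells and "M" as the empty-cell stand-in; `split_row` and the constants are hypothetical names.

```python
# Minimal sketch: split one OCR'd row "word" back into per-panel cells.
# Assumptions: 3 state panels of 6 cells each (18 characters per row),
# and "M" is the stand-in that was drawn into every empty cell.
from __future__ import annotations

PANELS = 3
CELLS_PER_PANEL = 6
EMPTY_MARKER = "M"


def split_row(row_text: str) -> list[list[str | None]]:
    """Return one list per panel; empty cells come back as None."""
    expected = PANELS * CELLS_PER_PANEL
    if len(row_text) != expected:
        raise ValueError(f"expected {expected} characters, got {len(row_text)}")
    cells = [None if ch == EMPTY_MARKER else ch for ch in row_text]
    # Slice the flat row into consecutive panels of CELLS_PER_PANEL cells.
    return [cells[p * CELLS_PER_PANEL:(p + 1) * CELLS_PER_PANEL] for p in range(PANELS)]
```

Because the spacing is preserved by the M placeholders, a character's position in the string is enough to locate its cell.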

I tried other characters as empty-cell substitutes, like X and +, but M seems to work most reliably. I run some reasonableness checks on the retrieved text: each "word" should be 18 characters long and should only ever contain characters from the tile set. If a check fails, I mark the offending character or row with X's. When retrieving a cell value from the "table" above, if the value is an X, I OCR just that cell's character, since single-character recognition seems to work better than word-based OCR.

Here is my new capture process in action:

I have gotten the whole table refresh process down to under 10 seconds. I'm pretty happy with that.

Michael Gardi

