-

Roz - Dead Reckoning and Navigation

01/27/2021 at 23:38 • 0 commentsI wanted to give Roz the capability to (using dead reckoning) navigate to a waypoint. This is a small part of being able to navigate from one place to another, using a non-raster based map. Most robots these days use super-accurate scanning LIDARs and 3D cameras to build up reasonably accurate raster-based maps of the locations that the robot navigates through. I think this is a bad idea, because it simply isn't scalable. We certainly don't navigate that way as humans, and before everyone had GPS maps in their car we didn't navigate that way driving either.

The way we navigate is to follow paths from one visual landmark to another. We only have a rough idea of where we are at any given point in time, certainly not down to the closest centimeter or even sometimes the closest meter. When you're trying to go from one room in a house to another, it doesn't matter if you're 4 meters from the door or 3 meters - you keep walking until you reach the door. When you go through the door, you follow another path that leads to the next landmark you are looking for, and so on. It doesn't matter exactly where the doorway is, because once you can see it you head towards it until you reach it. You can navigate through a house like that, or from one location to another thousands of kilometers away. It is a very scable navigation and mapping technique.

For now, Roz will only be doing visual landmark recognition using fiducial tags on doorways and other interesting landmarks. Eventually, I would like Roz or a future version to be able to do sufficient real world landmark recognition with a camera to be able to do away with fiducial tags.

To get started, however, doing dead reckoning from one location to another (given a heading and a rough distance) is a good place to start. Roz does odometry right now by estimating distance travelled by a combination of the gait speed and the compass heading from the IMU in his head. Its very crude, but for what I'm trying to accomplish I don't need super high accuracy.

We start at the location (0, 0), and are given a compass heading and the number of mm we should travel. We calculate the (X, Y) end location, and then set up a PID loop, with constants that seem to give reasonably good results.

self.start_location = self.robot.odometer.position radian_heading = math.radians(self.segment.heading) self.end_location = XYPoint(self.start_location.x, self.start_location.y) self.end_location.x += self.segment.distance * math.cos(radian_heading) self.end_location.y += self.segment.distance * math.sin(radian_heading) # we've reached the goal when we're less than 150mm from the end location self.reached_goal_distance = 150 end = XYPoint(int(self.end_location.x), int(self.end_location.y)) log('End location: {}'.format(end)) self.delta_heading = 0 self.kp = 0.007 self.ki = 0 self.kd = 0.0005 log('Kp: {} Ki: {} Kd: {}'.format(self.kp, self.ki, self.kd)) self.pid = PID(self.kp, self.ki, self.kd, setpoint=self.delta_heading, output_limits=(-0.5,0.5))Once everything is set up, we run in a loop, grabbing the current heading, and calculating the heading offset, which we force to be between -180 and 180 degrees. We feed that delta heading into the PID loop, and get the output value, which is radians per step cycle, and feed that into the robot's inverse kinematics system to tell the robot to turn at that speed as it is walking forwards.

We also do obstacle detection and avoidance, but I've removed that code from this example for clarity.

heading = self.robot.imu_sensor.yaw heading_to_goal = self.robot.odometer.position.heading_to(self.end_location) self.delta_heading = heading_to_goal - heading # change delta_heading to be between -180 and 180 if self.delta_heading < -180: self.delta_heading += 360 elif self.delta_heading > 180: self.delta_heading -= 360 new_r_speed = self.pid(self.delta_heading) log('|R_Speed,{},{},{}'.format(new_r_speed, heading, self.delta_heading)) self.robot.set_rotation_speed(new_r_speed)All of this is implemented in a finite state machine, so the first code segment above is run during setup of the dead reckoning state machine, and the second segment above is in the walking state handler, so it is called iteratively until something happens to make it change state:

distance_to_goal = self.robot.odometer.position.distance_to(self.end_location) if distance_to_goal <= self.reached_goal_distance: log('Reached goal, finishing') self.state_machine.transition_to(self.finish_state)The idea is, once we get to the end location, we can start looking for a visual landmark, and servo the robot to that landmark. We then will get the next segment in the path towards our goal, and start the process over again.

Here's a quick video showing the dead reckoning navigator working:

-

ROZ WORKSHOP

01/17/2021 at 14:39 • 0 commentsI made a new video, that describes a lot about how Roz works, what parts go into the robot, and so on...

-

ROZ LIDAR SCAN

01/13/2021 at 13:30 • 0 commentsDid some work last night on doing a LIDAR scan by rotating the head and using three of the VL53L1X sensors (left, front, and right-facing) to build up a 270 degree scan. Here's a short video showing the scan:

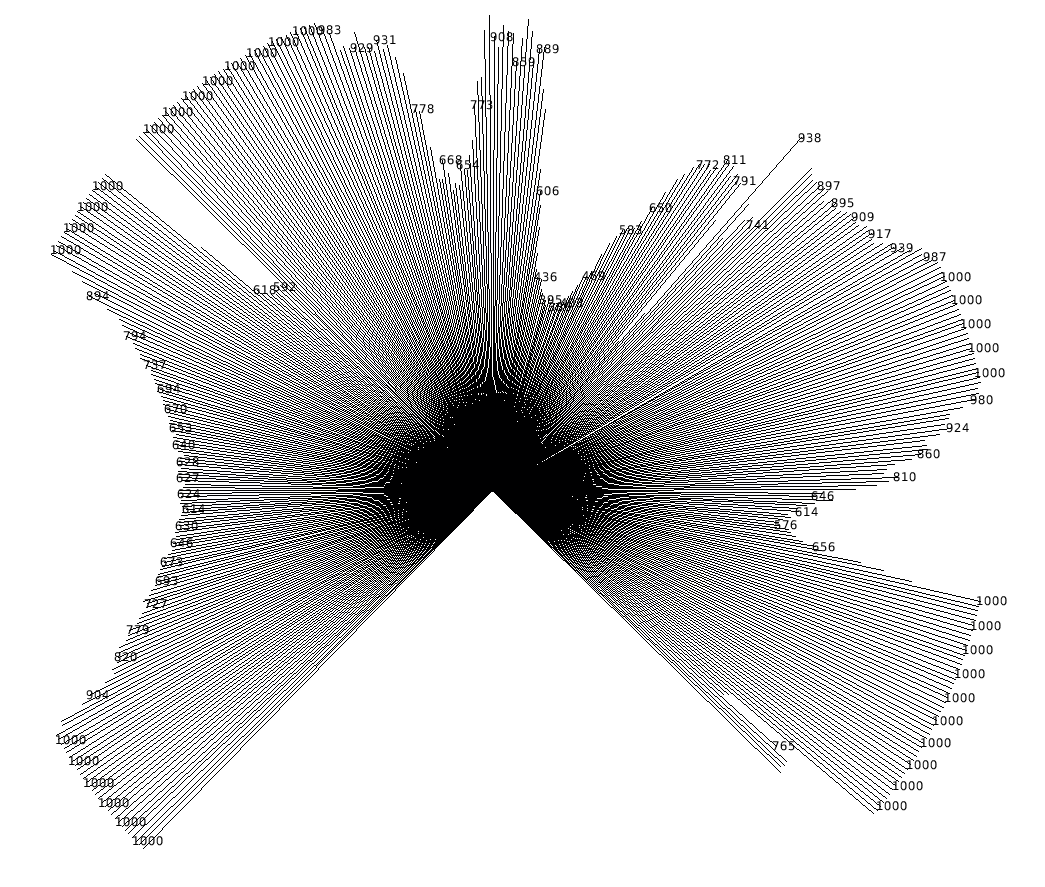

The scan runs at a resolution of about 0.58 degrees, since that is a function of the servo rotation range (0-300 degrees) and the servo resolution (10 bits). I'm skipping the servo position by 2 to make it run in a reasonble amount of time, so I get 462 sensor readings over a 270 degree arc. As I mention in the video description, Roz only needs to rotate his head 90 degrees to get the full 270 sweep, since the sensors are spaced 90 degrees apart. I'm maxing the results at 1 meter, even though the sensor reads much further than that, because for obstacle avoidance I don't care about stuff farther than that right now.

You can also see in the video how he shifts his body forward a bunch during the scan, so the side-facing sensors don't end up seeing the front legs at the extreme ends of the scan. This is done using the body IK, basically by adjusting a variable (forward body offset).

Here's the output:

![]()

Roz is supposed to end up pointing his head in the direction of the largest opening at the end, but I don't think that is quite working yet, since the largest opening is the one on the bottom right, and its not phsyically possible for him to turn his head that far. Regardless, he knows where it is, so he will be able to rotate himself in place to face that direction.

I did something similar with an earlier version of Roz back in 2015, but I only had Sharp IR sensors, and I was only using the front one, so the resolution was pretty terrible. Same overall concept applies though, and I'm very happy with how this one turned out.

Jon Hylands

Jon Hylands