A good OCR system requires the following:

- Agile, iterative model development: training models for new scenarios and deploying them online, which reduces the cost of continuous deployment

- An interchangeable lens to suit the needs of different scenes

- A complete camera system that supports global exposure and a range of resolutions and frame rates, to simplify deployment

- An open-source system that makes it easy to develop and integrate your own applications

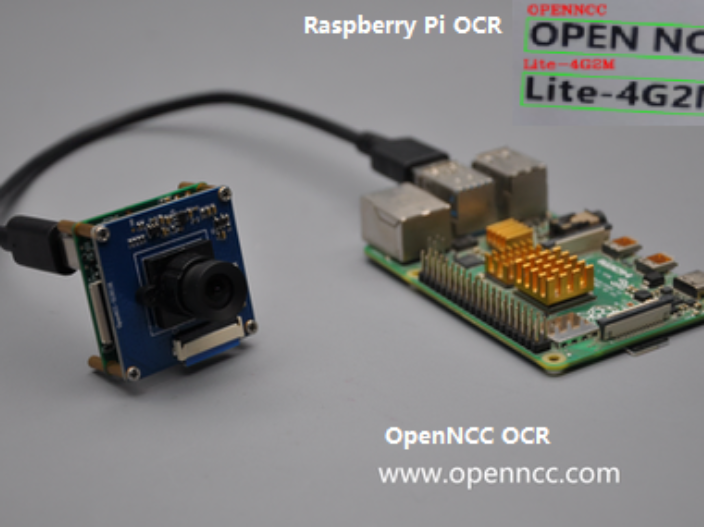

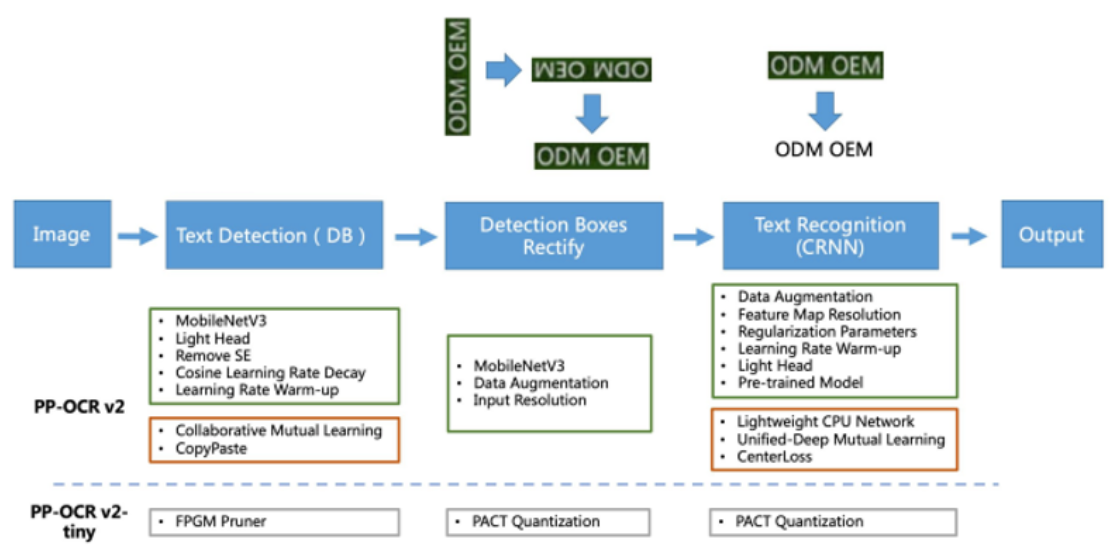

We chose the combination of OpenNCC and Raspberry Pi to build the OCR system because it offers all of the advantages above. The OpenNCC OCR solution uses PaddleOCR and Intel OpenVINO. PaddleOCR aims to create multilingual, awesome, leading, and practical OCR tools that help users train better models and apply them in practice.

We split the workload between the two devices, deploying some inference models on OpenNCC and others on the Raspberry Pi. The PaddleOCR model was converted through ONNX into a format that OpenVINO supports and then deployed to run on OpenNCC. Because the Raspberry Pi has good OpenCV support, part of the image preprocessing and model inference runs on the Raspberry Pi.
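The split between the camera and the Raspberry Pi can be pictured as a two-stage producer/consumer pipeline. The following is only a minimal sketch of that idea, not the OpenNCC SDK or the code in the repo: `camera_stage`, `host_stage`, `run_pipeline`, and the `recognize` callback are hypothetical names, and the "frames" are plain Python values standing in for whatever the first-stage model on the camera actually produces.

```python
import queue
import threading


def camera_stage(first_stage_outputs, out_q):
    """Stand-in for the camera side: forward each (frame_id, items) pair."""
    for frame_id, items in first_stage_outputs:
        out_q.put((frame_id, items))
    out_q.put(None)  # sentinel: no more frames


def host_stage(in_q, recognize, results):
    """Stand-in for the Raspberry Pi side: process every item of every frame."""
    while True:
        message = in_q.get()
        if message is None:
            break
        frame_id, items = message
        results.append((frame_id, [recognize(item) for item in items]))


def run_pipeline(first_stage_outputs, recognize):
    """Run both stages concurrently and return the per-frame results in order."""
    q = queue.Queue(maxsize=4)  # bounded, so the producer cannot outrun the consumer
    results = []
    consumer = threading.Thread(target=host_stage, args=(q, recognize, results))
    consumer.start()
    camera_stage(first_stage_outputs, q)
    consumer.join()
    return results
```

The bounded queue is the design choice worth noting: if the second stage is slower than the first, the producer blocks instead of letting frames pile up in memory.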

Now, let's start developing the OCR solution.

Prepare the hardware

- OpenNCC Edge AI camera

- Raspberry Pi 4B

- USB cable

- 5 V power adapter

- Ethernet cable

Connecting OpenNCC and Raspberry Pi

- Connect the Raspberry Pi 4 Model B and OpenNCC with a USB 3.0 cable

Configuring the Raspberry Pi

Run the following commands on the Raspberry Pi itself. Connect a monitor, mouse, and keyboard to the Raspberry Pi first.

- Install libusb, OpenCV, and FFmpeg on the Raspberry Pi

$ sudo apt-get install libopencv-dev -y

$ sudo apt-get install libusb-dev -y

$ sudo apt-get install libusb-1.0-0-dev -y

$ sudo apt-get install ffmpeg -y

- If you want to use Python

$ sudo apt-get install python3-opencv -y
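Before moving on, it can help to confirm that the installs took effect. The sketch below is a hypothetical helper (the name `check_dependencies` is not part of the repo) that checks whether the `ffmpeg` command is on the PATH and whether the `cv2` module provided by python3-opencv can be found. It does not check the libusb and OpenCV development headers, which only matter at build time.

```python
import importlib.util
import shutil


def check_dependencies(commands=("ffmpeg",), modules=("cv2",)):
    """Return {name: bool} for each command on PATH and each importable module."""
    status = {}
    for cmd in commands:
        status[cmd] = shutil.which(cmd) is not None
    for mod in modules:
        status[mod] = importlib.util.find_spec(mod) is not None
    return status


if __name__ == "__main__":
    for name, ok in check_dependencies().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

Saved to a file and run with `python3`, it prints one line per dependency, so a missing package is visible before the build step.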

- Clone the OpenNCC OCR repo onto the Raspberry Pi. It uses PaddleOCR on the Paddle-Lite platform

$ git clone https://gitee.com/eyecloud/openncc_hub.git

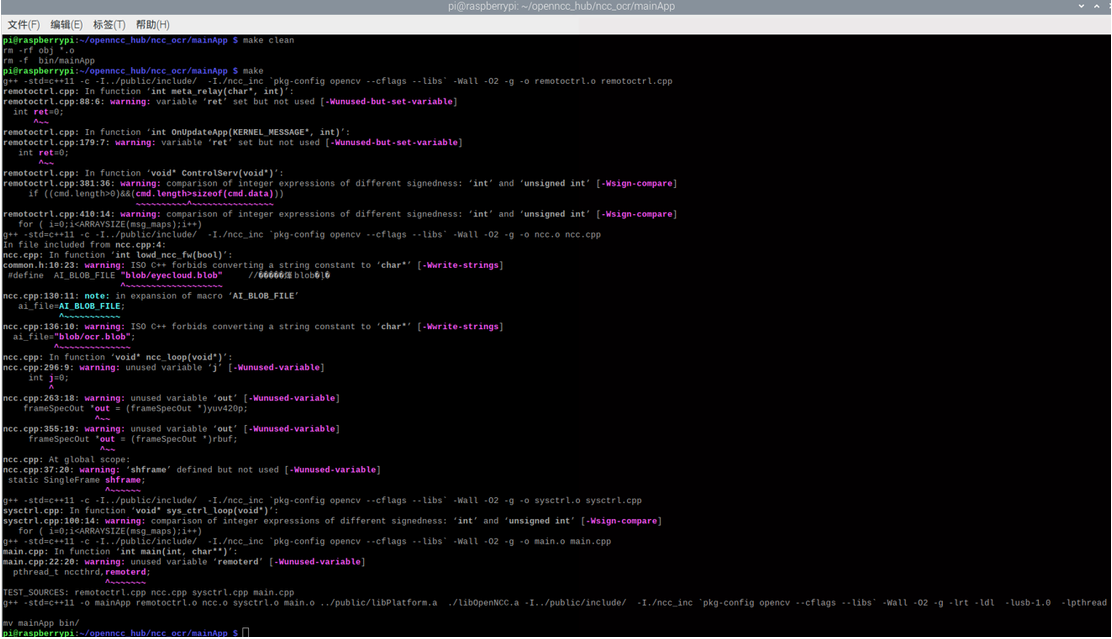

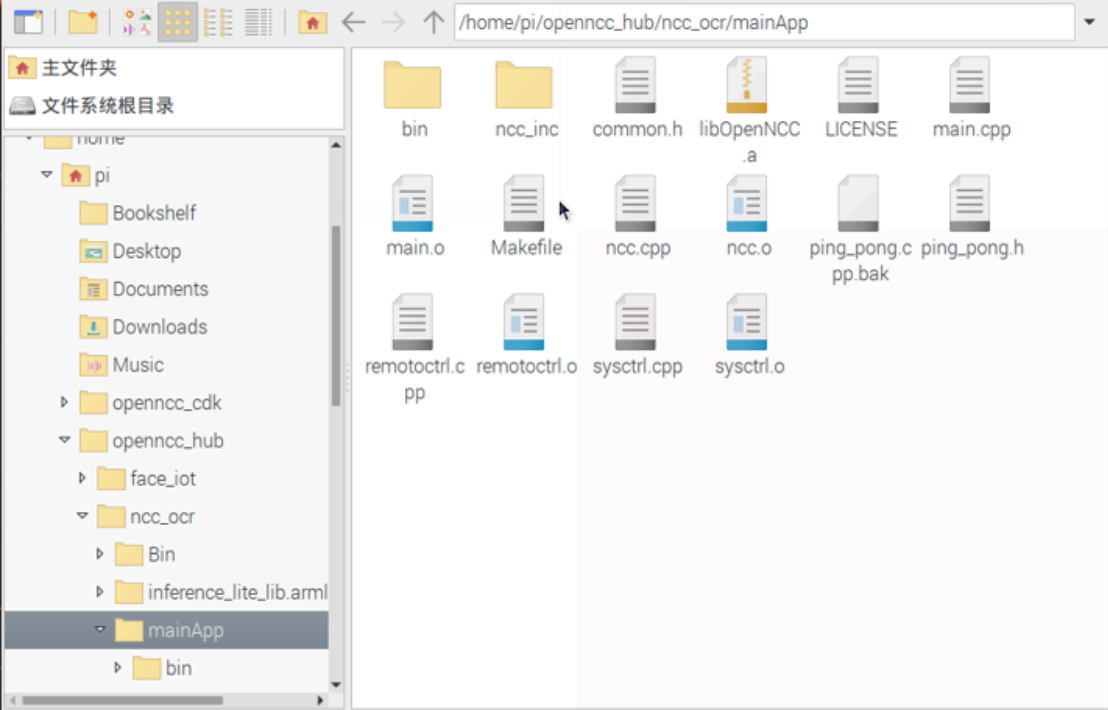

- Build the demo

$ cd openncc_hub/ncc_ocr/mainApp

$ sudo make clean

$ sudo make

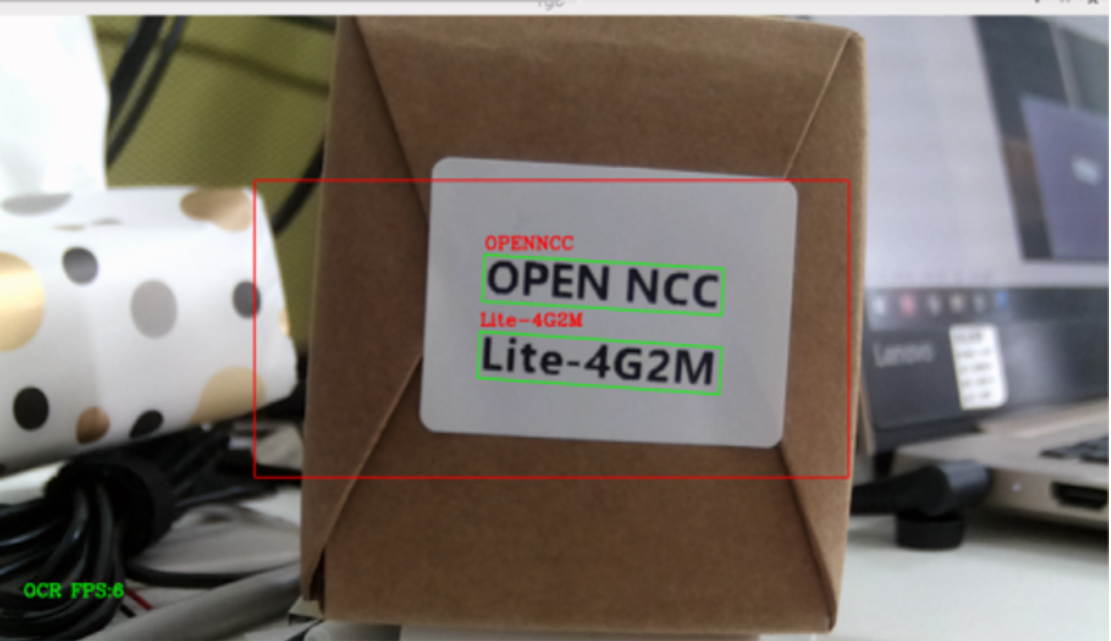

- Run the main application to deploy the first AI model to the OpenNCC camera and stream the captured video to the Raspberry Pi

$ cd openncc_hub/ncc_ocr/mainApp/bin

$ sudo ./mainApp

- Enter inference_lite_lib.armlinux.armv7hf/demo/cxx/ocr to build the PaddleOCR thread application

$ cd openncc_hub/ncc_ocr/inference_lite_lib.armlinux.armv7hf/demo/cxx/ocr

$ sudo make clean

$ sudo make

- After the build succeeds, run the following, replacing your_debug_dir with the path to the debug directory:

$ cd openncc_hub/ncc_ocr/inference_lite_lib.armlinux.armv7hf/demo/cxx/ocr/debug

$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib:your_debug_dir

$ ./ocr_db_crnn
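If you prefer to launch the demo from Python instead of exporting the variable by hand, the same steps can be sketched as follows. This is an illustrative wrapper, not something shipped in the repo: `build_library_path` and `run_ocr` are hypothetical names, and the debug directory is whatever path you would otherwise substitute for your_debug_dir.

```python
import os
import subprocess


def build_library_path(extra_dirs, current=None):
    """Append extra_dirs to an LD_LIBRARY_PATH string, skipping empties and duplicates."""
    if current is None:
        current = os.environ.get("LD_LIBRARY_PATH", "")
    parts = [p for p in current.split(":") if p]
    for d in extra_dirs:
        if d and d not in parts:
            parts.append(d)
    return ":".join(parts)


def run_ocr(debug_dir, binary="./ocr_db_crnn"):
    """Run the demo binary from debug_dir with the library search path extended."""
    debug_dir = os.path.abspath(debug_dir)  # the loader needs an absolute path
    env = dict(os.environ)
    env["LD_LIBRARY_PATH"] = build_library_path(["/usr/local/lib", debug_dir])
    return subprocess.run([binary], cwd=debug_dir, env=env, check=True)
```

Filtering out empty entries avoids the leading colon that the plain `export` produces when LD_LIBRARY_PATH was previously unset; an empty entry makes the loader search the current directory as well.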

Point the camera at the target text, and the recognition results appear in the video stream together with their locations.
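To make "a result with its location" concrete, here is a small sketch of how one recognized string and its text region could be turned into an overlay label. It assumes, purely for illustration, that a region arrives as four (x, y) corner points and that each result carries a confidence score; the demo's actual output format may differ, and `bounding_rect` and `format_result` are hypothetical helpers.

```python
def bounding_rect(quad):
    """Axis-aligned (x, y, width, height) enclosing a list of (x, y) points."""
    xs = [p[0] for p in quad]
    ys = [p[1] for p in quad]
    return min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)


def format_result(text, score, quad):
    """Build a one-line label for a recognized string and its region."""
    x, y, w, h = bounding_rect(quad)
    return f"{text} ({score:.2f}) at x={x}, y={y}, w={w}, h={h}"
```

The rectangle and label are the kind of values one could then pass to a drawing routine such as OpenCV's rectangle and text functions.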

Johanna Shi


Dmitry
