Technical Details:

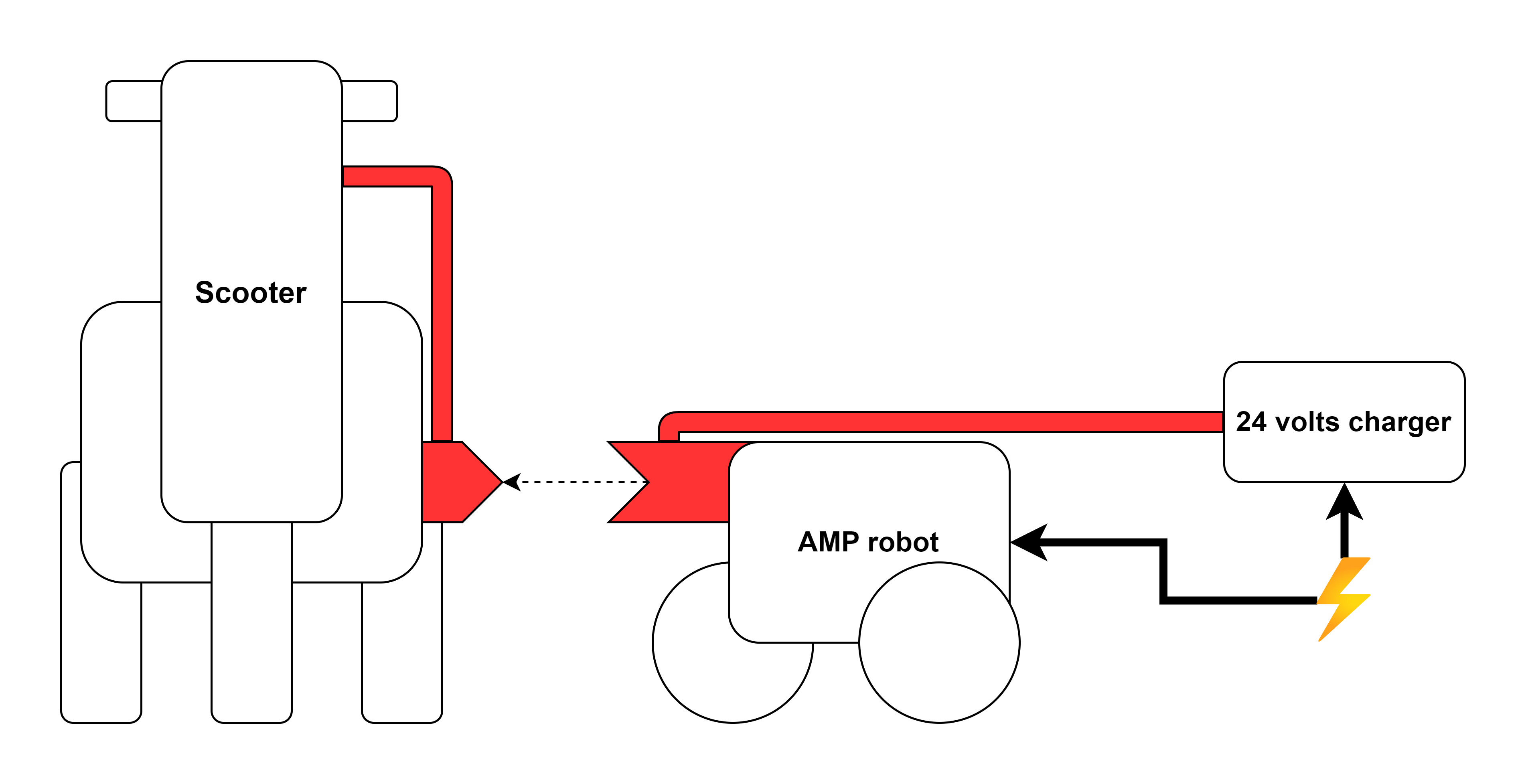

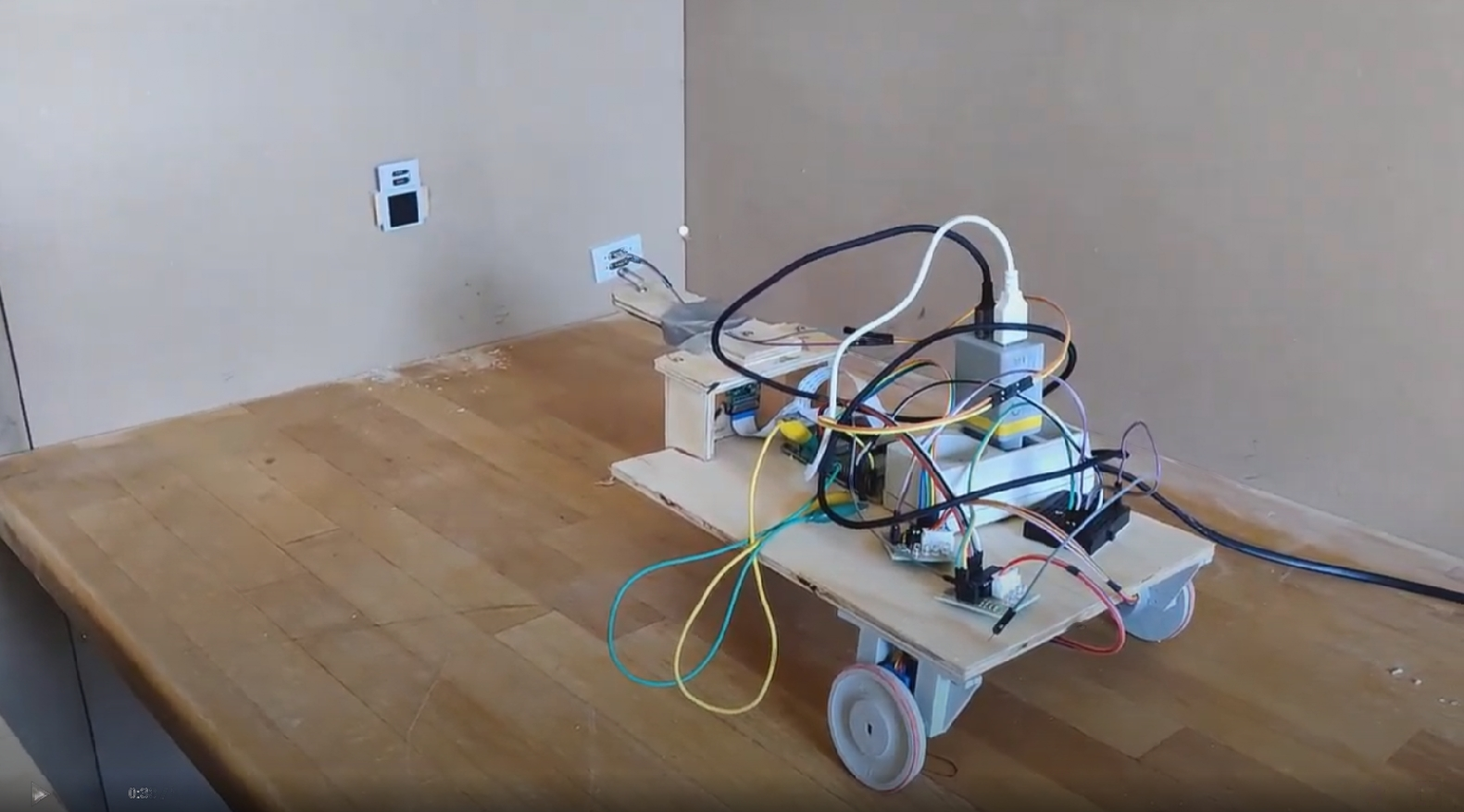

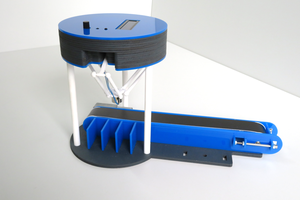

AMP Robot is built around a Raspberry Pi 3 with a Raspberry Pi Camera Module, two 28BYJ-48 stepper motors driven by ULN2003AN stepper motor modules, and custom 3D-printed parts. Software written in Python uses OpenCV computer vision to navigate the robot autonomously to the mobility scooter. A custom-designed connector with magnetic pogo pin contacts then establishes a secure power link between AMP Robot and the scooter's male and female XLR ports.
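The write-up does not include the navigation code. The sketch below shows one way vision-based steering toward the scooter could drive the two 28BYJ-48 motors. It makes the following assumptions:

- A camera frame has already been thresholded into a binary mask of a visual marker on the scooter, for example with OpenCV's `cv2.inRange`.
- The marker's centroid is computed from image moments, as `cv2.moments` would give via `m10/m00` and `m01/m00`.
- The marker's horizontal offset is turned into a proportional differential steering command.
- The motors are stepped through the common 8-phase half-step coil sequence used with ULN2003 driver boards.

The marker-based approach, the function names, the gain and the base step count are all hypothetical illustrations, not the project's code. GPIO output is left out so the sketch runs without hardware.

```python
# Hypothetical sketch of camera-guided steering; not the AMP Robot source code.
from typing import List, Optional, Tuple

# Common 8-phase half-step sequence for a 28BYJ-48 on a ULN2003 board.
# Each tuple is the on/off state of driver inputs IN1..IN4.
HALF_STEP_SEQUENCE = [
    (1, 0, 0, 0),
    (1, 1, 0, 0),
    (0, 1, 0, 0),
    (0, 1, 1, 0),
    (0, 0, 1, 0),
    (0, 0, 1, 1),
    (0, 0, 0, 1),
    (1, 0, 0, 1),
]


def mask_centroid(mask: List[List[int]]) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of nonzero pixels (m10/m00, m01/m00),
    or None when the marker is not visible in the mask."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1
                m10 += x
                m01 += y
    if m00 == 0:
        return None
    return m10 / m00, m01 / m00


def steering_steps(cx: float, frame_width: int,
                   base_steps: int = 64, gain: float = 1.0) -> Tuple[int, int]:
    """Proportional steering for one control cycle: map the marker's horizontal
    offset to (left, right) half-step counts. base_steps and gain are assumed values."""
    half_width = (frame_width - 1) / 2
    # Normalized error in [-1, 1]; negative means the marker is left of center.
    error = (cx - half_width) / half_width
    correction = round(gain * error * base_steps)
    # Marker to the right: left wheel faster, right wheel slower, so the robot turns right.
    left = max(0, base_steps + correction)
    right = max(0, base_steps - correction)
    return left, right


def coil_patterns(steps: int, start_phase: int = 0,
                  reverse: bool = False) -> List[Tuple[int, int, int, int]]:
    """Coil states for `steps` half-steps. On the Pi, each tuple would be written
    to four GPIO pins with a short delay (about a millisecond or two) between them."""
    direction = -1 if reverse else 1
    n = len(HALF_STEP_SEQUENCE)
    return [HALF_STEP_SEQUENCE[(start_phase + direction * i) % n] for i in range(steps)]
```

Each control cycle would work as follows:

1. Capture and threshold a frame.
2. Call `mask_centroid`. If it returns `None`, the robot could rotate in place to search.
3. Otherwise, step each motor by the counts from `steering_steps`.

Because the two wheel motors are usually mounted facing opposite directions, one of them would typically be driven with `reverse=True` to move the robot forward.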

- Reliable Power Connection: The custom-designed connector and magnetic pogo pin contacts provide a robust, secure power link.

- User-Friendly Operation: AMP Robot needs little or no user input to start the power connection process.

- Expandability: The modular design allows for future enhancements and customization to accommodate different scooter models.
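The write-up does not describe how AMP Robot sequences a connection attempt without user input. One plausible structure, sketched here purely as an assumption, is a small state machine with four states:

- Search for the scooter.
- Approach while steering on the target.
- Creep forward slowly once the target fills enough of the frame.
- Stop when the connector reports contact.

The states, the `CLOSE_ENOUGH` threshold and the sensor inputs (`target_visible`, `target_area_fraction`, `contact_detected`) are hypothetical names.

```python
# Hypothetical docking state machine; states, inputs and threshold are assumptions.
from dataclasses import dataclass
from enum import Enum, auto
from typing import Iterable, List


class DockState(Enum):
    SEARCH = auto()       # rotate in place until the scooter's target is seen
    APPROACH = auto()     # drive toward the target, steering on its centroid
    FINAL_ALIGN = auto()  # creep forward slowly so the magnetic contacts can seat
    CONNECTED = auto()    # power link established; motors stopped


@dataclass(frozen=True)
class Observation:
    target_visible: bool
    target_area_fraction: float  # share of the frame covered by the target (hypothetical)
    contact_detected: bool       # e.g. voltage sensed on the connector (hypothetical)


CLOSE_ENOUGH = 0.25  # assumed threshold for switching to the slow final approach


def next_state(state: DockState, obs: Observation) -> DockState:
    # Contact overrides everything: stop as soon as the connector reports power.
    if obs.contact_detected:
        return DockState.CONNECTED
    if not obs.target_visible:
        # Very close to the scooter the target may leave the camera's view, so keep
        # creeping forward (a real controller would also need a timeout here).
        if state is DockState.FINAL_ALIGN:
            return DockState.FINAL_ALIGN
        return DockState.SEARCH
    if obs.target_area_fraction >= CLOSE_ENOUGH:
        return DockState.FINAL_ALIGN
    return DockState.APPROACH


def run(observations: Iterable[Observation],
        state: DockState = DockState.SEARCH) -> List[DockState]:
    """Feed a sequence of observations through the state machine."""
    history = []
    for obs in observations:
        state = next_state(state, obs)
        history.append(state)
    return history
```

Keeping the transition logic a pure function of the current state and one observation makes it easy to test without the robot, as the usage with `run` shows.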

BOM:

- Raspberry Pi 3: $70 (est.)

- Raspberry Pi Camera Module: $30

- 28BYJ-48 Stepper Motors (2): $8

- ULN2003AN Stepper Motor Modules (2): $4

- Magnetic Pogo Pin Connectors: $10

- XLR Connectors: $5

Total: $130 (est.)
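As a quick check of the estimate, the listed part prices sum to $127, which rounds to the stated $130. The custom 3D-printed parts are not itemized, so they are not included in this sum.

```python
# Sum of the itemized BOM prices (USD) as listed above.
BOM = {
    "Raspberry Pi 3": 70,  # estimated
    "Raspberry Pi Camera Module": 30,
    "28BYJ-48 Stepper Motors (2)": 8,
    "ULN2003AN Stepper Motor Modules (2)": 4,
    "Magnetic Pogo Pin Connectors": 10,
    "XLR Connectors": 5,
}

total = sum(BOM.values())
print(f"Listed parts: ${total}")            # Listed parts: $127
print(f"Rounded: ${round(total, -1)}")      # Rounded: $130
```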

Niklas Frost

Ted Huntington

Gaultier Lecaillon

Will Donaldson

Pascal Buerger