Two things that inspire an electronics hacker are cheap micros and GRB LEDs like the WS2812. There are many WS2812 drivers, including some for WCH's CH32V003 RISC-V chip (about $0.14 for the SOP-8 version in quantities of 500).

But most drivers I have seen bit-bang a pin, tying up the processor while it outputs the required encoding at about 1.5 µs per bit. What a waste!

I had an idea that SPI could be used to do the encoding, and the CH32V003 has ... DMA!

The result was an encoding that uses 12 SPI bytes for the 24 bits each LED requires, i.e. 4 SPI bits per LED bit. Wrapping my head around the concept of DMA buffers versus absolute LED encoding took some time, but I triumphed.
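To make the 12-bytes-per-LED idea concrete, here is a minimal sketch of one way such an encoding can work. The 4-bit symbols (`1000` for a 0 bit, `1110` for a 1 bit) and the helper name `encode_led()` are my assumptions for illustration, not necessarily what this driver uses; the right symbols depend on the SPI clock chosen.

```c
#include <stdint.h>

/* Assumed 4-bit SPI symbols for one WS2812 data bit (illustrative only). */
#define SYM_0 0x8u   /* 1000: short high pulse */
#define SYM_1 0xEu   /* 1110: long high pulse  */

#define BYTES_PER_LED 12  /* 24 data bits * 4 SPI bits / 8 */

/* Hypothetical helper: encode one LED into 12 SPI bytes.
 * The WS2812 expects green, then red, then blue, MSB first. */
static void encode_led(uint8_t r, uint8_t g, uint8_t b,
                       uint8_t out[BYTES_PER_LED])
{
    uint32_t grb = ((uint32_t)g << 16) | ((uint32_t)r << 8) | b;

    for (int i = 0; i < BYTES_PER_LED; i++) {
        /* Each SPI byte carries two data bits: high nibble first. */
        uint8_t hi = ((grb >> (23 - 2 * i)) & 1u) ? SYM_1 : SYM_0;
        uint8_t lo = ((grb >> (22 - 2 * i)) & 1u) ? SYM_1 : SYM_0;
        out[i] = (uint8_t)((hi << 4) | lo);
    }
}
```

With these symbols, a colour byte of 0x00 becomes four 0x88 bytes and 0xFF becomes four 0xEE bytes, so the SPI peripheral shifts out the pulse widths with no CPU involvement per bit.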

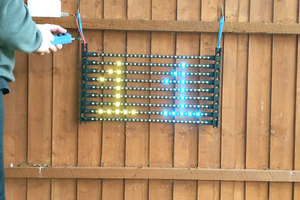

For the Zippy Worm test in the video, here are some scope timings taken with the MounRiver / Eclipse IDE on a CH32V003 dev board. The string was programmed with 192 LEDs.

The Zippy Worm is a 16-LED animation pattern that requires an update after each write of the string.
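On the DMA buffer versus LED encoding question: 192 LEDs at 12 bytes each would need 2304 bytes if fully pre-encoded, which is more than the CH32V003's 2 KB of SRAM. One common way around that is to keep the colours in a compact array and refill a small circular DMA buffer half at a time. The sketch below illustrates that idea only; the chunk size, the names `refill_half()`, `led_rgb`, and `dma_buf`, and the bit symbols are all assumptions, and the DMA/SPI setup itself is omitted.

```c
#include <stdint.h>
#include <string.h>

#define NUM_LEDS       192
#define BYTES_PER_LED  12
#define LEDS_PER_HALF  8     /* assumed chunk size */
#define HALF_BYTES     (LEDS_PER_HALF * BYTES_PER_LED)

static uint8_t  led_rgb[NUM_LEDS][3];     /* compact colour store: 576 bytes */
static uint8_t  dma_buf[2 * HALF_BYTES];  /* circular SPI buffer: 192 bytes  */
static uint16_t next_led;                 /* next LED waiting to be encoded  */

/* Two data bits -> one SPI byte, using assumed symbols 1000 (0) and 1110 (1). */
static const uint8_t PAIR[4] = { 0x88, 0x8E, 0xE8, 0xEE };

/* Hypothetical hook: call with half = 0 on the DMA half-transfer event and
 * half = 1 on transfer-complete, so the CPU refills the half the DMA just left. */
static void refill_half(int half)
{
    uint8_t *dst = &dma_buf[half * HALF_BYTES];

    for (int n = 0; n < LEDS_PER_HALF; n++, next_led++) {
        if (next_led >= NUM_LEDS) {
            /* Past the end of the string: hold the line low (latch period). */
            memset(dst, 0, BYTES_PER_LED);
        } else {
            const uint8_t *c = led_rgb[next_led];
            uint32_t grb = ((uint32_t)c[1] << 16) | ((uint32_t)c[0] << 8) | c[2];
            for (int i = 0; i < BYTES_PER_LED; i++)
                dst[i] = PAIR[(grb >> (22 - 2 * i)) & 3u];
        }
        dst += BYTES_PER_LED;
    }
}
```

In this arrangement the RAM cost is 576 + 192 = 768 bytes instead of 2304, and the time the CPU spends inside a routine like `refill_half()` is the "loading the DMA buffer" share that the measurements compare against the wait loop.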

Percentage of total processing time spent loading the DMA buffer (the remainder is spent in a wait loop):

- 32.5% compiled with 'fast' optimization.

- 87% with no optimization.

- 43% with Optimize (O).

I then modified the code in setLed() to remove the unnecessary amplitude multiply, as the pattern already has amplitudes for each color.

- 17.5% with Optimize (O) and the unneeded amplitude multiply removed from setLed().

- 14% with the same change and 'fast' optimization.

I think it would be interesting to use the spare processing time to control the patterns over the I2C interface.

Have fun!

AjG

A. J. Griggs
