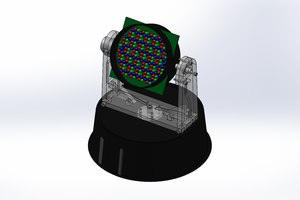

The figure shown on the project board is my first attempt to demonstrate the multispectral computer vision plant biofeedhack system concept. The picture was taken with a modified LED grow light and a Raspberry Pi NoIR camera. It shows several images of a leaf from a five-week-old basil plant, each illuminated by a different LED wavelength. The top left is a computer-vision-enhanced image, and the top right is a white-light image. The images below were recorded with ultraviolet (uv1, uv2), blue (blu), green (grn), red and infrared (ir) LED light. The remaining pictures are processed composite images that highlight color contrast. Computer vision software (SimpleCV) extracts the leaf region (colored dark green) and measures its area in pixels, its length, its width and its perimeter (bottom text). The picture shows that a measurement system can be built to characterize plant growth and multispectral leaf reflectance. The measurements can then be used to tailor the LED light for different growth conditions.
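The measurement step can be illustrated with a minimal, dependency-free sketch. This is not the SimpleCV code used for the figure: the function names (`segment`, `measure_leaf`, `mean_reflectance`), the fixed brightness threshold and the list-of-rows image format are all assumptions made for illustration. It thresholds a grayscale image into a leaf mask, then reports pixel area, bounding-box length and width, a perimeter counted in pixel edges, and the mean brightness of one band inside the mask.

```python
def segment(gray, threshold):
    """Return a binary mask (rows of 0/1) marking pixels >= threshold.

    Assumption: the leaf is brighter than the background in this image.
    """
    return [[1 if value >= threshold else 0 for value in row] for row in gray]


def measure_leaf(mask):
    """Measure a binary leaf mask given as a list of rows of 0/1 values.

    Returns pixel area, bounding-box length and width, and a perimeter
    counted as the number of leaf-pixel edges that touch background
    (or the image border).
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    area = 0
    perimeter = 0
    min_r, max_r, min_c, max_c = rows, -1, cols, -1
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            area += 1
            min_r, max_r = min(min_r, r), max(max_r, r)
            min_c, max_c = min(min_c, c), max(max_c, c)
            # Check the four neighbours; each exposed edge adds one unit.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                outside = nr < 0 or nr >= rows or nc < 0 or nc >= cols
                if outside or not mask[nr][nc]:
                    perimeter += 1
    if area == 0:
        return {"area": 0, "length": 0, "width": 0, "perimeter": 0}
    height = max_r - min_r + 1
    span = max_c - min_c + 1
    return {
        "area": area,
        "length": max(height, span),  # longer bounding-box side
        "width": min(height, span),   # shorter bounding-box side
        "perimeter": perimeter,
    }


def mean_reflectance(band, mask):
    """Mean pixel value of one band image (e.g. 'ir') inside the leaf mask."""
    values = [
        band[r][c]
        for r in range(len(mask))
        for c in range(len(mask[r]))
        if mask[r][c]
    ]
    return sum(values) / len(values) if values else 0.0
```

Applying `mean_reflectance` with the same mask to each band image (uv1, uv2, blu, grn, red, ir) would give one reflectance value per wavelength. These values are in raw pixel units, so comparing them across bands or sessions would still require calibration against a reference target.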

Update Mar 2019.

Several related computer vision projects using OpenCV are posted by:

Sergio Ghirardelli

Tim Gremalm

The Technocrat

Celine

The investment community has begun to respond, if not in practice then at least in words, to Ross's declaration that 2019 is "the year the world woke up to climate change." Environmental, social, and governance (ESG) concerns are increasingly recognised as influencing decisions in the capital markets. Fund managers, private wealth managers, investment banks, pension plans, and individual investors now routinely incorporate ESG considerations into their investment analyses, and businesses regularly publicise their ESG credentials. By 2020, "68 percent of UK savers wanted their investments to evaluate the impact on people and planet alongside the financial performance," as stated by Ross.

More than just a Glance at the Whole

The book "Investing to Save the Planet" is aimed at private investors. It succeeds in its goal of presenting a concise, clear introduction to environmentally responsible investing without omitting essential material.

The chapters move quickly and combine a great deal of information with the author's personal anecdotes and experiences. This is not merely a feel-good read. Readers drawn to this book likely value ESG for deeply personal reasons, and the personal narratives place the quantitative facts in a rich and nuanced context.

Each chapter ends with a practical piece of advice labeled "What Should I Do?" Ross shows a deep understanding of her audience's needs by presenting separate lists of proposed actions for low-, medium-, and high-risk investors. For more information, see:

https://pureclimatestocks.com/book-review-investing-to-save-the-planet-alice-ross/