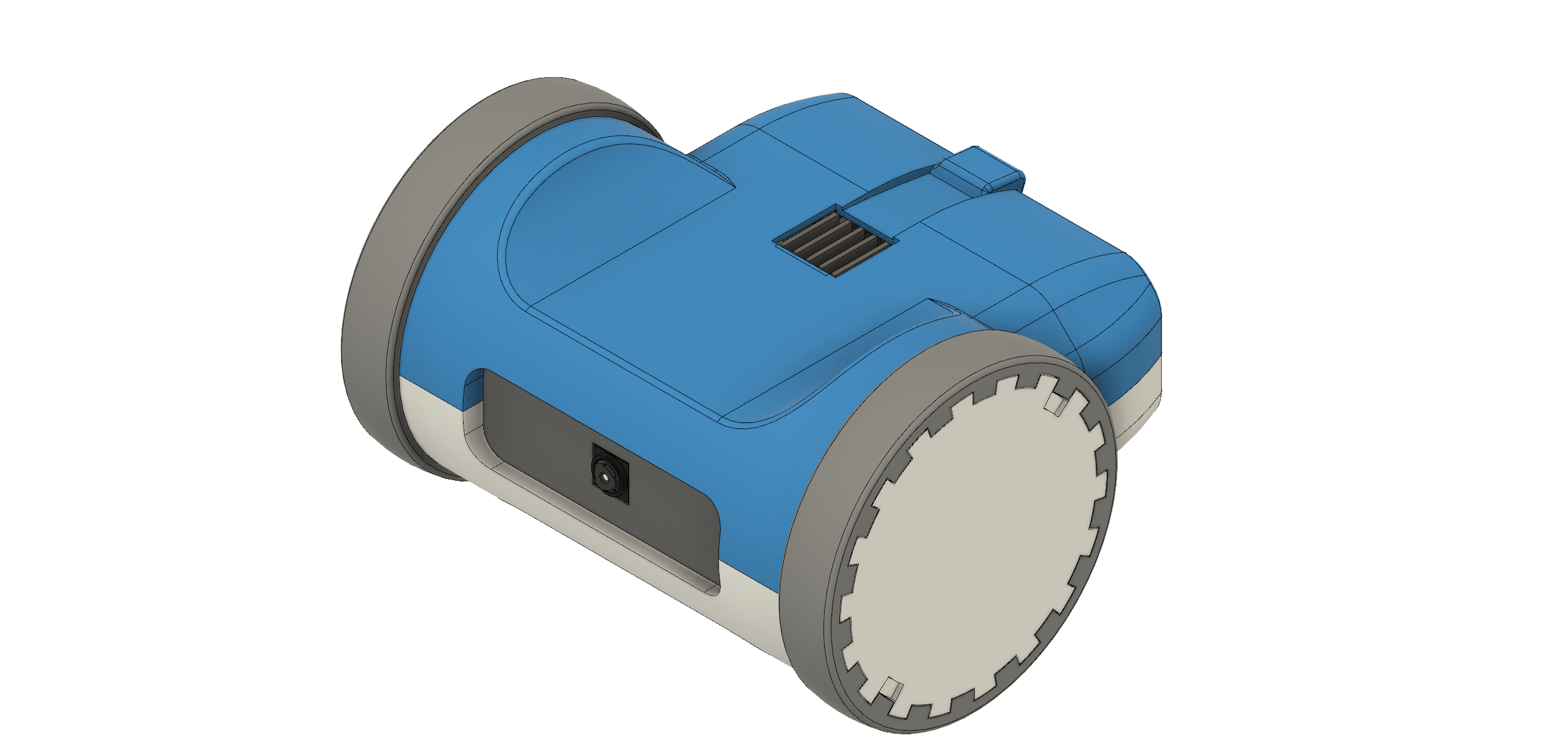

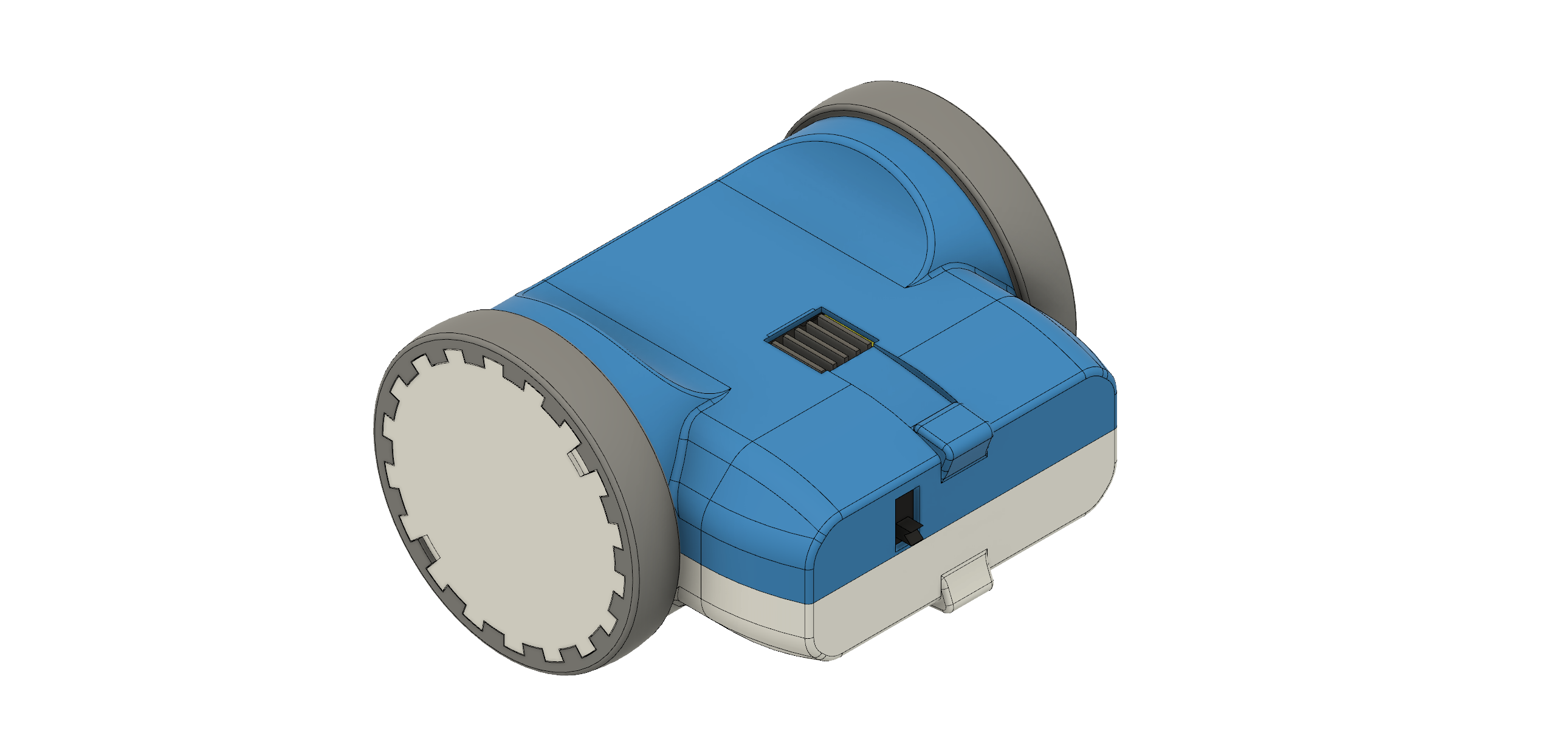

ZeroBot - Raspberry Pi Zero FPV Robot

Raspberry Pi Zero 3D Printed Video Streaming Robot

For the Zerobot robot there are different instructions and files spread over Hackaday, Github and Thingiverse which may lead to some confusion. This project log is meant as a short guide on how to get started with building the robot.

Where do I start?

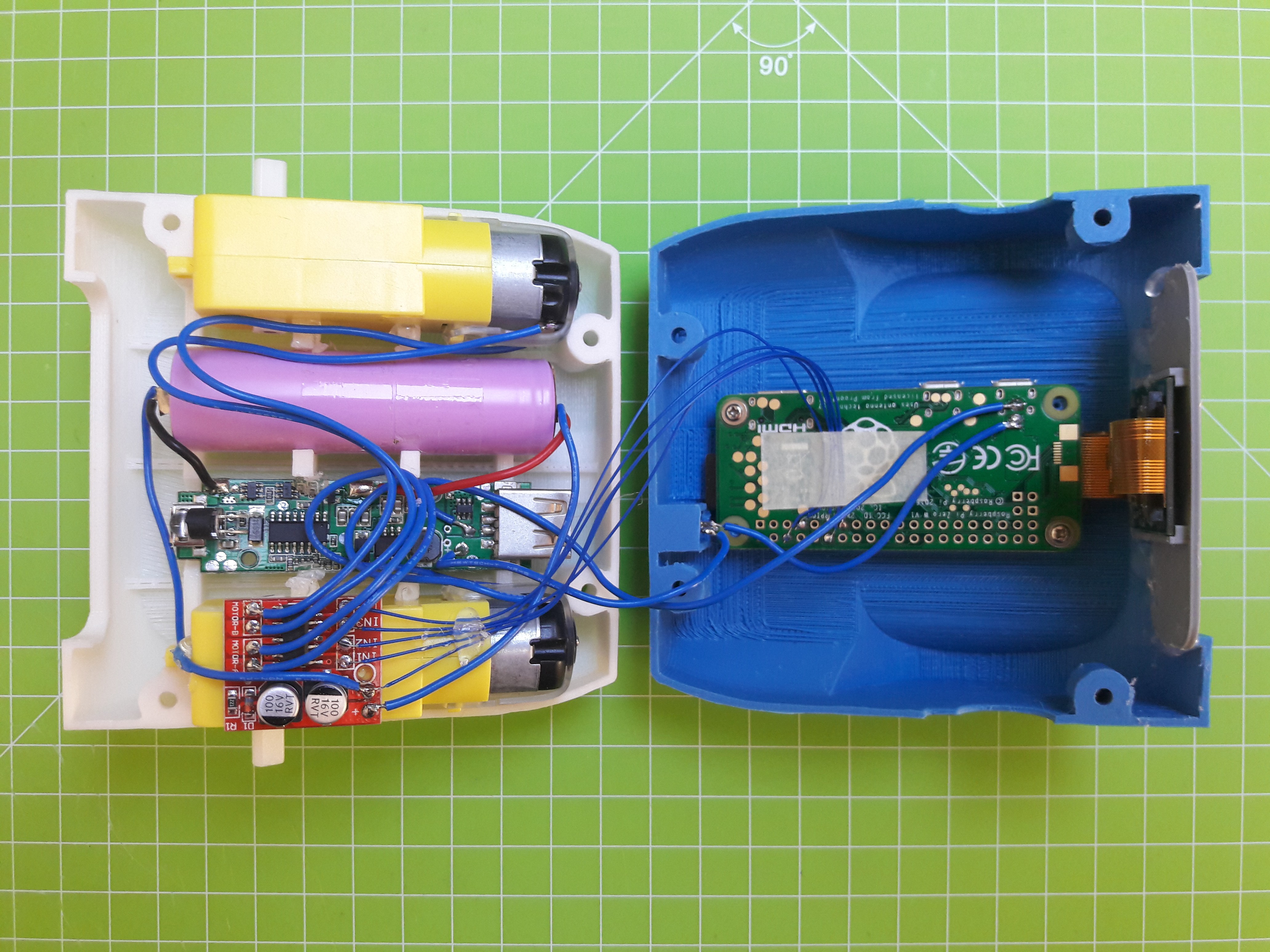

- Raspberry Pi Zero W
- 2x ICR18650 lithium cell 2600mAh
- Raspberry camera module
- Zero camera adapter cable
- Mini DC dual motor controller
- DC gear motors
- ADS1115 ADC board
- TP4056 USB charger
- MT3608 boost converter
- Raspberry CPU heatsink
- Micro SD card (8GB or more)
- 2x LED
- BC337 transistor (or any other NPN)
- 11.5 x 6mm switch
- 4x M3x10 screws and nuts

The robot spins/ doesn't drive right

The motors might be reversed. You can simply swap the two wires to fix this.

I can't connect to the Zerobot in my browser

The Raspberry Pi itself with the SD card image running on it is able to display the user interface in your browser. There is no additional hardware needed, so this can't be a hardware problem. Check if you are using the right IP and port and if you inserted the correct WiFi settings in the wpa_supplicant.conf file.

I see the user interface but no camera stream

Check if your camera is connected properly. Does it work on a regular Raspbian install?

Zerobot and Zerobot Pro - What's the difference?

The "pro" version is the second revision of the robot I built in 2017, which includes various hardware and software changes. The "pro" software and SD images are backward compatible, so they run on either hardware revision. I'd recommend building the latest version. New features like the voltage sensor and LEDs are of course optional.

Can I install the software myself?

If you don't want to use the provided SD image you can of course follow this guide to install the required software: https://hackaday.io/project/25092/instructions You should only do this if you are experienced with the Raspberry Pi. The most recent code is available on Github: https://github.com/CoretechR/ZeroBot

All new features: More battery power, a charging port, battery voltage sensing, headlights, camera mode, safe shutdown, new UI

The new software should work on all existing robots.

When I designed the ZeroBot last year, I wanted to have something that "just works". So after implementing the most basic features I put the parts on Thingiverse and wrote instructions here on Hackaday. Since then the robot has become quite popular on Thingiverse with 2800+ downloads and a few people already printed their own versions of it. Because I felt like there were some important features missing, I finally made a new version of the robot.

The ZeroBot Pro has some useful, additional features:

If you are interested in building the robot, you can head over here for the instructions: https://hackaday.io/project/25092/instructions

The 3D files are hosted on Thingiverse: https://www.thingiverse.com/thing:2800717

Download the SD card image: https://drive.google.com/file/d/163jyooQXnsuQmMcEBInR_YCLP5lNt7ZE/view?usp=sharing

After flashing the image to an 8GB SD card, open the file "wpa_supplicant.conf" with your PC and enter your WiFi settings.

After a few people ran into problems with the tutorial, I decided to create a less complicated solution. You can now download an SD card image for the robot, so there is no need for complicated installs and command line tinkering. The only thing left is getting the Pi into your network:

If you don't want the robot to be restricted to your home network, you can easily configure it to work as a wireless access point. This is described in the tutorial.

EDIT 29.7. Even easier setup - the stream ip is selected automatically now
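One way a page can pick the stream address automatically is to reuse the hostname it was loaded from. The snippet below is only a sketch of that idea, not the project's actual code: the function name `buildStreamUrl` is made up, port 9000 is taken from the comments further down, and the `/?action=stream` path assumes the usual mjpg-streamer HTTP endpoint.

```javascript
// Hypothetical helper: derive the camera stream URL from the host that served the page.
// Port 9000 and the "/?action=stream" path are assumptions (the usual mjpg-streamer endpoint).
function buildStreamUrl(hostname, port = 9000) {
  // Wrap bare IPv6 literals in brackets so the URL stays valid.
  const host = hostname.includes(":") && !hostname.startsWith("[") ? `[${hostname}]` : hostname;
  return `http://${host}:${port}/?action=stream`;
}

// In a browser this would typically be fed with window.location.hostname, e.g.:
//   imgElement.src = buildStreamUrl(window.location.hostname);
console.log(buildStreamUrl("192.168.1.42"));
```

Because the address comes from the page's own location, the same HTML works on a home network and in access point mode without editing an IP by hand.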

The goal for this project was to build a small robot which could be controlled wirelessly with a video feed being sent back to the user. Most of my previous projects involved Arduinos, but while they are quite capable and easy to program, simple microcontrollers have a lot of limitations when it comes to processing power. Especially when a camera is involved, there is no way around a Raspberry Pi. The Raspberry Pi Zero W is the ideal hardware for a project like this: it is cheap, small, has built-in WiFi and enough processing power and I/O ports.

Because I had barely ever worked with a Raspberry, I first had to find out how to program it and what software/language to use. Fortunately the Raspberry can be set up to work without ever needing to plug in a keyboard or monitor, using a VNC connection from a remote computer instead. For this, the files on the boot partition of the SD card need to be modified to allow SSH access and to connect to a WiFi network without further configuration.

The next step was to get a local website running. This was surprisingly easy using Apache, which creates and hosts a sample page after installing it.

To control the robot, data would have to be sent back from the user to the Raspberry. After some failed attempts with Python I decided to use Node.js, which features a socket.io library. With the library it is rather easy to create a web socket, where data can be sent to and from the Pi. In this case it would be two values for speed and direction going to the Raspberry and some basic telemetry being sent back to the user to monitor e.g. the CPU temperature.
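To make the data flow more concrete, here is a small sketch of the two directions: cleaning up an incoming control message and formatting the CPU temperature for telemetry. The message shape `{ speed, direction }` with values from -1 to 1 and all function names are assumptions for illustration, not the format used in app.js. The temperature part relies on the Pi exposing its CPU temperature in thousandths of a degree Celsius in /sys/class/thermal/thermal_zone0/temp.

```javascript
// Clamp a number into [min, max]; anything non-numeric falls back to 0 (stop).
function clamp(value, min, max) {
  const n = Number(value);
  if (!Number.isFinite(n)) return 0;
  return Math.min(max, Math.max(min, n));
}

// Hypothetical message shape: { speed, direction }, both normalised to -1..1.
function sanitizeControl(msg) {
  const m = msg || {};
  return { speed: clamp(m.speed, -1, 1), direction: clamp(m.direction, -1, 1) };
}

// Convert the raw sysfs reading (e.g. "48312\n") to degrees Celsius, one decimal.
function parseCpuTemp(raw) {
  return Math.round(parseInt(raw, 10) / 100) / 10;
}

console.log(sanitizeControl({ speed: 2, direction: "left" }));
console.log(parseCpuTemp("48312\n"));
```

Sanitizing on the Pi side means a malformed or out-of-range message from the browser results in a stop rather than an undefined motor command.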

For the user interface I wanted to have a screen with just the camera image in the center and an analog control stick at the side of it. While searching the web I found this great javascript example by Seb Lee-Delisle: http://seb.ly/2011/04/multi-touch-game-controller-in-javascripthtml5-for-ipad/ which even works for multitouch devices. I modified it to work with a mouse as well and integrated the socket communication.

I first thought about using an Arduino for communicating with the motor controller, but this would have ruined the simplicity of the project. In fact, there is a nice Node.js library for accessing the I/O pins: https://www.npmjs.com/package/pigpio. I soldered four pins to the PWM motor controller, and with the library the motors would already turn from the JavaScript input.
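As an illustration of what driving one motor through pigpio can look like, the sketch below converts a signed speed into two PWM duty cycles. It assumes a motor driver with two PWM inputs per motor (one per direction), which would match the four soldered pins but is not confirmed here; `motorDuty` and the pin numbers in the comments are placeholders. The only library fact used is that pigpio's `pwmWrite()` takes a duty cycle from 0 to 255.

```javascript
// Convert a signed speed (-1..1) into duty cycles for the two inputs of one
// H-bridge channel. pigpio's pwmWrite() expects a duty cycle from 0 to 255.
function motorDuty(speed) {
  const s = Number.isFinite(speed) ? Math.max(-1, Math.min(1, speed)) : 0;
  const duty = Math.round(Math.abs(s) * 255);
  return s >= 0 ? { forward: duty, reverse: 0 } : { forward: 0, reverse: duty };
}

// On the Pi, the result would be written to two GPIO pins, for example:
//   const { Gpio } = require("pigpio");
//   const inA = new Gpio(17, { mode: Gpio.OUTPUT }); // pin numbers are placeholders
//   const inB = new Gpio(18, { mode: Gpio.OUTPUT });
//   const d = motorDuty(0.5);
//   inA.pwmWrite(d.forward);
//   inB.pwmWrite(d.reverse);
console.log(motorDuty(0.5), motorDuty(-1));
```

Keeping the conversion separate from the GPIO calls makes it possible to try the logic on a PC before anything is wired up.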

After I finally got a camera adapter cable for the Pi Zero W, I started working on the stream. I used this tutorial to get the mjpg streamer running: https://www.youtube.com/watch?v=ix0ishA585o. The latency is surprisingly low at just 0.2-0.3s with a resolution of 640x480 pixels. The stream was then included in the existing HTML page.

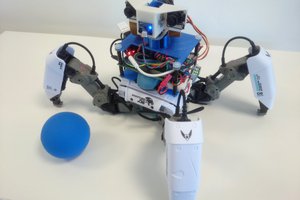

With most of the software work done, I decided to make a quick prototype using an Asuro robot. This is an ancient robot kit from a time before the Arduino existed. I hooked up the motors to the controller and secured the rest of the parts with painter's tape on the robot's chassis:

After the successful prototype I arranged the components in Fusion 360 to find a nice shape for the design. From my previous project (http://coretechrobotics.blogspot.com/2015/12/attiny-canbot.html) I knew that I would use a half-shell design again and make 3D printed parts.

The parts were printed in regular PLA on my Prusa i3 Hephestos. The wheels are designed to have tires made with flexible filament (in my case Ninjaflex) for better grip. For printing the shells, support material is necessary. Simplify3D worked well with this and made the supports easy to remove.

After printing the parts and doing some minor reworking, I assembled the robot. Most components are glued inside the housing. This may not be a professional approach, but I wanted to avoid screws and tight tolerances. Only the two shells are connected with four hex socket screws. The corresponding nuts are glued in on the opposing shell. This makes it easy to access the internals of the robot.

For...

DISCLAIMER: This is not a comprehensive step-by-step tutorial. Some previous experience with electronics / Raspberry Pi is required. I am not responsible for any damage done to your hardware.

I am also providing an easier alternative to this setup process using a SD card image: https://hackaday.io/project/25092/log/62102-easy-setup-using-sd-image

https://www.raspberrypi.org/documentation/installation/installing-images/

This tutorial is based on Raspbian Jessie 4/2017

Personally I used the Win32DiskImage for Windows to write the image to the SD card. You can also use this program for backing up the SD to a .img file.

IMPORTANT: Do not boot the Raspberry Pi yet!

Access the Raspberry via your Wifi network with VNC:

Put an empty file named "SSH" in the boot partition on the SD.

Create a new file "wpa_supplicant.conf" with the following content and move it to the boot partition as well:

ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev

update_config=1

network={

ssid="wifi name"

psk="wifi password"

}

This file is automatically moved to its place in the Raspberry's file system only during the first boot.

After booting, you have to find the Raspberry's IP address using the router's menu or a WiFi scanner app.

Use Putty or a similar program to connect to this address with your PC.

After logging in with the default details you can run

sudo raspi-config

In the interfacing options enable Camera and VNC

In the advanced options expand the file system and set the resolution to something like 1280x720p.

Now you can connect to the Raspberry's GUI via a VNC viewer: https://www.realvnc.com/download/viewer/

Use the same IP and login as for Putty and you should be good to go.

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install apache2 node.js npm

git clone https://github.com/CoretechR/ZeroBot Desktop/touchUI

cd Desktop/touchUI

sudo npm install express

sudo npm install socket.io

sudo npm install pi-gpio

sudo npm install pigpio

Run the app.js script using:

cd Desktop/touchUI

sudo node app.js

You can make the node.js script start on boot by adding these lines to /etc/rc.local before "exit 0":

cd /home/pi/Desktop/touchUI

sudo node app.js&

cd

The HTML file can easily be edited while the node script is running, because it is sent out when a host (re)connects.

I am able to use the 3B with a rover chassis with it so far. Check Pishop.us They currently have a zero W in stock and their stock seems to be pretty decent these days if you keep an eye on it. I was also able to get a pi4 for retail price on sparkfun recently. They currently have a zero W in stock as well (the zero 2 is hard to find anywhere) Shipping is free if you order over 100$ of things

Hey everyone. I have run into an issue. I'm not sure if the node source 17 still works. I keep receiving "sudo: npm: command not found".

Hello, very nice project. I have the following problem: when switching on, the motor on GPIO 4 spins until the Pi has booted and connected to WiFi. After that everything works.

Problem solved. The GPIO is high at startup. I changed it to GPIO 23.

I've got a problem. Everything worked just fine: I could connect via phone and control the robot, take pictures, control the lights and read the temperature and voltage. Now everything still works except that the stream doesn't show. I can still take pictures, and they show an image of what I should be seeing. I tried another Pi but it didn't work, and I reinstalled everything and tried the provided image, but that didn't help either.

(fixed) Is the provided SD card image still working? (It is, just double check that the wpa_supplicant file information saved). I can't seem to ping my PI or find it on my browser.

A few notes, some npm packages are missing to run the app, you need to run:

sudo npm install coffee-script

sudo npm install node-ads1x15

Now I have had problems with the ads1x15 module, so I had to disable it (I'll come back if I fix it), but to run the app.js just change this line of code:

if(!adc.busy){ ---> if(false){

basically we prevent the ads1115 to be read since it was causing problems for me.

The camera stream had also some problems:

You need to change the path in the CMakeLists.txt for the raspicam plugin. Basically these changes: https://github.com/jacksonliam/mjpg-streamer/pull/339/commits/b90dbb89987c6ebe0725b9589e1932e8f4bb6aa4

And you need to enable legacy camera:

https://github.com/jacksonliam/mjpg-streamer/issues/176#issuecomment-1024719781

https://www.raspberrypi.com/documentation/accessories/camera.html#re-enabling-the-legacy-stack

Good luck!

Figured out the reading voltage problem, the I2C was not enabled in the raspberry, use this to enable:

https://pi3g.com/2021/05/20/enabling-and-checking-i2c-on-the-raspberry-pi-using-the-command-line-for-your-own-scripts/
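For anyone checking their voltage readings by hand, the conversion from a raw ADS1115 value to volts is simple arithmetic. The sketch below is an illustration only: `adsRawToVolts` is a made-up name, the ±4.096 V full-scale range is just one of the chip's selectable gain settings and may not be what app.js configures, and `dividerRatio` stands in for whatever voltage divider (if any) sits in front of the ADC input.

```javascript
// The ADS1115 returns a signed 16-bit value; 32768 counts span the selected
// full-scale voltage. fullScaleVolts and dividerRatio are assumptions here:
// adjust them to the gain setting and resistor divider actually in use.
function adsRawToVolts(raw, fullScaleVolts = 4.096, dividerRatio = 1) {
  return (raw / 32768) * fullScaleVolts * dividerRatio;
}

console.log(adsRawToVolts(16384).toFixed(3)); // prints "2.048"
```

If the number printed by the robot is far off from a multimeter reading, the gain setting and divider ratio are the first two values to compare.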

Just a few notes on how to make the raspberry as wifi AP so you don't need to connect the robot to any wifi, you can connect to the robot's wifi and run it from your phone the same way.

Use these instructions, but you can skip steps 6, 7 and 8 (no need for packet forwarding):

https://thepi.io/how-to-use-your-raspberry-pi-as-a-wireless-access-point/

For some updates on the hostapd you will probably have to run these commands:

sudo systemctl unmask hostapd

sudo systemctl enable hostapd

Similar to these instructions: https://howchoo.com/pi/setup-a-raspberry-pi-wireless-access-point

Hello Max, I only have access to the new Raspberry Pi Zero W 2 and I found that the default image doesn't boot on it, so I proceeded with your instructions to set up the project from GitHub, but I get an "access denied" error when I try to connect to the Zerobot via the web. I also seem to be having an issue with the Pi camera not being recognized. I'm guessing that's not related, but I thought I would mention it as well in case anybody has any suggestions.

Update on Camera issue:

Looks like there is a known issue with Pi Zero W2 and they are working on a fix see thread here https://forums.raspberrypi.com/viewtopic.php?t=323462

Hi Gary, my hexapod project is using similar software and hardware as the ZeroBot. I recently upgraded it to a Pi Zero 2 using a Raspbian Buster image. There seem to be many changes in Bullseye that break old functionality. There is a new legacy firmware because of this: https://www.raspberrypi.com/news/new-old-functionality-with-raspberry-pi-os-legacy/

Maybe you will have more luck with this instead of waiting for the bullseye fix.

Thanks for the quick reply and I will give the Buster image a try and update with the progress. I currently have a working Zerobot, tracked version, with your original image and an older Pi Zero W but wanted to build your original wheeled version and didn't want to wait until the older Pi Zero is in stock again.

Not sure if it's completely working, but when I go the IP address of the Pi on port 3000 the camera is working and the webpage looks correct, but the shutdown button doesn't do anything, so I think I have some more work to do. I do not have the motors hooked up yet, but I plan to do that tomorrow and I will provide an update.

This is what worked for me on the Raspberry Pi Zero W 2 using the Raspberry Pi OS (Buster) image (it doesn't work at all with Bullseye):

sudo apt-get update

sudo apt-get upgrade

sudo apt install apache2 -y

curl -sL https://deb.nodesource.com/setup_17.x | sudo -E bash -

sudo apt install -y nodejs

git clone https://github.com/CoretechR/ZeroBot Desktop/touchUI

cd Desktop/touchUI

sudo npm install express

sudo npm install socket.io

sudo npm install pi-gpio

sudo npm install pigpio

I also had to remove the lines that require the ADS1115 module in the "app.js" file, because I could not get that module to load on the Pi Zero W 2 for some reason.

I then followed the rest of your instructions to the letter for the rest of the install.

It's a start, and I think I should be able to figure the rest out and provide complete instructions on how I got it working on the Pi Zero W 2 shortly.

Thanks again for your help on this Max!

Hey, check my comments, I figured out the solution for most of the problems.

Although I have read that for a new raspberry pi 64 bits, there might be extra problems with the camera stream:

https://github.com/jacksonliam/mjpg-streamer/issues/296

hello Max. i watched your project, and it was very impressive. i want to make it but the URL of motor driver, power bank and camera is unavailable. i have no idea that what should i use. can you help me?

hi Max, thank you for this great project! Do I need protected or unprotected 18650 batteries? I'd prefer protected by of course but I do not know if the extra PCB would somehow interfere with the other parts...

I have only used unprotected cells when I made the robot. If I did the project today I would go for protected batteries as well, it's just safer. There should be just enough room, depending on where the PCB is mounted.

Hey Max - thanks for creating Zerobot. It looks like a fantastic project. I've just bought the components. Question - did you dremel / snap off the axle from the yellow motor on one side? It looks like it gets in the way of the battery otherwise.

Sorry for the late reply, you probably solved the problem yourself by now.

Anyway, yes, the inner axle has to be cut off. It's made from relatively soft plastic so it should be easy.

Thanks for getting back. Yes, I got it working - it's been a fun project. The controlling method is great, especially since it works from a phone or computer. I've ordered a fisheye lens in order to make driving easier. I'm nervous about the hot glue not being strong enough around the USB port, so I just always leave a little USB cable plugged in. Do you just use hot glue for the USB port? One issue I've had is that if the robot leaves WiFi range it just keeps driving on its original bearing. I saw another project where the creator had a "health check" which pings the controller every 2 seconds and stops the motors if the health check fails. Anyways, great work. I'd like to try out your mini version using ESP32, but it would be nice to adapt the enclosure for an ESP32-Cam, which seems much more available.

Hot glue might not be ideal, but it worked well for me so far.

A health check is a great idea to make the connection more reliable. And it should not be too difficult to implement. I am working on a similar project right now where this will come in handy. Thanks!
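A health check like this can be sketched as a small watchdog: every incoming control message "feeds" it, and a periodic check stops the motors once messages have been silent for too long. Everything below (the name `createWatchdog`, the 2 second timeout, the `stop` callback) is a hypothetical illustration and not code from the ZeroBot repository.

```javascript
// Minimal watchdog sketch: call feed() whenever a control message arrives and
// check() periodically; stop() fires once when messages have been silent too long.
// The clock is injectable (now) so the logic can be tried without real waiting.
function createWatchdog(timeoutMs, stop, now = Date.now) {
  let lastSeen = now();
  let tripped = false;
  return {
    feed() {
      lastSeen = now();
      tripped = false;
    },
    check() {
      if (!tripped && now() - lastSeen > timeoutMs) {
        tripped = true;
        stop(); // e.g. write a duty cycle of 0 to all motor pins
      }
      return tripped;
    },
  };
}

// Possible wiring on the Pi (names are placeholders):
//   const watchdog = createWatchdog(2000, stopMotors);
//   socket.on("pos", () => watchdog.feed());
//   setInterval(() => watchdog.check(), 250);
```

The `tripped` flag keeps `stop()` from being called on every tick while the connection is down, and the next message after a reconnect re-arms the watchdog.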

Hi Max, it's been great following and building this project. Unfortunately I've stumbled at the last post and found that the battery does not have enough juice to power the Pi/camera/ADC. Perhaps this is because I chose to power the Pi through the micro USB port, as I wasn't willing to take a risk without any safety features; I can't afford to lose a board. Will this affect the current/power drawn from the batteries for the camera and ADC? Thanks.

Of course you can power the Pi through its USB port as well. I soldered directly to the board because that was more convenient. I think the 5V solder pad on the bottom is directly connected to the USB port.

After burning a few Pi boards I have decided to go a different way and power the Pi with a separate battery. This way I have more control over the power that goes each way.

Now I am trying to modify the code so I can change the two wheel steering method you use and add a servo or another dc motor for using front wheel steering. Do you have any guide to changing your code, before I go into a trial and error learning?

Thank you very much for all the information you gave here.

There is a function "tankDrive" in the Touch.html that you might want to start with. It maps the user inputs to two PWM outputs. You could try hooking up a servo to one of the PWM pins to see if that works already. If not, you need to change the app.js file.
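For readers who have not seen this kind of mapping before, a generic joystick-to-tank-drive mix looks roughly like the sketch below. It is not the actual `tankDrive` function from Touch.html; the name `tankMix`, the -1..1 input range and the normalisation step are assumptions chosen for illustration.

```javascript
// Generic arcade-to-tank mixing (not the project's own tankDrive function).
// x: -1 (full left) .. 1 (full right), y: -1 (full reverse) .. 1 (full forward).
function tankMix(x, y) {
  const left = y + x;
  const right = y - x;
  // Scale both sides down together if either exceeds the -1..1 range,
  // so the ratio between the wheels (and thus the turn radius) is preserved.
  const scale = Math.max(1, Math.abs(left), Math.abs(right));
  return { left: left / scale, right: right / scale };
}

console.log(tankMix(0, 1)); // straight ahead: both wheels at full speed
console.log(tankMix(1, 0)); // turn on the spot: wheels run in opposite directions
```

For front wheel steering, the same inputs would instead be split: `y` alone drives the motor and `x` is mapped to a servo angle, so a function like this is the natural place to start changing things.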

Hi, I'm wondering if you know the cost of 3D printing each robot body? Or, at least the weight of the material used? Thanks.

I'm having trouble with flashing the images. They do install, but will not boot. Installing a Raspbian image works fine: it boots and connects. Then when trying to do the manual install, packages won't install or errors show up. Clearly, something doesn't match! Does anyone have this working on a 3B+? If so, can you share a working image I can reconfigure for my network? I hate building it and not being able to use it! Thanks everyone!

Did you ever find a solution? I was able to flash to an SD card and boot up on a 3B+, but then I fried the 3B+ (unrelated error) and replaced it with another. I can't get the other to boot up to the image. However, I can flash the RP Desktop to a card and have it boot with no problem.

Should this work on a full sized Raspberry Pi 3 B+? I don't have a spare Zero, and the full sized would be better for the camera feed. I tried but it just gets stuck on the colourful boot screen.

I also tried the manual install but had a whole bunch of errors.

Yes, I am sure it should work. I've developed the software on a Pi 3 before moving it to the Zero. Maybe there was a problem with flashing the image file? Did you follow the latest tutorial?

Hello. First of all I would like to thank you for sharing this. It is a great project. I am quite new to this world but with your instructions I have managed to get all this done in no time.

I have managed to get most of it working with the exception of the motors. Once the robot starts I can hear the motors trying to move but they don't seem to have enough power. I have 5.5v coming out of the booster but only 0.12v get to the motors. Does anyone know where could this issue come from?

Thank you.

It seems that the voltage on your motors is too low. Maybe it's a faulty motor driver. Are you using the same components and have verified your wiring with the schematic?

Hello Max. I went through the wiring and noticed the - "minus" on the motor driver came loose. I soldered that back on the - "minus" of the battery and it all worked well. For a short while at least. The Pi turned itself off and I thought the battery might have run dry. So I charged them back up and when I turned it back on one of the motors started for a few seconds while the Pi was booting then it all went off. Now I have another burned Pi. Could some have discharged while turning on and burned the Pi? I'll order another one and hopefully I won't break this one too (second one so far).

Hey max would you mind updating the Components list with your latest recommendations for components? I ordered a PiZero but find myself wandering this long forum to order parts that may not be right to start this project. Appreciate all the work you've done.

Did not need to adjust the ports or IP address. although I did change it for the AP. I want to give it another test using the same IP... seeing as how it will be the only thing on the AP it should not conflict with anything. I did get it working though. Apparently there is one last network upgrade that was done at the very end that changed something. What was being changed... I am not sure. I set it up without the upgrade and it worked. Now I just need to add a button to your interface to enter WiFi credentials. This will allow the user to access the bot from their phone and play , then enter WiFi credentials and reboot and be on the network. :) its been a long day and my eyes hurt from reading to find my issue, but for the most part it works!

Other than adding new WiFi SSIDs and clearing the wpa_supplicant file to be able to freely swap between WiFi and AP, the next part that I am not sure how to do is getting the local IP that is assigned when the robot is added to a new network, without having access to the router.

I hope that made sense

Would you like a working sd card image to play with once i finish it?

Great work! I have not been actively working on this project for a while but I would definitely be interested in an SD image.

I took your advice and checked the port and where we are getting the IP address. The only thing I can find in the touch.html file is where it's getting the stream from. If the stream was broken, the UI would still appear because it's coming directly from the HTML page, right?

I am going to load another SD card and do some more testing to see if I can figure out what I did that made it stop working properly. I'll get back to you ASAP. I really hope to get this working, because having the ability to reassign the WiFi SSID and password from your phone, or just opt to connect to the phone directly, would be absolutely awesome.

I could have sworn I saw a place that explained what to do to swap the bot to ADHOC to directly connect to a phone. Am I not seeing it?

It's been a while since I tried that but this looks like a good place to start:

https://howtoraspberrypi.com/create-a-wi-fi-hotspot-in-less-than-10-minutes-with-pi-raspberry/

You might have to adjust the ip address inside the touchUI html file too.

So I had to run the update and upgrade. Once done I ran the script from https://github.com/idev1/rpihotspot . The hotspot seems to work just like its supposed to. When I type in the ip address I get the "Almost there! redirecting to port 3000" message, then when the redirect happens, I get site cannot be reached the ip address refused connection.

I also tried to access the app from the pi itself using the local host command and I get the same thing. I am thinking the update/upgrade changed something but I am not sure. I am not really sure on where to go from here to find out what is going on. If you have the time, mind helping me figure it out? Once done I would be happy to pass along a completed updated image, so others can use the hotspot function I am wanting.

I am able to connect to the pi via vnc and ssh just like before I added the hotspot ap script.

Have you tried other ports/ip addresses? The touchUI.html file might have the wrong IP for the stream. Port 9000 is usually the stream that you can try to access directly in the browser.

Has anyone tried an alternative to the Raspberry Pi Zero W?

You can't get them.