Introduction

Paralysis is the loss of control over muscle function in one or more muscle groups. It can be caused by stroke, ALS, multiple sclerosis, and many other diseases. Locked-in Syndrome (LIS) is a form of paralysis in which patients have lost control of nearly all voluntary muscles. They cannot control any part of their body except eye movement and blinking. As a result, they are unable to talk, text, or communicate in general. Even though people with LIS are cognitively aware, their thoughts and ideas are locked inside of them. They depend on eye blinks to communicate and rely on nurses and caretakers to interpret and decode their blinking. Whenever no one is available to read their blinks, LIS patients have no means of self-expression.

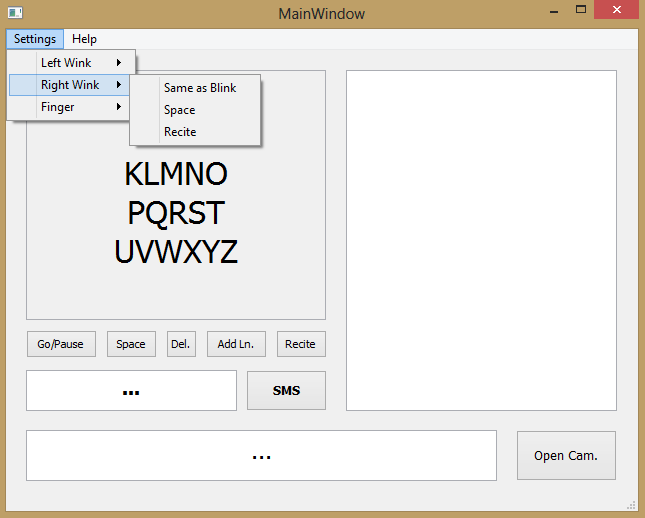

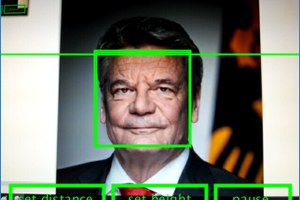

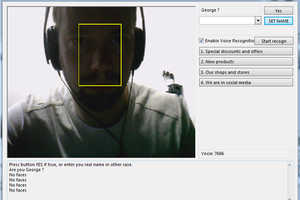

BlinkToText offers a form of independence to paralyzed people. The software platform converts eye blinks to text. Every feature of the software can be controlled by eye movement, so paralyzed people can operate it independently. Using the software, patients can record messages, recite those messages aloud, and send them to others. The software runs on any low-end computer, from a Raspberry Pi to an IBM ThinkPad. It uses computer vision and Haar cascades to detect eye blinks and convert them into text, and it uses language modelling to predict the next words that the user might blink. The software can also be easily customized for each patient. BlinkToText is free, open source software distributed under the MIT License, a permissive free software license.
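The next-word prediction mentioned above can be illustrated with a very small bigram model. This is only a sketch of the general technique, not BlinkToText's actual language model: the function names `train_bigrams` and `suggest_next` and the sample phrases are hypothetical and chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for each word, which words follow it in the training sentences."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def suggest_next(following, previous_word, k=3):
    """Return up to k of the most frequent followers of previous_word."""
    counts = following.get(previous_word.lower())
    if not counts:
        return []  # word never seen in training, so nothing to suggest
    return [word for word, _ in counts.most_common(k)]


# Hypothetical phrases a patient might use often; not taken from BlinkToText.
corpus = [
    "i am thirsty",
    "i am tired",
    "i am thirsty again",
    "please call the nurse",
    "please call my family",
]
model = train_bigrams(corpus)
print(suggest_next(model, "am"))  # prints ['thirsty', 'tired']
```

Offering a few likely next words matters more here than in ordinary typing, because every word a patient can select instead of spelling out saves a long sequence of blinks.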

Key Objectives

Allow paralysis victims to communicate independently.

Many paralysis victims already use eye blinks as a form of communication. It is common for nurses and caretakers to read a patient’s eye blinks and decode the pattern. The ALS Association even offers a communication guide that relies on eye blinks. BlinkToText automates this task: the software reads a person’s eye blinks and converts them into text. A key feature is that it can be started, paused, and operated entirely with eye blinks. This allows patients to record their thoughts with complete independence, with no nurse or caretaker required to help them express themselves. Not only does this reduce the financial burden on paralysis patients, but this form of independence can be a boost to morale as well.

Be accessible to people with financial constraints.

Many companies are developing technologies that are controlled by eye movement. These technologies rely on expensive hardware to track a user’s eyes. While these devices can absolutely help LIS victims, they are only available to people who can afford them. BlinkToText focuses on a different demographic that is often ignored. Twenty-eight percent of U.S. households with a person who is paralyzed make less than $15,000 per year[1]. BlinkToText is free and open source. The software runs on a wide variety of low-end computers, and the only required peripheral is a basic webcam. This makes the software accessible not just to paralyzed people, but to paralyzed people of almost all financial classes.

Open Source and Licensing

BlinkToText is free, open source software distributed under the MIT License, a permissive free software license. All of the code is available on GitHub. The majority of the software is written in Python. Specifically, the image processing is done with Python’s OpenCV library and the GUI was made with PyQt. Python is itself open source, and all .exe and other distributables of the software can be decompiled. All of the Haar cascades were obtained from OpenCV’s repository of free and open source Haar cascades.

https://github.com/mso797/BlinkToText
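To make the OpenCV and Haar cascade approach more concrete, here is a minimal sketch of blink detection. It is not the code from the repository. It assumes the stock `haarcascade_frontalface_default.xml` and `haarcascade_eye.xml` files that ship with the `opencv-python` package, and it assumes that a detected face with no detected eye means the eyes are closed. The function names and the frame thresholds are hypothetical.

```python
def load_cascades():
    """Load OpenCV's stock face and eye Haar cascades (needs opencv-python)."""
    import cv2  # imported lazily so count_blinks() works without OpenCV

    face = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml")
    eye = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_eye.xml")
    return face, eye


def eyes_visible(gray, face_cascade, eye_cascade):
    """Return True/False for open/closed eyes, or None if no face is found."""
    faces = face_cascade.detectMultiScale(gray, scaleFactor=1.3, minNeighbors=5)
    if len(faces) == 0:
        return None
    x, y, w, h = faces[0]  # assumption: only the first detected face matters
    upper_face = gray[y:y + h // 2, x:x + w]  # eyes sit in the upper half
    eyes = eye_cascade.detectMultiScale(
        upper_face, scaleFactor=1.1, minNeighbors=5)
    return len(eyes) > 0


def count_blinks(states, min_frames=2, max_frames=15):
    """Count runs of closed-eye frames (False) that end with open eyes (True).

    Runs shorter than min_frames are treated as detector noise, and runs
    longer than max_frames as resting eyes. Both limits are assumed values.
    A None state (no face found) discards the current run.
    """
    blinks = 0
    closed_run = 0
    for state in states:
        if state is False:
            closed_run += 1
            continue
        if state is True and min_frames <= closed_run <= max_frames:
            blinks += 1
        closed_run = 0
    return blinks


def watch_webcam(num_frames=300, camera_index=0):
    """Read frames from a webcam and return the number of blinks seen."""
    import cv2

    face_cascade, eye_cascade = load_cascades()
    capture = cv2.VideoCapture(camera_index)
    states = []
    try:
        for _ in range(num_frames):
            ok, frame = capture.read()
            if not ok:
                break  # camera unavailable or stream ended
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            states.append(eyes_visible(gray, face_cascade, eye_cascade))
    finally:
        capture.release()
    return count_blinks(states)
```

Calling `watch_webcam()` with a basic webcam attached returns the blink count for the captured frames. Keeping `count_blinks` separate from the camera code means the timing thresholds can be tuned per patient on recorded data, without a webcam.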

Platform Features

BlinkToText offers a range of tools to help paralyzed people communicate. Below is an explanation of the...

Swaleh Owais


BlinkToText is free software that allows you to type in any text, with your eyes only. It doesn't require any special eyeglasses or hardware. It works on any computer, laptop, tablet, or smartphone that can run the Google Chrome browser.