Here's a video of the hexapod with its newly working eyes.

I used pre-existing "objects" (the Propeller version of libraries) for the servo pulse generation, which is handled by one cog. I also used the super awesome "F32" floating point object. This object runs in its own cog (one of the eight processors on the Propeller) and acts as a floating point coprocessor. I ended up using two instances of the F32 code (each in its own cog) in order to keep the IK calculations running fast enough to generate the 18 servo angles each 20ms control cycle.

I have a smaller hexapod which I had previously written code for. I used this same code on the larger hexapod but found the large servos don't move the same as the small servos. Apparently the different servos have a different angular range given identical pulse signals. Obviously the code needs some tuning.
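One way to handle servos that respond differently to the same pulse is to keep a small calibration record per servo model. The sketch below is in Python for illustration only (the robot itself runs Propeller code), and every number and name in it (`ServoCalibration`, `angle_to_pulse_us`, the microsecond values) is an assumption, not a measurement from either hexapod.

```python
from dataclasses import dataclass


@dataclass
class ServoCalibration:
    """Per-servo pulse calibration. All values here are hypothetical."""
    center_us: float       # pulse width that puts the horn at 0 degrees
    us_per_degree: float   # slope; this is what differs between servo models
    min_us: float = 500.0  # safety limits so a bad angle can't stall the servo
    max_us: float = 2500.0


def angle_to_pulse_us(angle_deg: float, cal: ServoCalibration) -> float:
    """Convert a joint angle in degrees to a pulse width in microseconds."""
    pulse = cal.center_us + angle_deg * cal.us_per_degree
    # Clamp to the calibrated limits.
    return max(cal.min_us, min(cal.max_us, pulse))


# Two made-up servo models: the same angle needs a different pulse on each.
small_servo = ServoCalibration(center_us=1500.0, us_per_degree=10.0)
large_servo = ServoCalibration(center_us=1500.0, us_per_degree=8.0)

if __name__ == "__main__":
    for name, cal in (("small", small_servo), ("large", large_servo)):
        print(name, angle_to_pulse_us(30.0, cal))
```

With a table like this, the IK code can keep working in angles and only the last conversion step needs tuning per servo.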

Using the IK formulas posted by a member of Let's Make Robots, I wrote my own inverse kinematics algorithms. It turned out generating the IK angles for the various servos was relatively straightforward. What gave me trouble was calculating the location each foot should occupy based on the input from the wireless Wii Nunchuck. This is why I needed two instances of the floating point coprocessor: one calculates the angles of the servos while the other calculates the desired location of each foot.
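For readers who haven't seen leg IK before, here is a minimal sketch of a common three-joint (coxa, femur, tibia) formulation. It is written in Python for readability and is not the code running on the Propeller, nor necessarily the same formulas from Let's Make Robots. The link lengths, the function names `leg_ik` and `leg_fk`, and the coordinate convention (origin at the coxa joint, z negative below the body) are all assumptions made for the example.

```python
import math

# Hypothetical link lengths in millimetres; not measured from the robot.
COXA, FEMUR, TIBIA = 30.0, 60.0, 90.0


def leg_ik(x, y, z):
    """Return (coxa, femur, knee) angles in radians for a foot at (x, y, z).

    femur is measured up from horizontal; knee is the interior angle
    between the femur and the tibia.
    """
    coxa = math.atan2(y, x)
    r = math.hypot(x, y) - COXA   # horizontal reach past the coxa link
    d = math.hypot(r, z)          # straight-line femur-joint-to-foot distance
    if d == 0 or d > FEMUR + TIBIA or d < abs(FEMUR - TIBIA):
        raise ValueError("foot target out of reach")
    # Law of cosines on the femur/tibia/d triangle.
    cos_alpha = (FEMUR**2 + d**2 - TIBIA**2) / (2 * FEMUR * d)
    cos_knee = (FEMUR**2 + TIBIA**2 - d**2) / (2 * FEMUR * TIBIA)
    alpha = math.acos(max(-1.0, min(1.0, cos_alpha)))
    knee = math.acos(max(-1.0, min(1.0, cos_knee)))
    femur = math.atan2(z, r) + alpha
    return coxa, femur, knee


def leg_fk(coxa, femur, knee):
    """Forward kinematics, used here only to check the IK result."""
    tibia_dir = femur - (math.pi - knee)
    r = COXA + FEMUR * math.cos(femur) + TIBIA * math.cos(tibia_dir)
    z = FEMUR * math.sin(femur) + TIBIA * math.sin(tibia_dir)
    return r * math.cos(coxa), r * math.sin(coxa), z


if __name__ == "__main__":
    angles = leg_ik(80.0, 40.0, -70.0)
    print([round(math.degrees(a), 1) for a in angles])
    print(leg_fk(*angles))  # should land back on the target
```

Running the angles back through the forward kinematics is a cheap way to catch sign errors before any servo moves.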

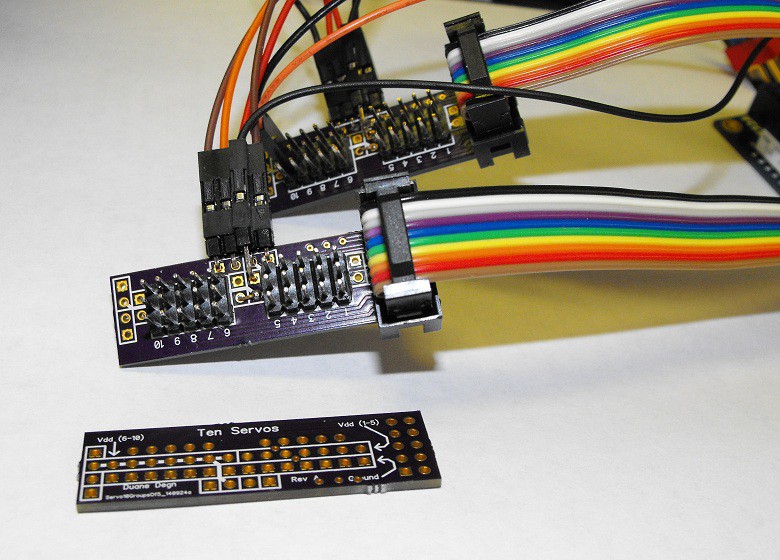

The only processor used on this robot is the Parallax Propeller. The extra PCBs used with the servos are passive and are used as a way to conveniently add power to the servos. The Propeller microcontroller is directly controlling all 22 (18 + 4) servos.

The present code uses seven of the Propeller's eight cogs (processors). All but 588 bytes of the 32K RAM have been used. There are several ways I could reclaim some of this memory so I believe I could still add some obstacle avoiding algorithms to the program.

I think this is a great project to show what the Propeller can do but I think I'm getting close to maxing out the microcontroller's resources.

I personally really enjoy this sort of programming (inverse kinematics) but I will confess to feeling like my brain was about to explode on more than one occasion.

This has been an immensely fun project so far. I have had other commitments which have lately prevented me from completing the programming this robot deserves. I hope to make time to complete the programming soon.

Update of sorts (30 January 2015): This project has been screaming for attention since I uploaded it to hackaday.io. I thought I'd add a checklist to this section of the project.

1. Animate eyes (a bunch of tasks involving the eyes).

1a. Code to allow arrays to display the eye images and animations. Done 15.01.29 (year.month.day)

1b. Code to drive eye servos aligned with walk direction. Done 15.01.31

1c. Code to add random behavior to eye movement (servos).

1d. Code to add random behavior to eye animations. Done 15.01.31

1e. Code to control eye expressions from Nunchuck. Progress 15.01.31

2. Loctite screws.

2a. The screws holding the servo horns to the servos keep coming loose. They need Loctite.

3. Find place for wireless Wii Nunchuck receiver.

3a. The Nunchuck receiver needs a home. The receiver needs to be accessible since the I2C signals can interfere with loading code to the Propeller's EEPROM (which shares the I2C bus).

4. Install switch securely.

4a. The power switch presently dangles from the back of the hexapod. I'd like to find a good place to mount the switch. The switch should be easy to access so the hexapod can be powered off quickly.

5. Improve general behavior.

5a. Tame the bucking bronco gait. (Some progress has been made on this as of 15.01.29.)

5b. Improve the way the robot waits for commands. Presently the robot will sit with legs raised in the air until a command to continue moving is given.

5c. Code transitions to and from an "at ease" state.

5d. Code transition to and from a low power state. Have the robot pull its legs in and rest the majority of the robot's weight on the ground when no command has been received (and when not in autonomous mode). Make the movements between this rest state and a walking gait smooth.

6. Code tilt movements.

6a. Presently the inverse kinematics algorithm assumes the robot is on level ground and the robot remains level through its gaits. Adding pitch and roll to the control algorithm will improve the robot's maneuverability.

7. Add accelerometer sensor.

7a. An accelerometer should allow the robot to detect when a foot contacts the ground prematurely. The early contact will cause the robot to start to tilt. The code should be able to sense this tilt and compensate for the obstacle.

7b. It would be nice if the robot would detect when it is being lifted and gently move its legs to an easy to handle position.

8. Add other sensors.

8a. It would be nice if the robot's "eyes" could actually see. I'd like the robot to be able to detect the location of humans in a room and respond appropriately to them.

8b. Basic obstacle detection would also be nice.
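As a rough idea of what item 6a could involve, the sketch below re-expresses a level-ground foot target in the frame of a tilted body, so the existing leg IK could be reused unchanged. This is Python for illustration, not code from the robot. The function name `tilt_foot_target`, the axis convention (roll about x, pitch about y, right-handed, applied about the body origin) and the sample numbers are all assumptions.

```python
import math


def tilt_foot_target(foot, roll, pitch):
    """Return the foot position as seen from a body tilted by roll and pitch.

    foot is (x, y, z) in the level body frame; angles are in radians.
    The body orientation is taken as Ry(pitch) * Rx(roll), so the foot
    is transformed by the inverse: Ry(-pitch) first, then Rx(-roll).
    """
    x, y, z = foot
    # Undo the pitch (rotation about the y axis).
    cp, sp = math.cos(pitch), math.sin(pitch)
    x, z = x * cp - z * sp, x * sp + z * cp
    # Undo the roll (rotation about the x axis).
    cr, sr = math.cos(roll), math.sin(roll)
    y, z = y * cr + z * sr, -y * sr + z * cr
    return x, y, z


if __name__ == "__main__":
    # Hypothetical foot position, in millimetres from the body centre.
    level = (120.0, 80.0, -70.0)
    print(tilt_foot_target(level, math.radians(5), math.radians(-10)))
```

Each of the six foot targets would go through this transform before the per-leg IK, which keeps the feet planted while the body leans.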

Duane Degn
