Voice-controlled personal assistants have made their way into our daily lives through software embedded in today's smartphones. Siri, Google Voice, Cortana and Alexa are a few recognizable names.

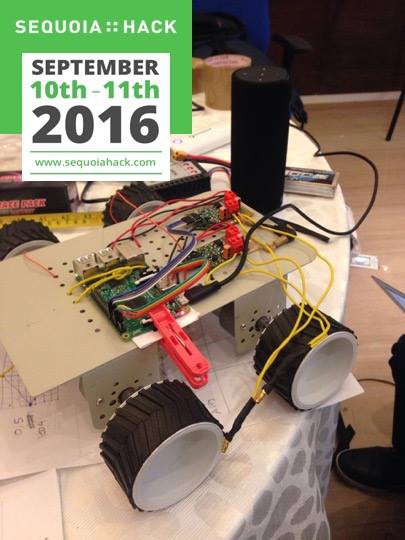

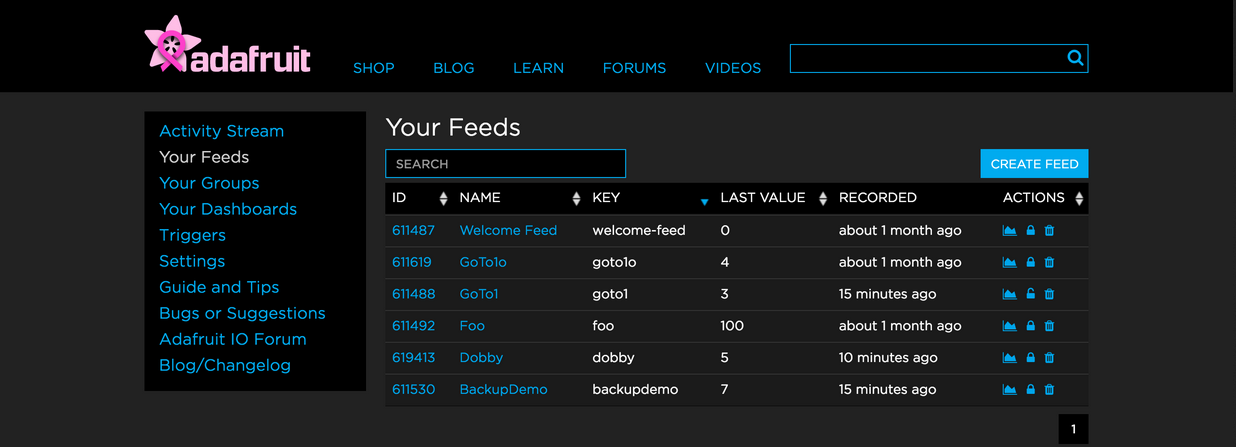

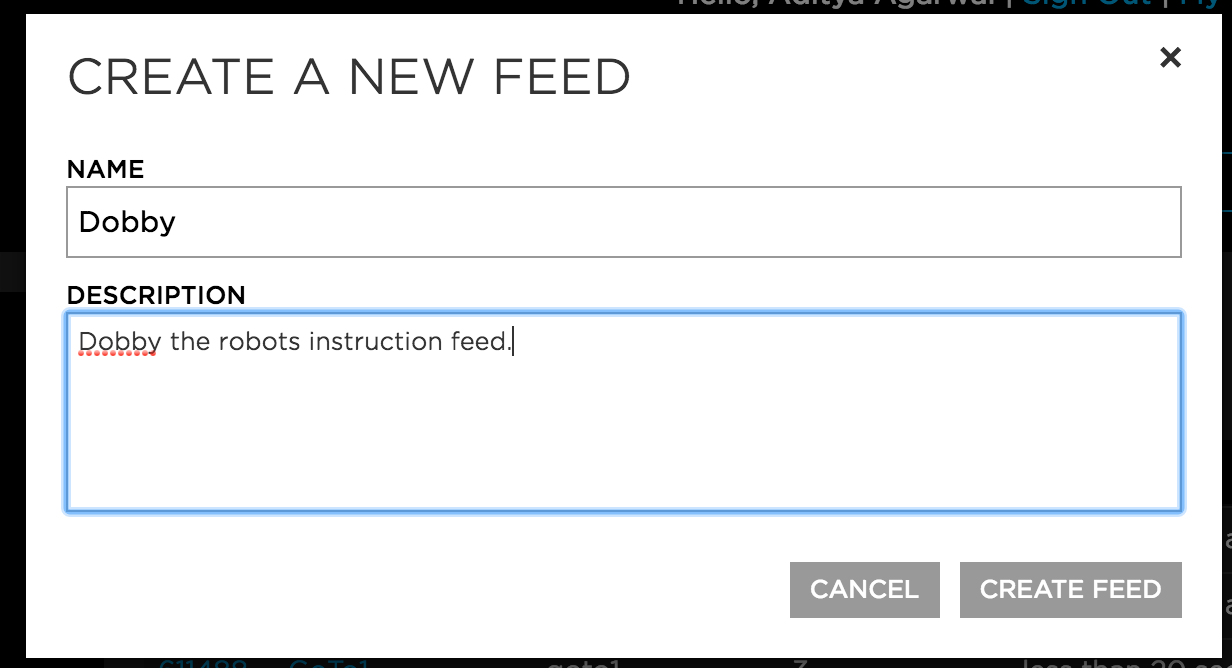

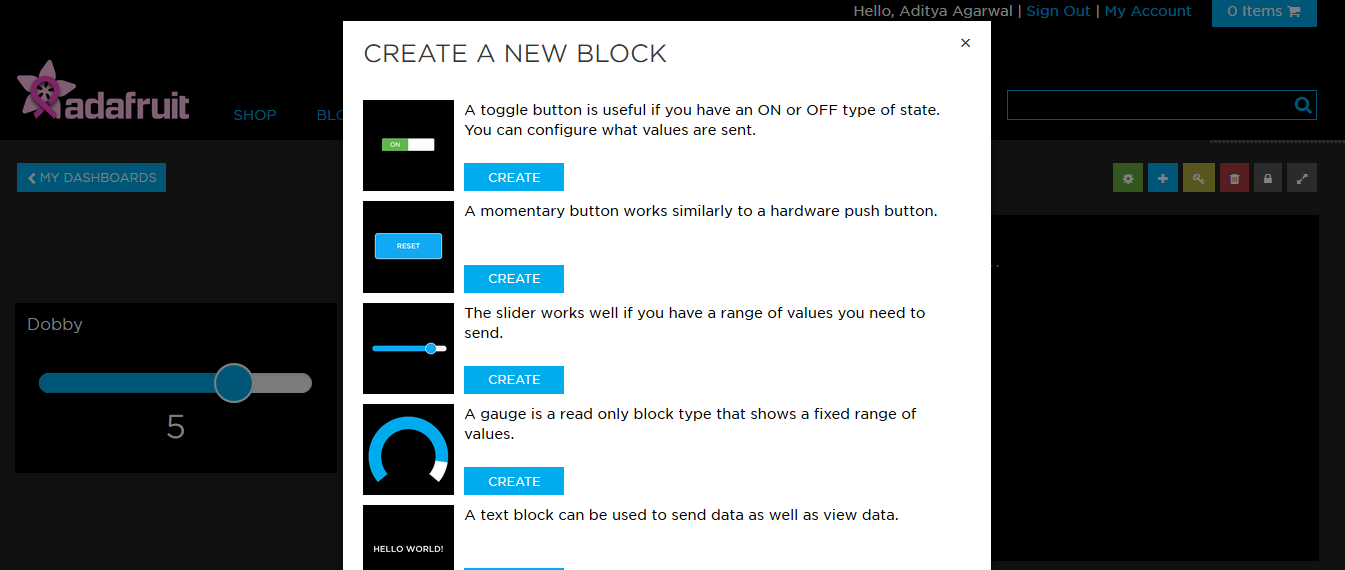

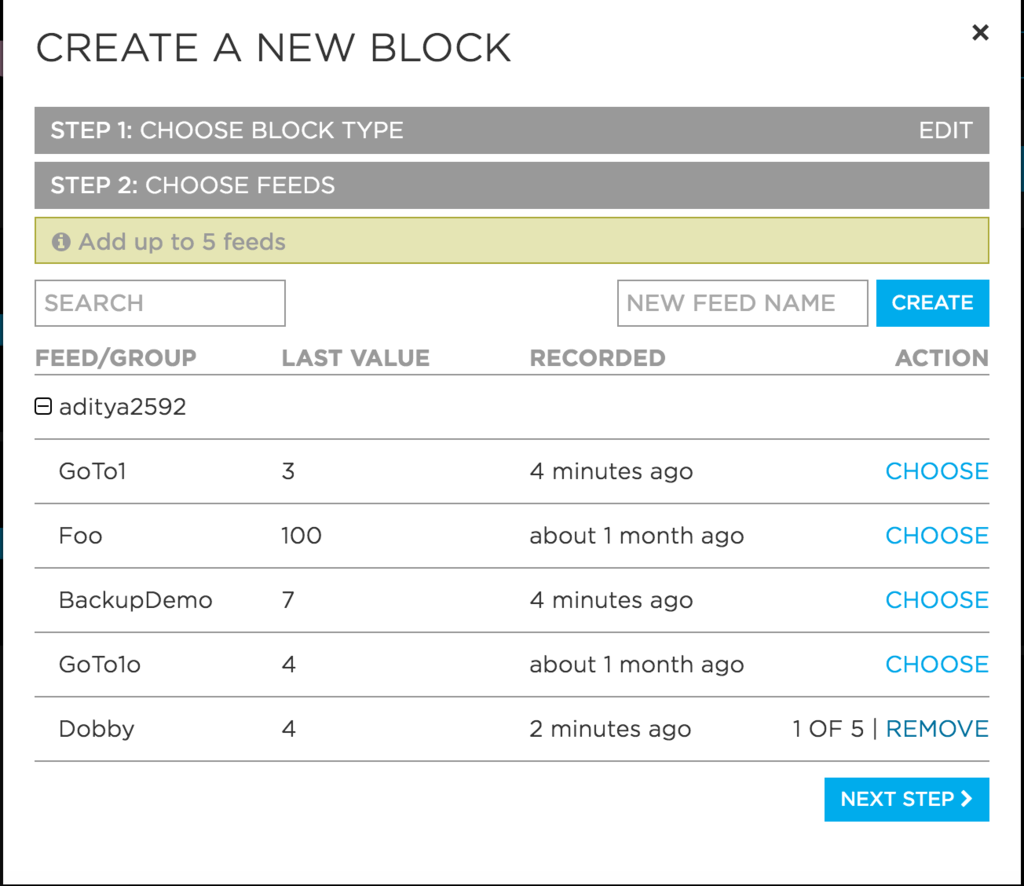

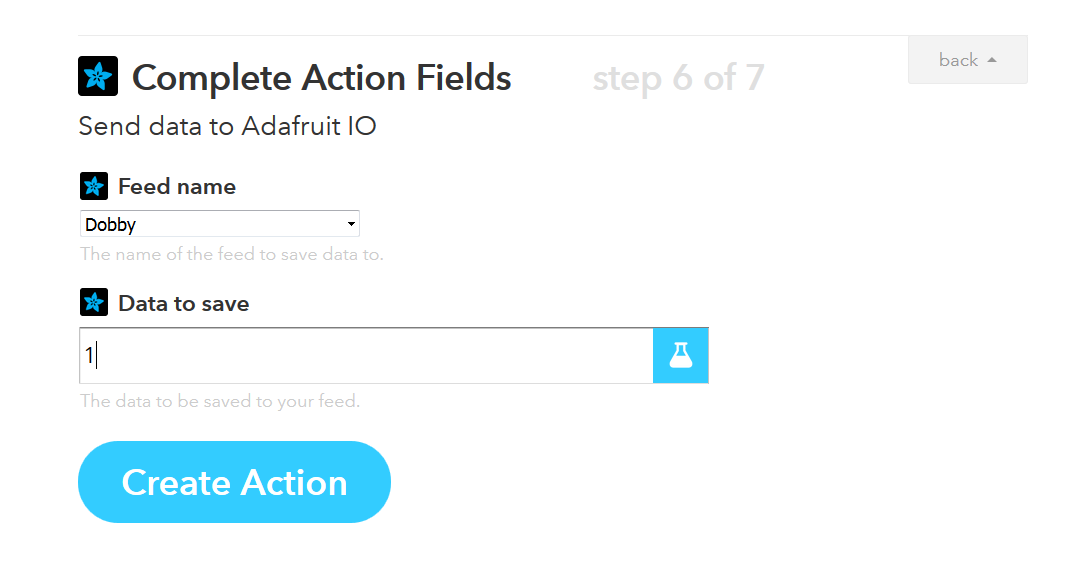

Project Dobby extends the benefits of voice recognition and speech processing to robotics. Dobby follows a user's voice commands and can carry out pre-programmed physical actions. It can be a waiter, a warehouse assistant or a nurse. At home, it can get you coffee from the kitchen, fetch your morning newspaper from the front door or take a selfie when you are partying with friends. The hardware and software can easily be adapted to a new environment.
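To illustrate the idea of mapping a recognized voice command to a pre-programmed action, here is a minimal sketch. It is not Dobby's actual code: the handler functions, the `ACTIONS` table and the `dispatch` function are all hypothetical names, and the sketch assumes the speech recognizer has already produced a text transcript.

```python
import re
from typing import Callable, Dict, Optional


# Hypothetical action handlers. On a real robot these would drive
# motors, a gripper or a camera; here they only return a description.
def fetch_coffee() -> str:
    return "fetching coffee from the kitchen"


def fetch_newspaper() -> str:
    return "fetching newspaper from the front door"


def take_selfie() -> str:
    return "taking a selfie"


# Illustrative keyword -> action table (an assumption, not Dobby's real command set).
ACTIONS: Dict[str, Callable[[], str]] = {
    "coffee": fetch_coffee,
    "newspaper": fetch_newspaper,
    "selfie": take_selfie,
}


def dispatch(transcript: str) -> Optional[str]:
    """Run the first action whose keyword appears as a word in the transcript."""
    words = re.findall(r"[a-z]+", transcript.lower())
    for keyword, action in ACTIONS.items():
        if keyword in words:
            return action()
    return None  # no known command was recognized
```

Adapting this kind of table to a new environment would mostly mean registering new keywords and handlers, which fits the claim that the software can be adapted easily.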

In the future, we plan to extend Dobby with human-following capabilities so that it can serve as an assistant in an unknown environment. It could carry luggage at airports and supermarkets, or push people in wheelchairs and kids in prams, all without human effort and while avoiding obstacles.

Dementor

John Marvie Biglang-awa

eelco.rouw