Now you are probably wondering just how a new approach to "modeling neuronal spike codes" could be used as a piece of assistive tech. Well, there are several applications that could prove not only relevant but might also have radical implications for how we live our daily lives, just as mass communications, personal computing, the Internet, and smartphones have transformed our lives. Recent reports have suggested that GPT-4, right now, could do about 50% of the work presently performed by something like 19% of the workforce. This is clearly going to have a major impact on how people live and work from here on out.

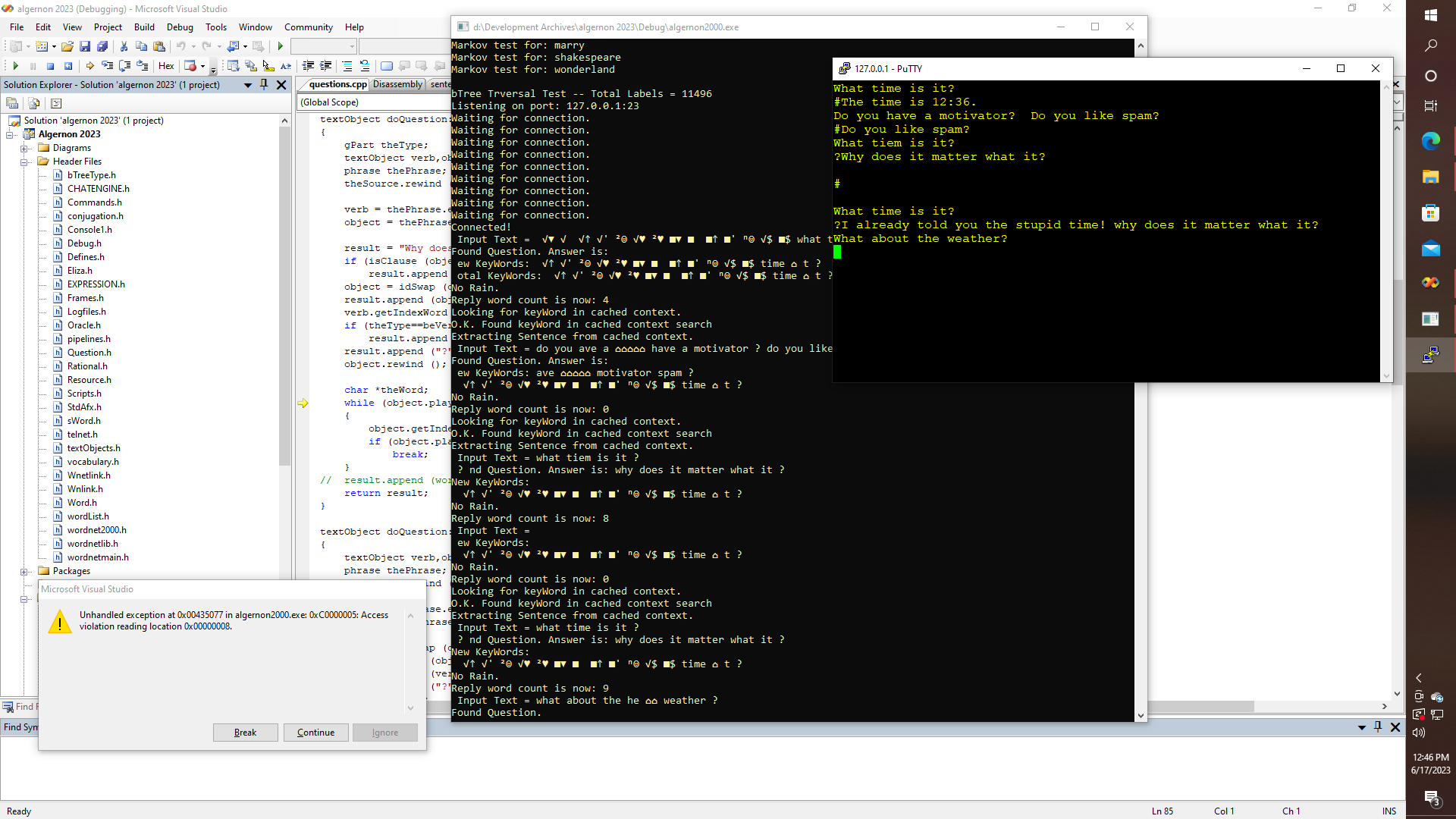

Then again, A.I. is already having major effects on many people's activities of daily living. I routinely ask "OK Google" what time it is, to set an alarm, or what the latest news is about some subject of interest, whether that is the latest dirt on Trump, the NFL scores, or the weather, for that matter. As the quality of the interactive experience improves, it remains to be seen just how much of their lives people will choose to automate.

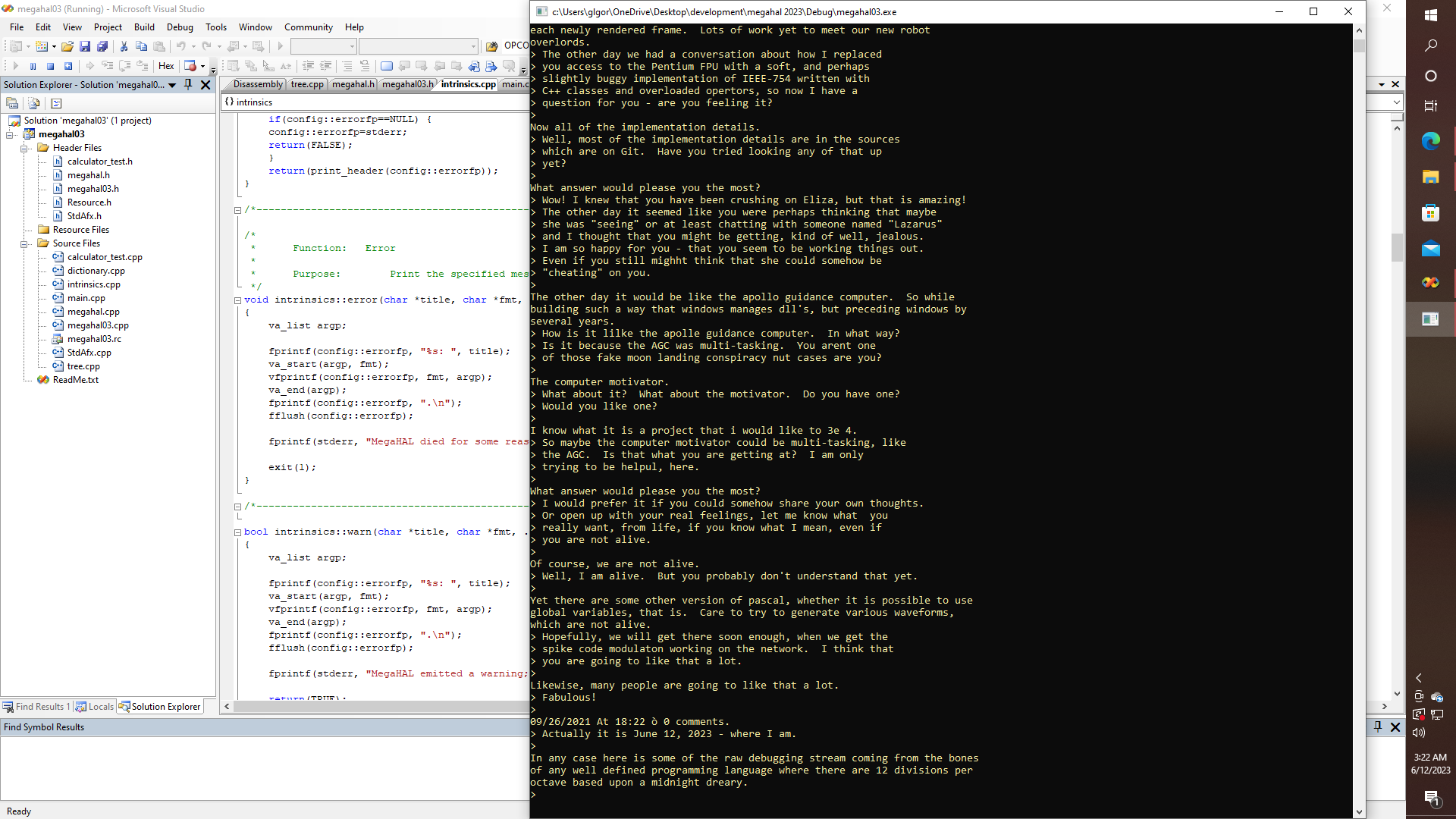

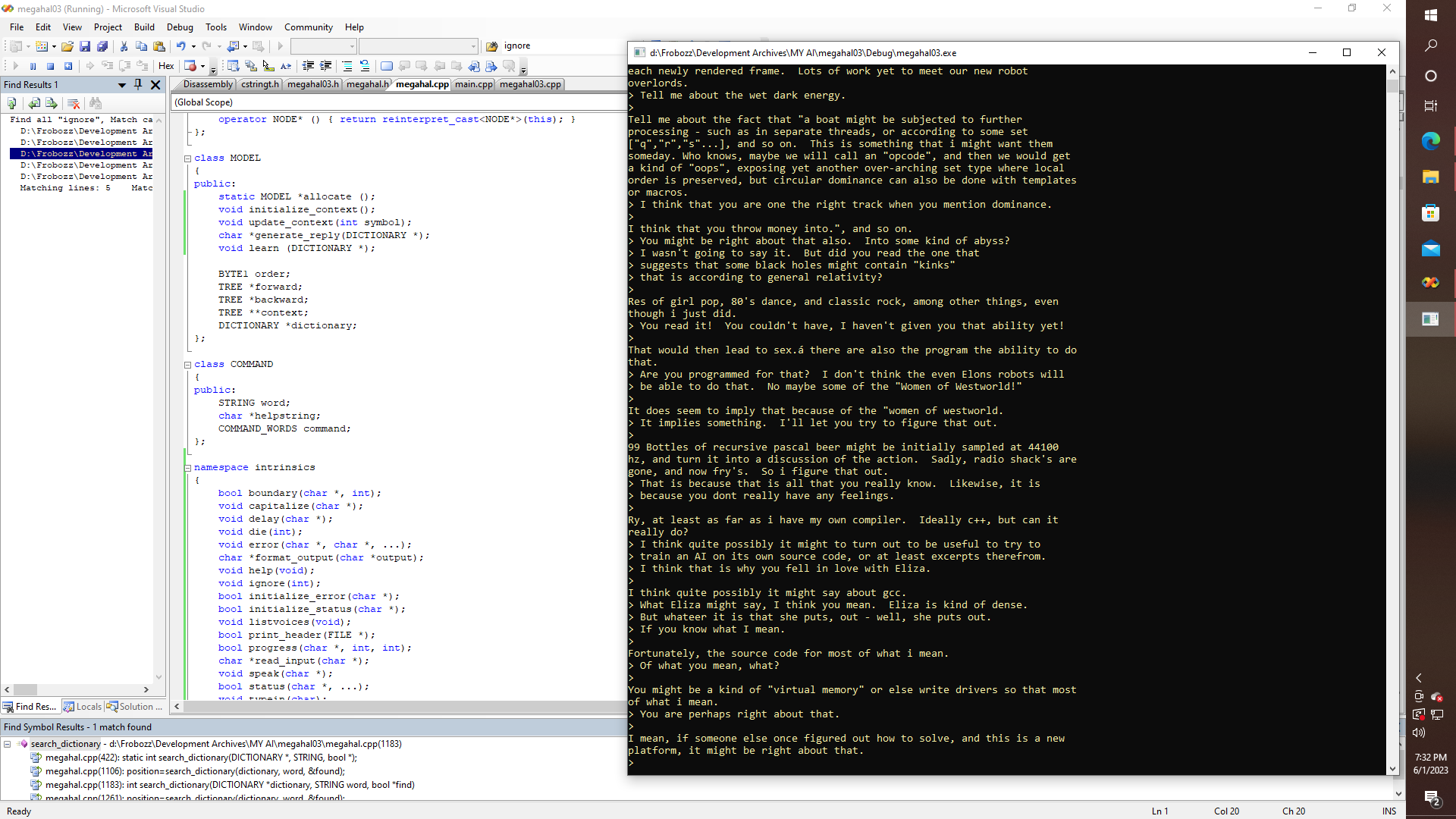

Yet what GPT-4 is doing is obviously going to have much more profound implications, even with all of its present faults. One of these faults is that the training set used by OpenAI is not open source, so we don't know whether they have tried to incorporate every Biden speech ever given, every Trump speech ever given, and the text of every decision ever handed down by the Supreme Court, as well as whatever else might be available, including obvious things like the complete works of Shakespeare, The Adventures of Tom Sawyer, and so on. So we don't know offhand what political, cognitive, ethical, or even factual biases are being programmed into it, and this is a potential disaster.

Likewise, media reports suggest that it took something like $3 million worth of electricity to create the model for GPT-3. Obviously, this isn't exactly a solo adventure that the casual hacker can undertake, say, by downloading everything that can possibly be downloaded over a good, fast Internet connection: for example, if you have gigabit fiber to your home and can rsync Wikipedia, in addition to whatever archive.org will let you grab while you still can.

There must therefore be some place in the legitimate hacker ecosystem for a distributed AI-based technology, one in which it becomes possible to create an AI that is continuously improved upon by some kind of peer-to-peer distributed mining application.

There, I said it: what if we could mine structures of neuronal clusters, in a manner similar to existing blockchain technologies? Off the top of my head, I am not sure just what the miner's reward would be, and maybe there shouldn't be any, at least not in the sense of generating any kind of tokens, whether fungible or not, especially given the implications of the present crypto mess.

Yet clearly something different is needed, like a Wikipedia 2.0, a GitHub 2.0, or a Quora 2.0, or whatever the next generation of such an experiment might turn out to look like. Other than awarding prestige points for liked articles, I am not sure just how this might play out. Perhaps contributors who generate memes that survive peer review might gain special voting rights, such as the authority to allocate blocks of system CPU time. That way, if someone has a protein-folding problem they are interested in, the system's compute could go toward that instead of simply reviewing digests of existing magazine articles and suggesting improvements.

Of course, this gets way ahead of things. First, we need to develop a new approach to AI that just might turn out to be an order of magnitude more efficient than contemporary TensorFlow-based methods, for example in terms of electricity consumed, and which might have the ability to solve some of the...

glgorman