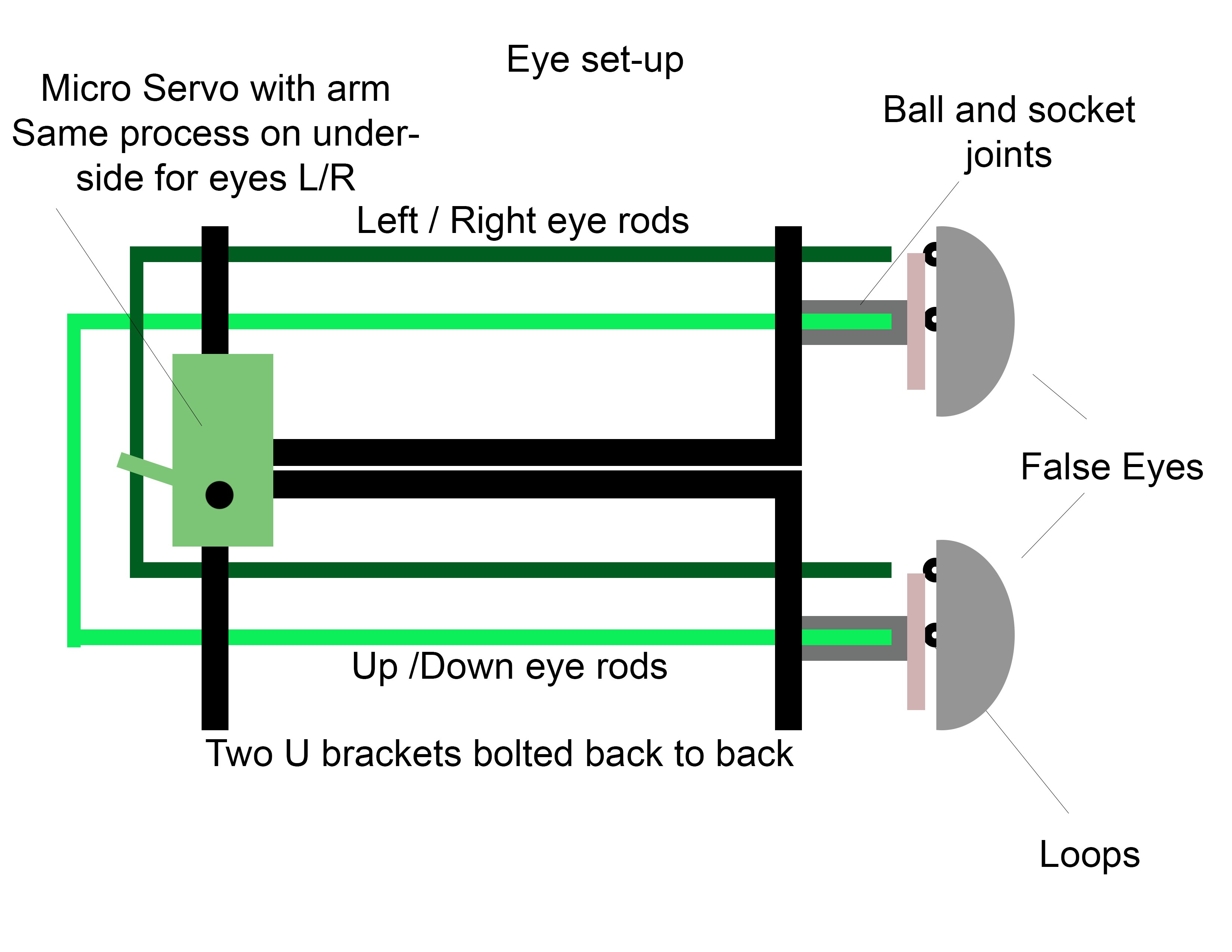

The title of my final-year Multimedia Design project, Actuality and Artificiality, is an adaptation of the postmodernist philosopher Jean Baudrillard's Simulacra and Simulation: simulacra as object and simulation as process.

The robot can track movement and respond to questions typed on a wireless keyboard. Voice recognition can also be implemented using the Mac's Dictation and Speech settings in System Preferences, with input from the Xbox Kinect's internal four-microphone array. The robot uses an adapted version of the ELIZA algorithmic framework (an early precursor of assistants such as Siri) to respond to participants' questions. The resulting script is then passed to AppleScript's speech output so that it can be heard through the robot's internal speaker system. The ELIZA framework can be changed to mimic any individual or language (it is currently based on Marvin from The Hitchhiker's Guide to the Galaxy) and to answer complex questions, making this a highly interactive and knowledgeable system. The voice can also be modelled on specific individuals, and the mouth and lips react to the signal at the computer's audio output, so they are always more or less in time with the speech.

The project is intended as an interactive exhibit, but it is highly adaptable and would also suit museum displays, help desks or interactive theatre.

The system tracks people's movement via a Kinect module. The current system uses an open software library that tracks the pixel nearest to the sensor; however, the script also includes skeleton tracking output, which can be activated by un-commenting the skeleton tracking code and commenting out the point tracking library. With skeleton tracking enabled, multiple individuals can be tracked and interacted with at once, allowing for larger audiences.
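The nearest-pixel tracking described above can be illustrated with a minimal sketch. This is not the project's own code or the library it uses: the function names `nearest_point` and `pixel_to_pan_angle` are hypothetical, the depth frame is assumed to be a plain grid of millimetre readings in which 0 means "no reading", and the 57 degree horizontal field of view is an assumed approximate figure for a first-generation Kinect.

```python
from typing import List, Optional, Tuple


def nearest_point(depth_mm: List[List[int]],
                  min_valid: int = 1) -> Optional[Tuple[int, int, int]]:
    """Return (row, col, depth) of the closest valid pixel, or None.

    Assumption: readings below `min_valid` (e.g. 0) mean the sensor
    returned no depth for that pixel, so they are skipped.
    """
    best = None
    for r, row in enumerate(depth_mm):
        for c, d in enumerate(row):
            if d >= min_valid and (best is None or d < best[2]):
                best = (r, c, d)
    return best


def pixel_to_pan_angle(col: int, width: int, fov_deg: float = 57.0) -> float:
    """Map a pixel column to a pan angle in degrees (0 = image centre).

    Assumption: a simple linear mapping across the horizontal field of
    view; `fov_deg` is an approximate, assumed value.
    """
    return ((col + 0.5) / width - 0.5) * fov_deg


if __name__ == "__main__":
    # A tiny made-up 2x3 depth frame, in millimetres.
    frame = [[0, 1200, 1500],
             [900, 0, 2000]]
    hit = nearest_point(frame)
    if hit is not None:
        row, col, depth = hit
        angle = pixel_to_pan_angle(col, width=len(frame[0]))
        print(f"nearest pixel at row {row}, col {col}, {depth} mm; pan {angle:.1f} deg")
```

In a set-up like this, the pan angle would be sent to the head or eye servos on every frame. Tracking only the single nearest point follows whoever stands closest, which is why switching to skeleton tracking is what allows several people to be followed at once.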

Abstract: Theory

Beginning with Tom Gunning's theoretical model of cinema as a machine for creating optical visceral experiences, the core proposition of this study is that pure CGI characters no longer have the ability to accurately simulate consciousness and materiality, or to meet the expectations of the modern-day cinematic audience. It has been claimed that the movement towards hybrid systems (motion capture / live-action integration) provides a form of mediation between actuality and virtuality, adding depth ('soul / consciousness') and a kinaesthetic grounding of external operations in an attempt to solidify and reify the virtual image into something organic. However, it is suggested here that hybrid systems have problems concerning the inaccurate approximation of surficial reflection, the portrayal of additional appendages, incomplete character formation (interaction / performance) and encapsulating / staying true to an actor's performance during editing. The imprecision of these elements becomes increasingly apparent over time, especially at close proximity, where it becomes discernible to the evolving critical eye of the average modern-day cinematic observer.

This projection positions hybrid systems not merely as mediation between physical reality and holographical dimensions but as a means of returning to the more substantial and grounded animatronic character systems. Because they are shaped by the parameters of the physical world, modern animatronic characters / puppets exhibit more detailed aesthetic verisimilitude and more organic simulated external and internal operations at close proximity than the most advanced CGI and hybrid systems. This research explores a possible return to animatronic special effects as the primary medium for character creation in the future of film, overtaking CGI and other virtual hybrid systems, which lack the ability to propagate visceral optical experiences, fine detail / nuance and genuine / responsive characters to meet the evolving critical expectations of the cinematic observer. Technological advancements in animatronic interactive control systems allow accurate tracking of movement, autonomous extemporaneous expressions...

Carl Strathearn

TTN

Ben Nortier

agp.cooper
