Dandelion don't tell no lies

Dandelion will make you wise

Tell me if she laughs or cries

Blow away dandelion

( Rolling Stones, 1967 )

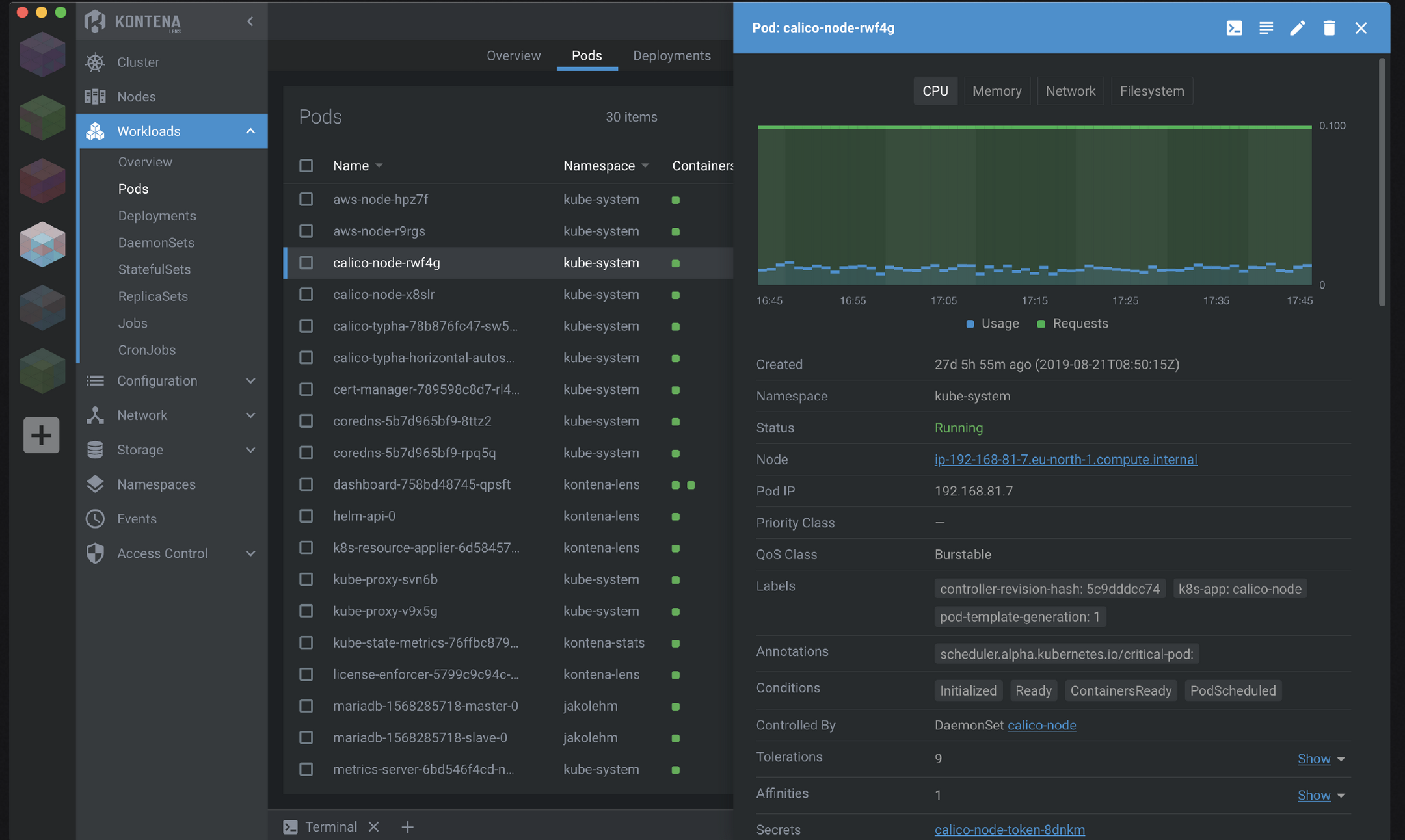

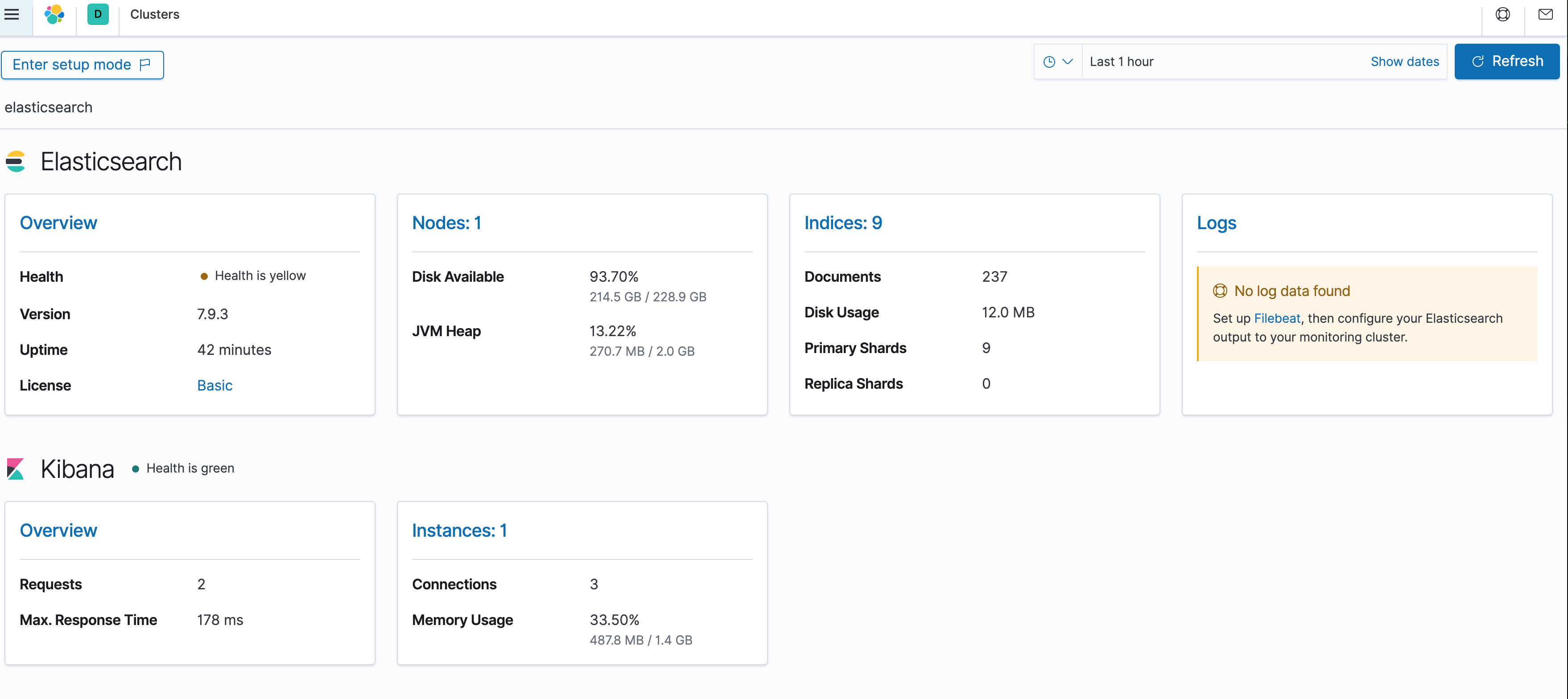

Ever since I first learned Kubernetes, I wanted to have my own cluster, that didn't bleed my hard earned income into the cloud. My butt puckers a little every time I deploy in the cloud - wondering what that's gonna cost? The important things to explore and manage are costly there - logs and observability. So runtime is the valuable quality to the Kubernetes student - containers are ephemeral but your cluster shouldn't be. Churning a cluster configuration ( tear-down and rebuild ) is only good training for startup debugging. It doesn't teach you the lifecycle or the value of knowing how to find where the bodies are buried (logs and metrics). It is not cheap to leave a multi-node cluster running for long periods at cloud vendors.

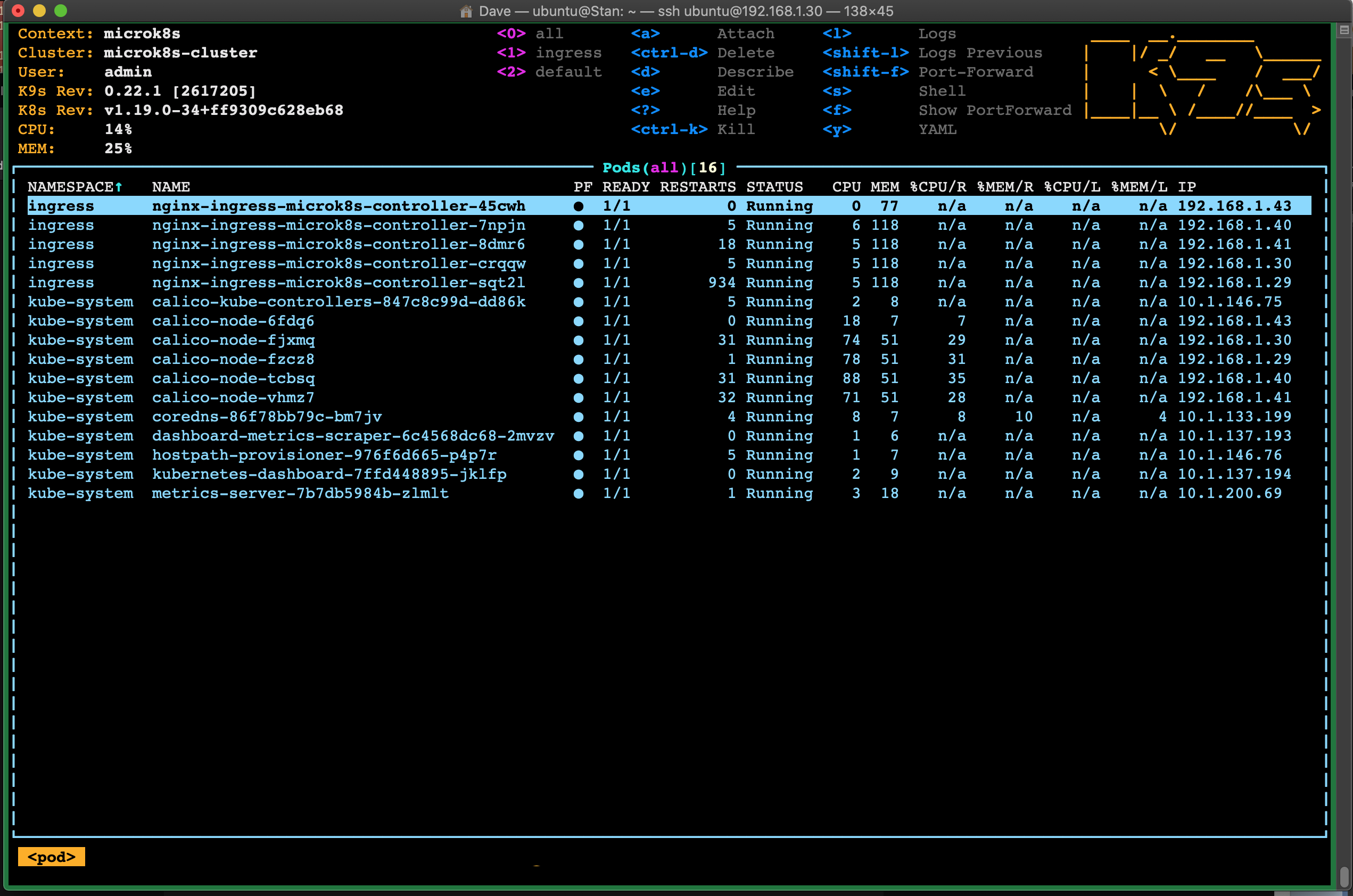

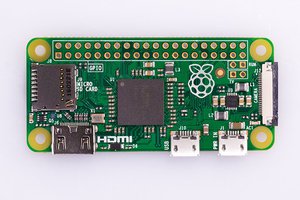

Kubernetes is a rather expansive thing - it becomes more of a practice than a unit of knowledge due to the velocity of development. Project Dandelion is a way to explore Kubernetes wisdom in a contained environment. The actual point is a software project - that needs a hardware bootstrap to run. This project will cover my approach to creating a core private cloud appliance with Microk8s HA on ARM with a x86 node or two. I use Pi 4/8G nodes and Flash SATA storage over UASP compatible USB adapters.

One thing they don't go deep on is the ARM environment. And the Pi is a toy platform, they say. So was the 8086, at one time. Microk8s is intended to be used as a way to build edge server systems that integrate more naturally with the upstream services of the cloud. This is a project for folks that bore easily with IFTTT or who desire to attempt more complex integration using container applications.

I've done some of the steps differently. This project is to document those differences and inspire others to explore Kubernetes in a bare-metal environment. As a technology Kubernetes is quite abstract and is usually deployed in a popular abstraction for hardware ( the cloud ). This project allows a certain satisfying physical manifestation and a safe place to play, inside your firewall and outside your wallet. I'll put in logs for interesting things that happen and details for the successful experiments.

Prince or pauper, beggar man or thing

Play the game with every flower you bring

CarbonCycle

CarbonCycle

helge

helge

Colin O'Flynn

Colin O'Flynn

Ken Yap

Ken Yap

wismna

wismna