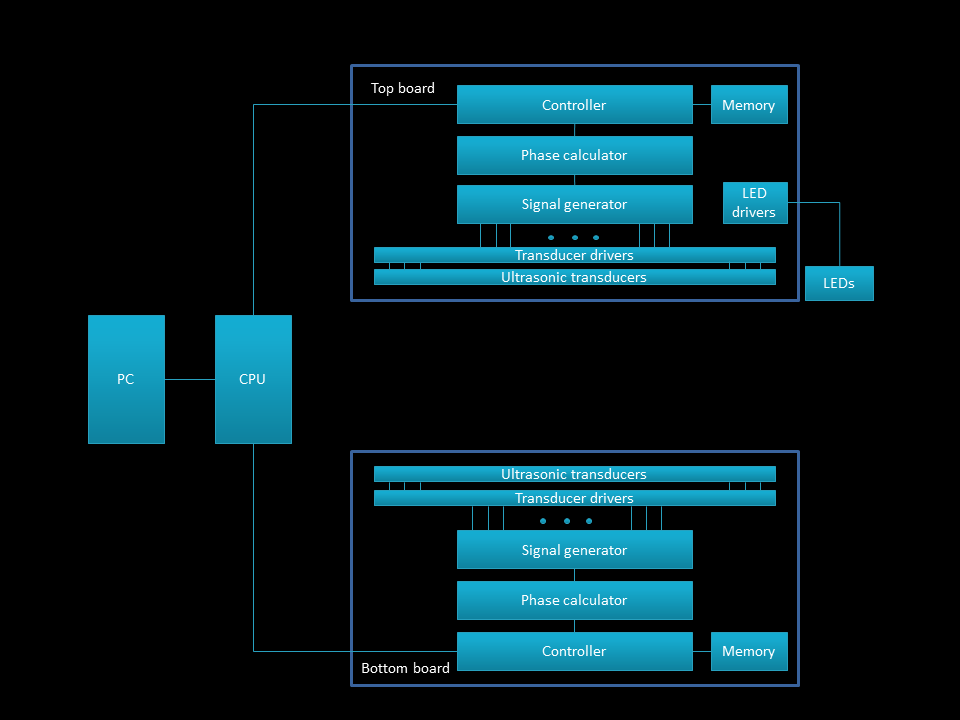

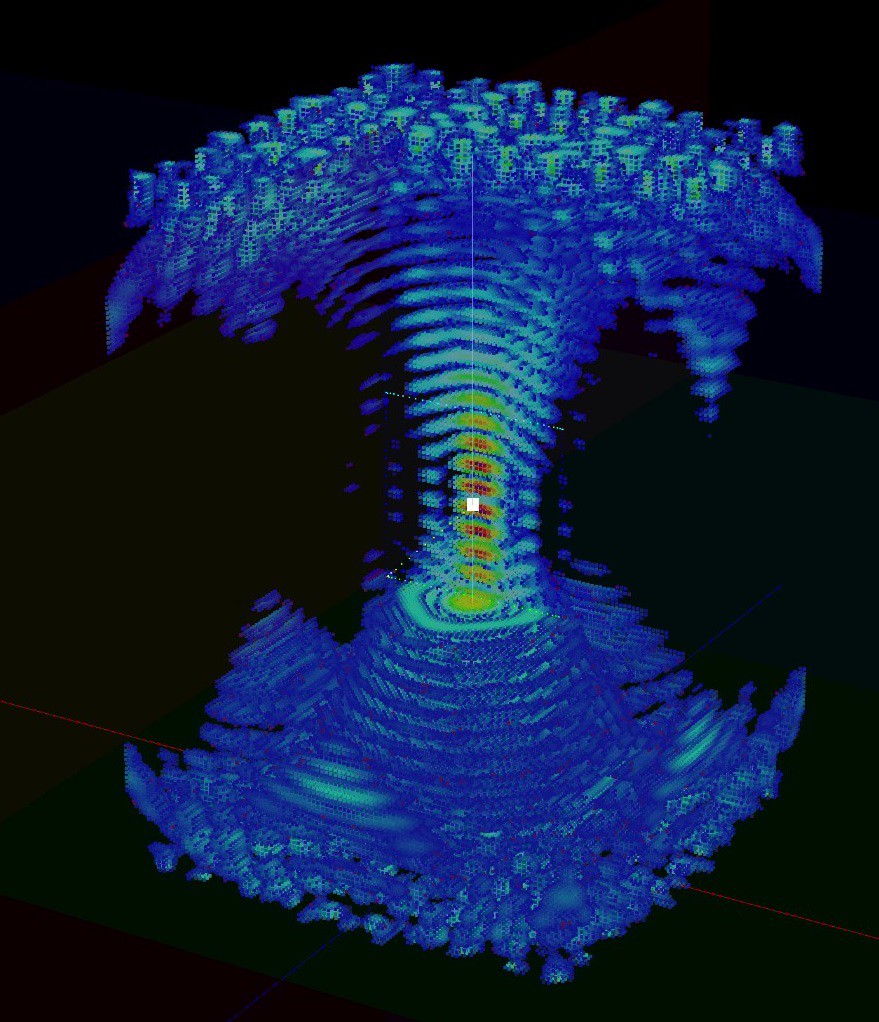

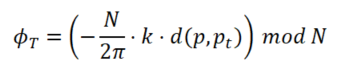

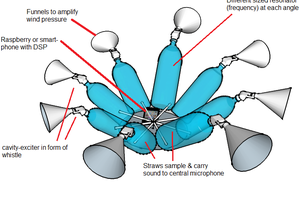

This project shows that it is possible to create floating 3D images. Imagine being able to see life-sized (albeit small) models of an item you are buying or a CAD model you are building, or possibly even the face of a loved one in full-color 3D, without cumbersome glasses to simulate the 3D effect. The technology also lets a user feel objects with their fingers and hear sound localized to parts of the image, so not only could you see the face of a loved one, you could also touch and hear them.

All the build files, including source code, schematic and layout files, and FPGA code, can be found here: https://github.com/danfoisy/vdatp

This project is based on a paper published in Nature: http://sro.sussex.ac.uk/id/eprint/86930/

Licences:

Qt: LGPLv3

KiCad: GNU General Public License version 3

KiCad Libraries: CC-BY-SA 4.0

Quartus 18.1 Lite: proprietary Intel license

Dan Foisy

Dave Merrett

Fabien-Chouteau

danielmcgraw

Dillon Nichols