Automatic Speech Recognition AI Assistant

Turning a Raspberry Pi 4B into a satellite for a self-hosted language model, all with a sprinkle of ASR and NordVPN Meshnet


Project files (all uploaded 12/21/2023 at 14:50):

- x-python-script, 3.45 kB
- Portable Network Graphics (PNG), 15.26 kB
- x-python-script, 2.70 kB

In my first project log, I mentioned that the examples provided with the Vosk library were very easy to work with and worked right from the get-go, so using it for this project was a no-brainer. After all, if it's not broken, don't fix it.

To the code provided by the Vosk devs, I've added simple POST request handling along with a PyGame-based display. The "frontend" shows the assistant "thinking", and once the language model has responded to the POST request, it displays the response.

For the time being, and as a proof of concept, the assistant has only two states: "thinkingFace" and "responseFace". The "thinkingFace" has eyes moving side to side, which, at least in my mind, mimics someone trying to figure things out, and the "responseFace" displays the text the language model responded with.
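A minimal sketch of how the two faces could be driven, assuming the POST request runs on a background thread so the eyes keep moving while the model is thinking. The `Face` enum, `eye_offset()`, the `Assistant` class and the sine-based eye sweep are illustrative choices, not taken from the project files, and the actual PyGame drawing is left out.

```python
import math
import threading
from enum import Enum, auto


class Face(Enum):
    THINKING = auto()  # "thinkingFace": eyes sweep side to side
    RESPONSE = auto()  # "responseFace": the model's reply is shown as text


def eye_offset(t: float, amplitude: float = 20.0, period: float = 1.5) -> float:
    """Horizontal eye offset in pixels at time t (seconds): a simple sine sweep."""
    return amplitude * math.sin(2 * math.pi * t / period)


class Assistant:
    """Tracks the current face and runs the blocking request off the UI thread."""

    def __init__(self, ask):
        self._ask = ask  # hypothetical callable: prompt -> reply text
        self._lock = threading.Lock()
        self.face = Face.RESPONSE
        self.reply = ""

    def submit(self, prompt: str) -> threading.Thread:
        """Switch to the thinking face and start the request in the background."""
        with self._lock:
            self.face = Face.THINKING
        worker = threading.Thread(target=self._work, args=(prompt,), daemon=True)
        worker.start()
        return worker

    def _work(self, prompt: str) -> None:
        try:
            reply = self._ask(prompt)
        except Exception as exc:  # never leave the UI stuck on the thinking face
            reply = f"Error: {exc}"
        with self._lock:
            self.reply = reply
            self.face = Face.RESPONSE

    def snapshot(self):
        """Return (face, reply) atomically for the render loop."""
        with self._lock:
            return self.face, self.reply
```

In a PyGame loop, `snapshot()` would be called once per frame: while the face is `THINKING`, draw the eyes shifted by `eye_offset(pygame.time.get_ticks() / 1000)`, and once it flips to `RESPONSE`, render the reply text. Keeping the request off the main thread is what lets the animation keep running while the model works.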

One more kink that needs to be ironed out is flipping the display orientation 180 degrees. However, as it turns out, it is not so simple, and all the guides I found on the internet for doing so didn't work with my display.

Project files are available in their respective section if you want to give it a try.

Now that things were shaping up, I needed a way to access the language model from outside my local network. The idea is that you can just grab the box and take it anywhere, or give it to anyone. However, I really don't want to just expose the model to the internet and cross my fingers, hoping no one stumbles upon it by accident. While security by obscurity might work, it certainly isn't a long-term solution.

While I have some understanding of how NGINX, dynamic DNS, and port forwarding work together, setting things up that way would still leave the model exposed to the open internet.

But! There is another way to address this, and it's quite convenient. It's free, easy to set up, available on almost any platform (Windows, macOS, iOS, Android, Linux), and did I mention that it's free? I'm talking about NordVPN Meshnet.

It allows you to create a mesh network of devices, effectively establishing direct connections between them. This enables a couple of things, but most importantly it grants access to the language model from anywhere in the world. The two additional benefits are that we can SSH into the Satellite at any given point, and all the connections through Meshnet are encrypted.

It comes with a convenient open-source Linux app and an install script.

You can install it with the following command:

```bash
sh <(curl -sSf https://downloads.nordcdn.com/apps/linux/install.sh)
```

There are two ways to log in: through a web browser, or in typical Linux fashion, using a token. Either way, you will need to open a browser at some point, to either click through the login steps or generate a login token.

Web browser:

```bash
nordvpn login
```

Token:

To generate a token, log into your Nord account, head over to the Meshnet tab, set up NordVPN manually, and last but not least, click on "Generate new token".

Then you can choose to create a permanent or temporary (30-day) token.

```bash
nordvpn login --token <insert_your_token_here>
```

After you log in, make sure Meshnet is on with:

```bash
nordvpn set meshnet on
```

With Meshnet running, you can check your satellite's Meshnet IP and Nord name using:

```bash
nordvpn meshnet peer list
```

The same applies to the server hosting the LocalAI model. Once both devices have Meshnet running, everything is ready to go.

And that's literally it for the networking setup. As long as the satellite is connected to a Wi-Fi network, it can reach the language model with a POST request. There are no hidden fees and no small print. It just works.
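Before sending anything, it can help to confirm that the LocalAI server is actually reachable over Meshnet. The sketch below only tries to open a TCP connection; `is_reachable()` is a hypothetical helper, the host name in the usage note is a placeholder (take the real name or IP from `nordvpn meshnet peer list`), and port 8080 is assumed because it is LocalAI's default.

```python
import socket


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection resolves the name (e.g. a Meshnet peer name) and connects
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failures, refused connections and timeouts
        return False
```

For example, `is_reachable("homelab-server.nord", 8080)` (hypothetical peer name) returns `False` instead of raising when Meshnet is off on either device, which makes it easy to show a friendly message on the display.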

Oh, and by the way, if you want to run all of this from a virtual machine, that works too! Just make sure the guest has internet access.

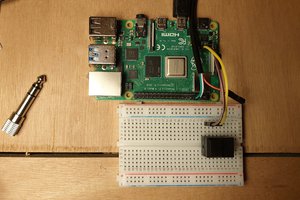

With the proof of concept ready, I could finally think about the hardware. The shopping list is as follows:

The first point is a no-brainer: with Raspberry Pi stock no longer being a problem, you can easily get your hands on a reasonably priced RPi 4B. I won't need that much RAM, so I opted for the 4GB model.

The tricky part is the microphone and, as it later turned out, the display. There are plenty of microphone arrays on the market. However, they're pretty pricey, and I'd rather stay on the budget-friendly side of things for hobby projects. Shields are absolutely out of the question, as I can't reasonably pack them together with a GPIO display.

Looking around the web, I came across the PlayStation Eye, which has a four-microphone array built in. It also has a USB connector, works on Raspbian pretty much out of the box, and comes with the bonus of a low-resolution optical sensor. All for around 4 dollars (shipping not included!). Sold!
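The PS Eye's microphone array usually shows up as a single multichannel capture device, while Vosk's recognizer expects mono 16-bit PCM. If the stream is opened with all four channels (an assumption about how you capture audio; an ALSA `plughw` device may be able to downmix for you instead), the channels could be averaged as in this sketch. `downmix_to_mono()` is a hypothetical helper, not part of Vosk.

```python
import sys
from array import array


def downmix_to_mono(frames: bytes, channels: int = 4) -> bytes:
    """Average interleaved signed 16-bit little-endian PCM channels into mono."""
    samples = array("h")  # signed 16-bit samples
    samples.frombytes(frames)
    if sys.byteorder == "big":  # captured PCM is little-endian; match the host
        samples.byteswap()
    if len(samples) % channels:
        raise ValueError("buffer does not hold a whole number of frames")
    # One output sample per frame: the mean of that frame's channel samples
    mono = array("h", (
        sum(samples[i:i + channels]) // channels
        for i in range(0, len(samples), channels)
    ))
    if sys.byteorder == "big":  # convert back to little-endian for Vosk
        mono.byteswap()
    return mono.tobytes()
```

The result can be passed straight to `KaldiRecognizer.AcceptWaveform()`. Averaging keeps every value inside the 16-bit range, at the cost of ignoring any beamforming the array could in principle offer.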

With the help of the internet, I found some pictures of the PCB inside, laid out on a size reference mat. Perfect!

With a certain degree of confidence, I could start blocking out the case for my device.

I ordered a run-of-the-mill 3.5" 320x480 TFT GPIO display shield, and while it does the job, I really think I should have opted for something else. The refresh rate is quite low because the SPI interface is the bottleneck.
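To put a rough number on that bottleneck: a full-screen redraw has to push every pixel through the SPI bus one bit per clock, so the clock rate caps the frame rate. The 16-bit colour depth and 16 MHz SPI clock used below are assumptions for illustration (the real values depend on the display controller and its driver), and command overhead is ignored, so this is an upper bound.

```python
def max_fps(width: int, height: int, bits_per_pixel: int, spi_clock_hz: float) -> float:
    """Upper bound on full-screen refreshes per second over a single-lane SPI bus."""
    bits_per_frame = width * height * bits_per_pixel  # every pixel, every frame
    return spi_clock_hz / bits_per_frame


# 320 x 480 pixels at an assumed 16 bpp and 16 MHz SPI clock
print(f"{max_fps(320, 480, 16, 16_000_000):.2f} fps")
```

With those assumed numbers, a single frame is 2,457,600 bits, which works out to roughly 6.5 full redraws per second at best. Redrawing only the region that changes (for example, just the eyes) is the usual way to get more out of such a display.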

The first version of the case was, well... let's just say it was the reason I made another version.

Here are a couple of pictures.

But this version turned out way too bulky, so I gave it another shot. This time, I packed everything tightly, reducing the footprint considerably. By then, all the other parts had been delivered, so I could supplement the pictures I found on the internet with some good ol' caliper measurements.

Once printed, the case needed some heat-set inserts, since I had a lot of DIN912 bolts lying around.

I even went as far as printing two batches in different filament colors to add some flair, ultimately settling on a red front with a black base.

I set out to find open-source projects that had already achieved the same thing, or something similar.

The first thing that I found was Rhasspy, which is "an open source, fully offline set of voice assistant services for many human languages... ". While it's nearly everything that I need for my project, it's also a little bit too much, as many of the available features were of no use to me.

What's really great is that you can explore all the libraries that Rhasspy (or, more likely, Rhasspy v3) takes advantage of. There, I discovered Vosk, a speech recognition toolkit that can run on a Raspberry Pi 4B.

Before ordering a Raspberry Pi for the project, I decided to test the examples provided in Vosk's GitHub repository. I chose to go with the Python API, even though I’m more comfortable with JavaScript. Setting up the Python project was just much easier overall.

To absolutely nobody's surprise, the example worked beautifully. It started right up and inferred text from speech with quite high accuracy, even with the smaller model.
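For reference, transcribing a file with Vosk's Python API looks roughly like the sketch below. It is modelled on the `test_simple.py` example from the Vosk repository but trimmed down; `transcribe_wav()` and `extract_text()` are hypothetical helper names, and `Model(lang="en-us")` assumes a recent `vosk` package that can fetch the small English model by language name.

```python
import json
import wave


def extract_text(result_json: str) -> str:
    """Pull the recognized text out of a Vosk result JSON string."""
    return json.loads(result_json).get("text", "")


def transcribe_wav(path: str) -> str:
    """Transcribe a mono, 16-bit PCM WAV file with Vosk (pip install vosk)."""
    # Imported here so extract_text() stays usable without vosk installed
    from vosk import KaldiRecognizer, Model

    with wave.open(path, "rb") as wf:
        if wf.getnchannels() != 1 or wf.getsampwidth() != 2 or wf.getcomptype() != "NONE":
            raise ValueError("expected a mono 16-bit PCM WAV file")
        model = Model(lang="en-us")  # may download the small English model first
        rec = KaldiRecognizer(model, wf.getframerate())
        pieces = []
        while True:
            data = wf.readframes(4000)
            if not data:
                break
            if rec.AcceptWaveform(data):  # True once an utterance is finalized
                pieces.append(extract_text(rec.Result()))
        pieces.append(extract_text(rec.FinalResult()))  # flush the last utterance
    return " ".join(p for p in pieces if p)
```

The microphone version works the same way, except the audio chunks come from a live input stream instead of `readframes()`.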

Now that I had automatic speech recognition set up, I wanted someone or something to talk to. Hence, I started looking for language models I could interface with. Long story short, I found LocalAI. According to the linked website "LocalAI act as a drop-in replacement REST API that’s compatible with OpenAI API specifications for local inferencing. It allows you to run LLMs, generate images, audio (and not only) locally or on-prem with consumer grade hardware, supporting multiple model families that are compatible with the ggml format. Does not require GPU.".

There are two really important points in this description:

And throw it on my homelab server I did! What's very helpful is that LocalAI provides a great step-by-step guide on setting up the model in a Docker container. I'm not sure there's currently an easier way to do it (great job!).

Now that I had a language model up and running, I needed to send the inferred text to the LocalAI API as a request. Once the model replies, I can display the response.
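Since LocalAI follows the OpenAI API specification, the request can be a plain chat completion POST to `/v1/chat/completions`. Here is a minimal standard-library sketch, assuming LocalAI's default port 8080; the host name `homelab-server.nord` and the model name are placeholders to replace with your own, and the helper names are hypothetical.

```python
import json
import urllib.request

# Placeholders: the LocalAI host's Meshnet name/IP and the model configured there
BASE_URL = "http://homelab-server.nord:8080"
MODEL = "your-model-name"


def build_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion POST carrying the recognized text."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_reply(body: bytes) -> str:
    """Return the assistant's text from a chat completion response body."""
    return json.loads(body)["choices"][0]["message"]["content"]


def ask(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and block until the model replies (CPU inference can be slow)."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        return parse_reply(resp.read())
```

Passing the text returned by Vosk into `ask()` and drawing the returned string on screen completes the loop. The generous timeout reflects that a CPU-only model may take a while to answer.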

With a little bit of fiddling with Postman, handling POST requests, and a lot of ChatGPT assistance, due to my less-than-adequate programming skills, I managed to make it work. So far everything is being run on my homelab server - hence the long cable running from the cupboard to the microphone.


Ah, the tram that doesn't run. ;)

On a serious note, it's a little bit different. If you're looking for a self-hosted alternative to the big Voice Assistant providers like Amazon Alexa or Google Assistant, you want to look at Rhasspy V3 (the V3 part is important). It has been integrated into Home Assistant too, which is a big plus.

This project focuses more on being able to interface with an AI model through a portable device.


Does it work with https://mycroft.ai/?