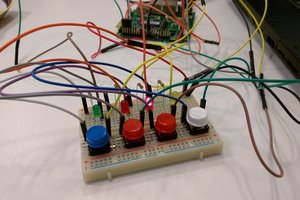

The sensitivity of the gesture to be detected can be tuned to the motion that each individual can comfortably make. Development is taking place between InfoLab21, Lancaster University and Beaumont College, Lancaster, UK. The students at Beaumont have a variety of physical mobility issues, mostly as a result of cerebral palsy. This means that I can't ask them to replicate a predefined gesture; instead, I need to be able to recognise whatever gesture each student can make.

The aim is to use HandShake as a virtual switch so that students can interact with their communication devices. The college uses Sensory Software's Grid 2 communication software, which can be operated with a single switch to create speech or to operate environmental controls. Giving somebody a single reliable switch can therefore make a difference to their ability to communicate.
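This page doesn't say how HandShake matches gestures. As one illustration of how a detector can be tuned to whatever motion a person can make, here is a minimal sketch using dynamic time warping (DTW) over 3-axis accelerometer samples. A few practice repetitions are recorded, the first is used as the template, and the match threshold is set from how much the person's own repetitions vary. The names `GestureSwitch`, `calibrate` and `margin` are hypothetical, and this is plain desktop Python, not the HandShake code (that is in the GitHub repository).

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one accelerometer reading (x, y, z)


def dtw_distance(a: Sequence[Sample], b: Sequence[Sample]) -> float:
    """Dynamic time warping distance between two accelerometer traces.

    Each step costs the Euclidean distance between the two 3-D samples, so
    gestures made faster or slower than the template can still line up.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    cost: List[List[float]] = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


class GestureSwitch:
    """Hypothetical virtual switch: 'pressed' when a window of samples is close to the template."""

    def __init__(self, template: Sequence[Sample], threshold: float) -> None:
        self.template = list(template)
        self.threshold = threshold  # per-user tolerance: larger means more forgiving

    def is_pressed(self, window: Sequence[Sample]) -> bool:
        return dtw_distance(self.template, window) <= self.threshold


def calibrate(repetitions: Sequence[Sequence[Sample]], margin: float = 1.5) -> GestureSwitch:
    """Build a switch from two or more practice repetitions of the user's own gesture.

    The first repetition becomes the template; the threshold is the largest
    distance from it to the other repetitions, scaled by a safety margin.
    """
    if len(repetitions) < 2:
        raise ValueError("need at least two repetitions to estimate variability")
    template = repetitions[0]
    spread = max(dtw_distance(template, r) for r in repetitions[1:])
    return GestureSwitch(template, spread * margin)
```

Deriving the threshold from the person's own repetitions, rather than using a fixed value, is what lets the same code accept a small, inconsistent movement from one user and a large, repeatable one from another. In a real device the `is_pressed` result would be mapped to a switch press for the communication software.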

Preliminary testing can be seen here:

The need for this project was identified by the Technologists and Occupational Therapists at Beaumont College.

The latest manual, with build details and code, can be found on my GitHub at:

https://github.com/hardwaremonkey/microbit

Some of my other projects and earlier work on this project can be found at https://sites.google.com/site/hardwaremonkey/

With thanks to InfoLab21, Lancaster University and Beaumont College, Lancaster for all of the help with this and my other projects.

matt oppenheim