1. Introduction

As part of our student project Speak4Me, we want to help people who are unable to communicate verbally and who suffer from severe physical disabilities. We do this by providing a wearable “eye-to-speech” solution that enables basic communication. Users can express themselves with customizable phrases organized into situational and self-defined profiles, giving them a total of 128 phrases to communicate with their environment.

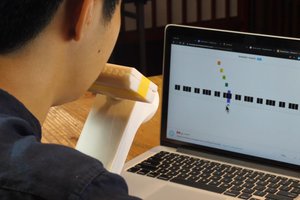

Speak4Me outputs audible predefined phrases in response to simple eye movements in certain directions and within certain timeframes, which allows basic communication. A simple web interface lets users create profiles, with no limitation on word complexity or length.

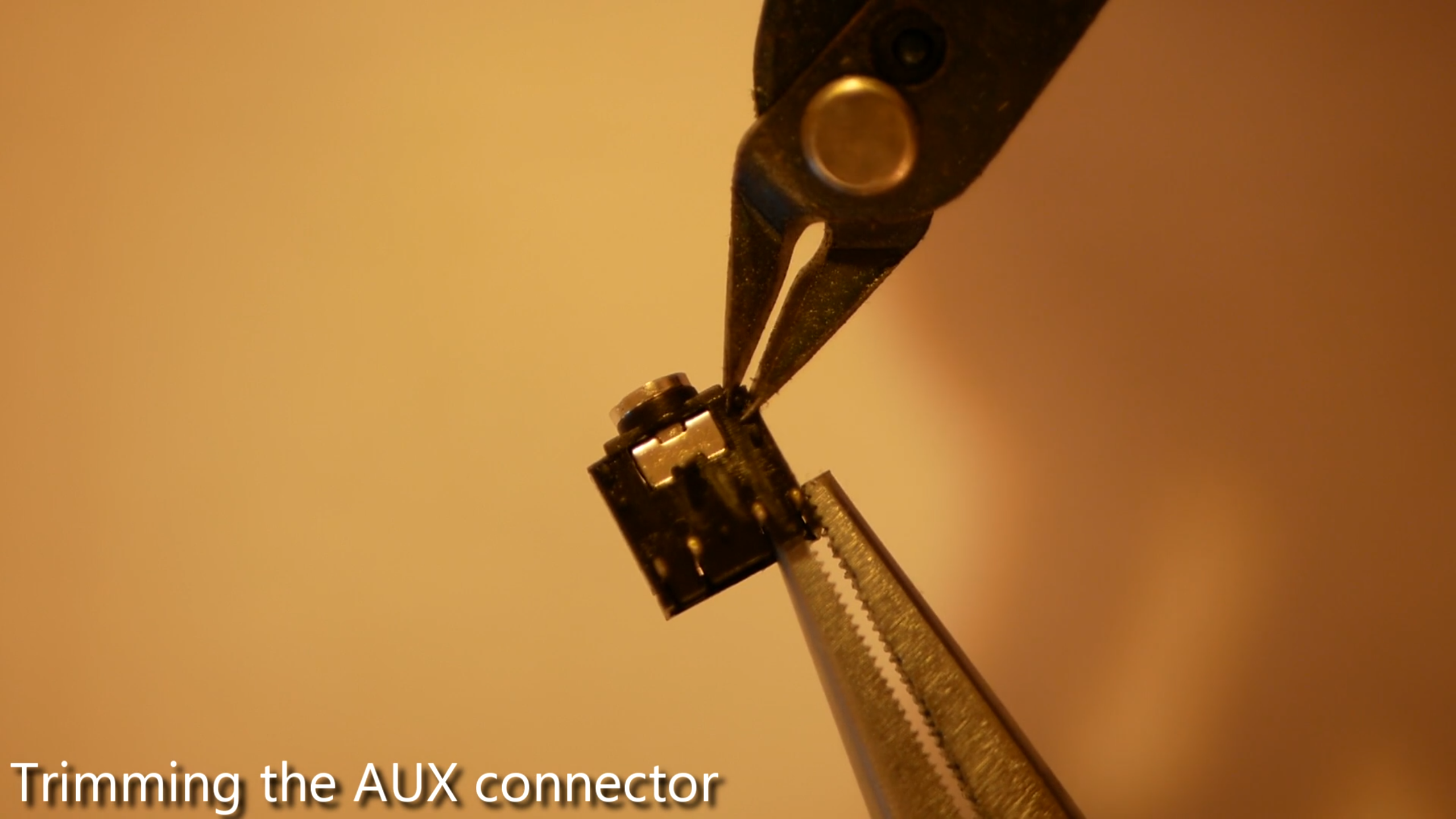
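
The following minimal C++ sketch makes the idea of selecting phrases through eye movements “within certain timeframes and directions” more concrete. It shows one possible addressing scheme and is not the project's actual firmware. Everything in it is an assumption made for illustration: the four gaze directions, the 300 to 2000 ms hold window, and the split of the 128 phrases into 8 profiles of 16 phrases, where each phrase is selected by two consecutive gestures. The names `Gaze`, `GazeEvent`, `isDeliberate` and `phraseIndex` are hypothetical.

```cpp
#include <stdint.h>

// Hypothetical gaze directions an eye sensor might report (assumption).
enum class Gaze : uint8_t { Left = 0, Right = 1, Up = 2, Down = 3 };

// Hypothetical timing window: a gaze counts as a deliberate gesture only if it
// is held for at least kMinHoldMs and at most kMaxHoldMs (illustrative values).
constexpr uint32_t kMinHoldMs = 300;
constexpr uint32_t kMaxHoldMs = 2000;

// Assumed layout: 8 profiles x 16 phrases (4 directions x 4 directions) = 128.
constexpr int kProfiles = 8;
constexpr int kPhrasesPerProfile = 16;

// One detected gaze: its direction and how long it was held.
struct GazeEvent {
    Gaze direction;
    uint32_t holdMs;
};

// True if the gaze was held within the accepted timing window.
bool isDeliberate(const GazeEvent& e) {
    return e.holdMs >= kMinHoldMs && e.holdMs <= kMaxHoldMs;
}

// Maps two consecutive gestures in the active profile to a global phrase index
// in [0, 127]. Returns -1 if the profile is invalid or either gesture falls
// outside the timing window.
int phraseIndex(int profile, const GazeEvent& first, const GazeEvent& second) {
    if (profile < 0 || profile >= kProfiles) return -1;
    if (!isDeliberate(first) || !isDeliberate(second)) return -1;
    int local = static_cast<int>(first.direction) * 4 +
                static_cast<int>(second.direction);
    return profile * kPhrasesPerProfile + local;
}
```

Under these assumptions, looking right and then up while profile 1 is active would select phrase 22 (1 × 16 + 1 × 4 + 2). On an Arduino, the hold durations could be measured with `millis()`. The function returns -1 instead of using `std::optional` so that it stays usable on small boards where the C++ standard library may not be available.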

By following a simple step-by-step video tutorial, anyone can build Speak4Me for around $100, which is far below the cost of existing solutions. All product-related information, including the required materials and construction manuals, is provided on a central homepage.

We won the Finalist Award, endowed with $500, in Challenge 03 of the 2021 Hackaday Prize: Reimagine Supportive Tech (see “Winners of the Hackaday Prize Supportive Tech Challenge”).

Project GitHub page, including step-by-step video tutorials and the no-code customization tool: https://speak4me.github.io/speak4me/

1.1 Motivation

The UN has defined 17 Sustainable Development Goals (SDGs), which all UN member states adopted in 2015. The main purpose of the SDGs is to create a shared blueprint for greater prosperity for people and the environment by 2030 (cf. UN, 2015). Speak4Me embraces SDG 10, which aims to reduce inequalities within and among countries, especially in poorer regions. Within the scope of this SDG, our solution can bring value to people with speech-related disabilities by giving them a way to communicate. Worldwide, over 30 million people are mute and therefore unable to communicate using their voice (cf. ASHA, 2018).

Our main focus is on patients with serious mental and/or physical disabilities who are not only unable to speak but also unable to communicate using gestures or other means. This includes patients with ALS, apraxia and other degenerative diseases that lead to a gradual loss of control over body functions. It also includes patients with spinal damage that makes it impossible to communicate via body language or other means. Birth defects, damage to the vocal cords, accidents that damage relevant organs, and many other conditions can also lead to muteness.

Physical muteness is rarely an isolated condition. Most commonly, it results from other underlying conditions such as deaf-muteness, which is the most common reason people are unable to communicate verbally (cf. Destatis 2019).

Speak4Me could also help patients with temporary conditions. In Germany alone, over 250,000 people suffer a stroke every year (cf. Destatis 2019). During recovery, our solution can help severely affected patients communicate with their environment, which might otherwise be impossible. Other nursing cases, such as patients with locked-in syndrome, could also benefit, since only control of the eyes is required.

1.2 Objectives

Speak4Me aims to provide an affordable and customizable device that is easy to build and use, helping people with disabilities communicate with their environment. Speech synthesizers and speech computers exist, but they are very expensive. Depending on socio-economic background, including the country of residence, this can make them unaffordable. Our target is a total cost below $100, so that we can reach as many affected people around the world as possible. By providing blueprints and the code base of the entire solution, we want to encourage others to build upon our work and improve or adapt it.

1.3 Background

Our entire solution is based on the open Arduino platform, which is built around standardized hardware and a simple programming language. Previous projects based on the Arduino platform have shown promising results...

Malte

Matej Nogić

Shu Takahashi

Debargha Ganguly

Facundo Noya

The project aims to address one of the major challenges in the healthcare industry: communication between caregivers and the people who need their help. The wearable device will assist caregivers during home visits by translating eye movements into text or speech, depending on the person's preference.