The goal here is to make this hardware-assisted virtualization platform as easy to use as its bare-metal counterpart. In other words, the virtualization should be completely transparent to the end user. They should be "in the Matrix": in a virtual world, unaware of the virtual nature of their constructed environment, yet endowed with "powers" that allow all kinds of amazing feats formerly thought impossible.

The Xbox One is completely "in the Matrix", with all management removed. It boots two VMs and that's it; if they break, it's back to the Day 1 snapshot for you. If you're going to be in the "Matrix", you want to be Neo...

But datacenter-level management comes with the expectation that you at least have your head wrapped around the whole virtualization thing.

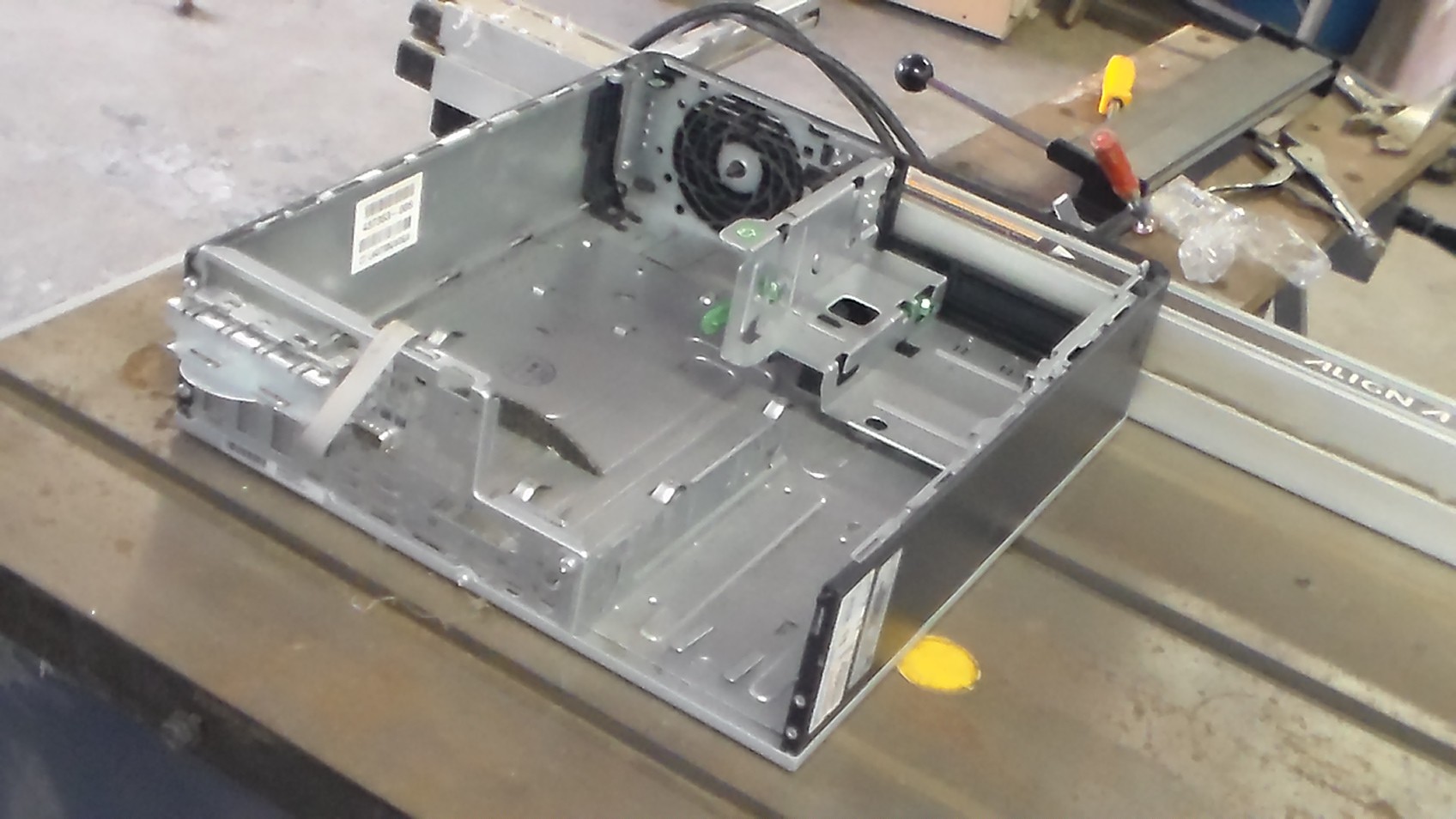

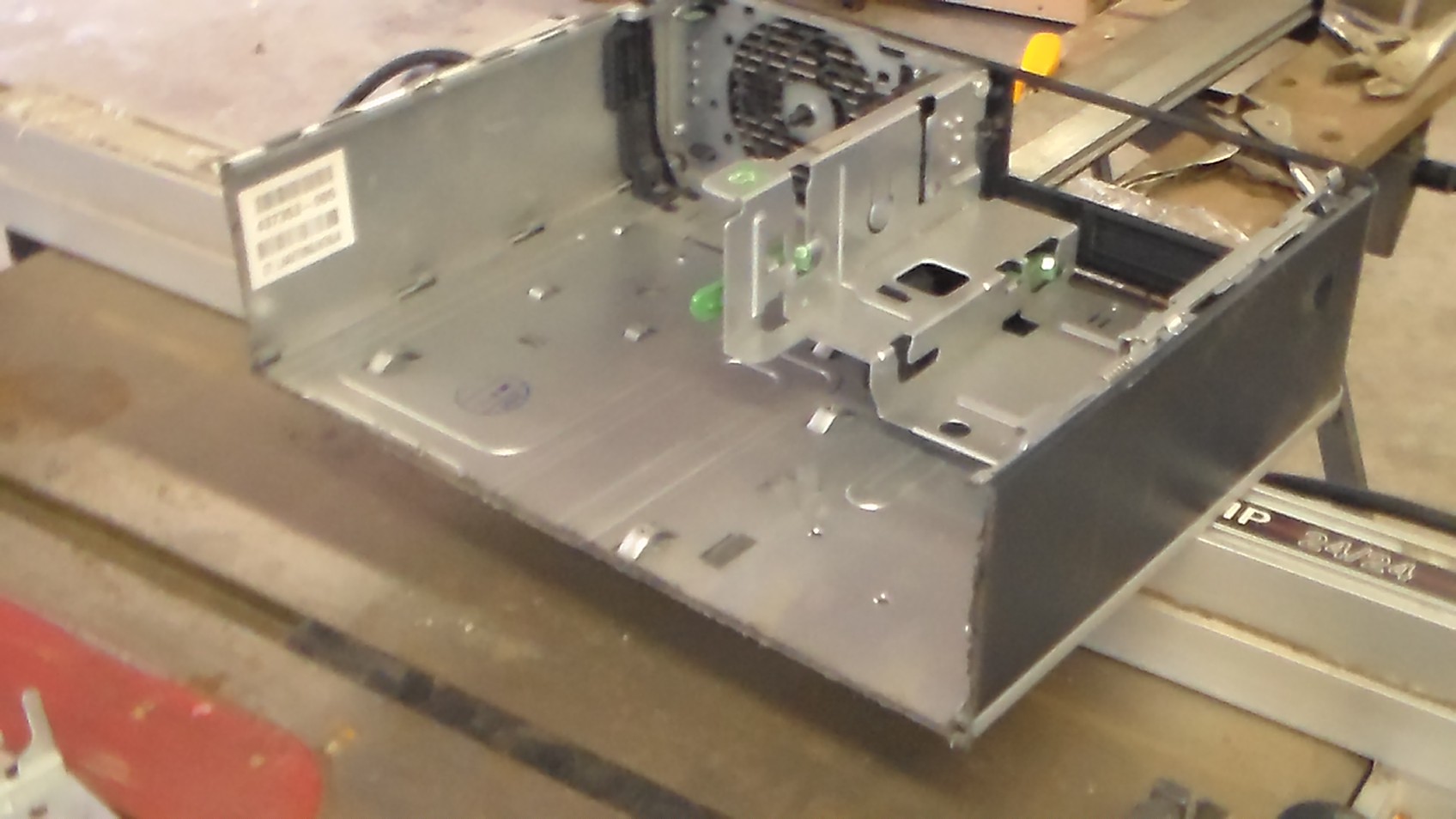

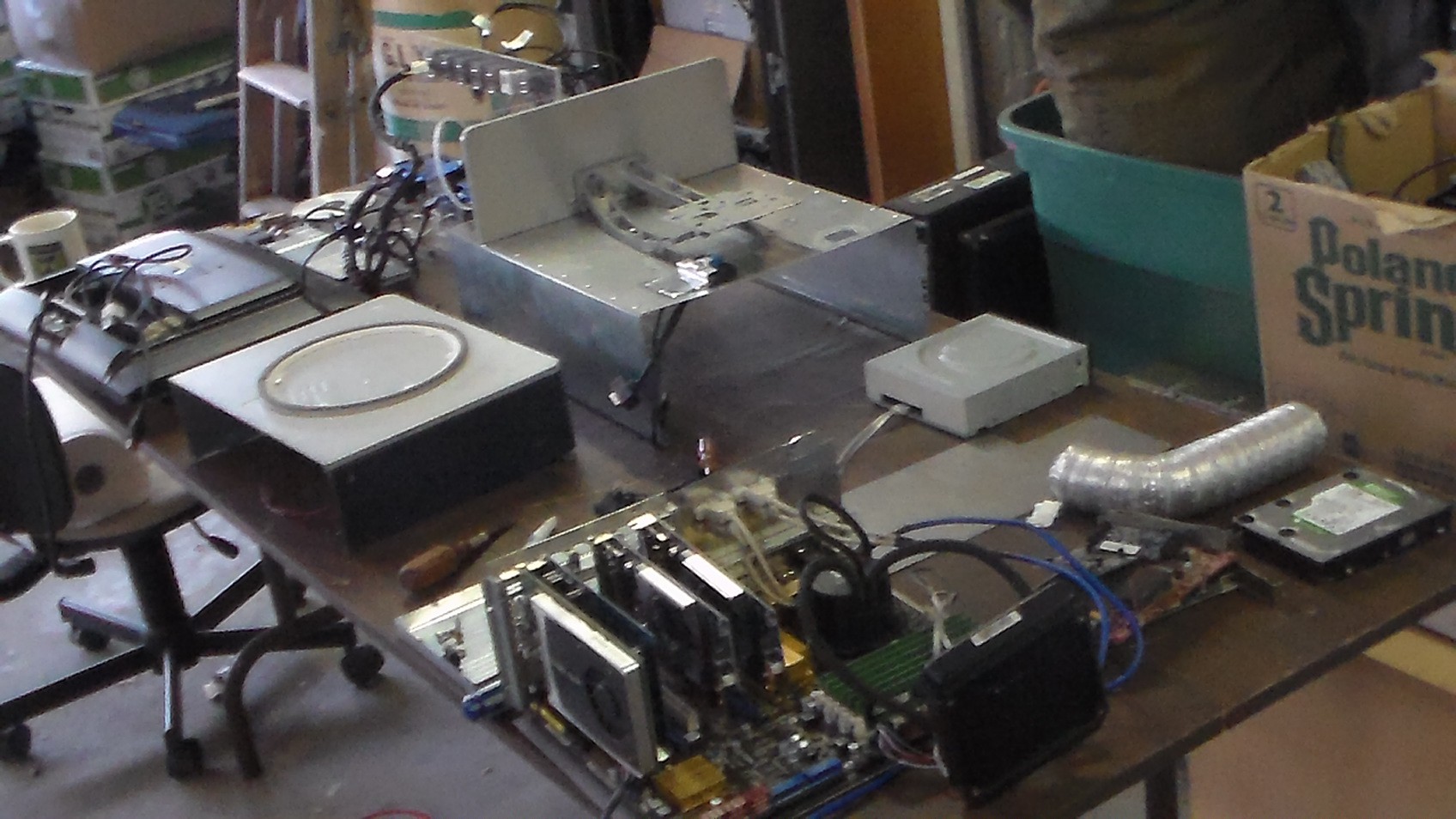

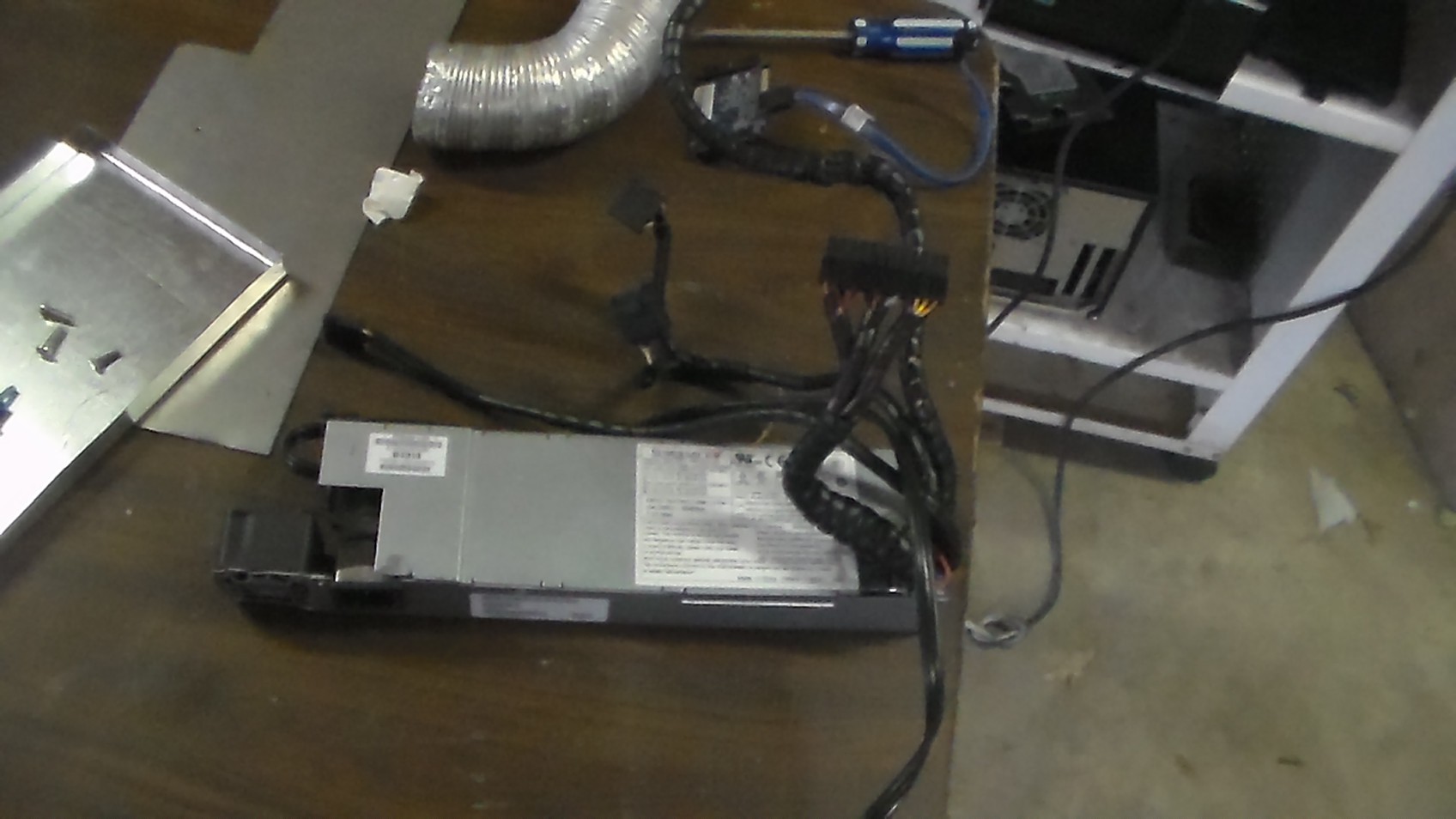

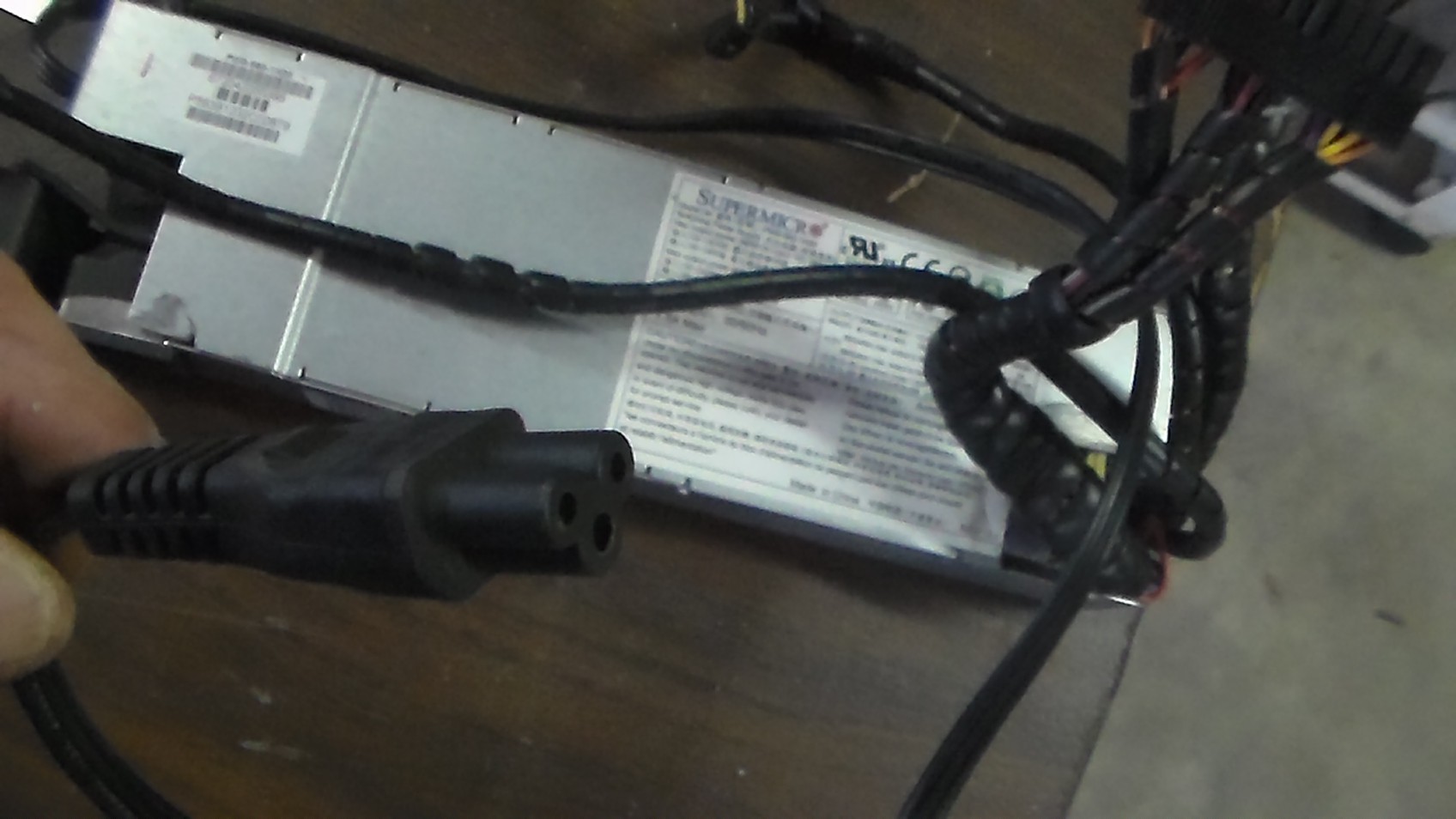

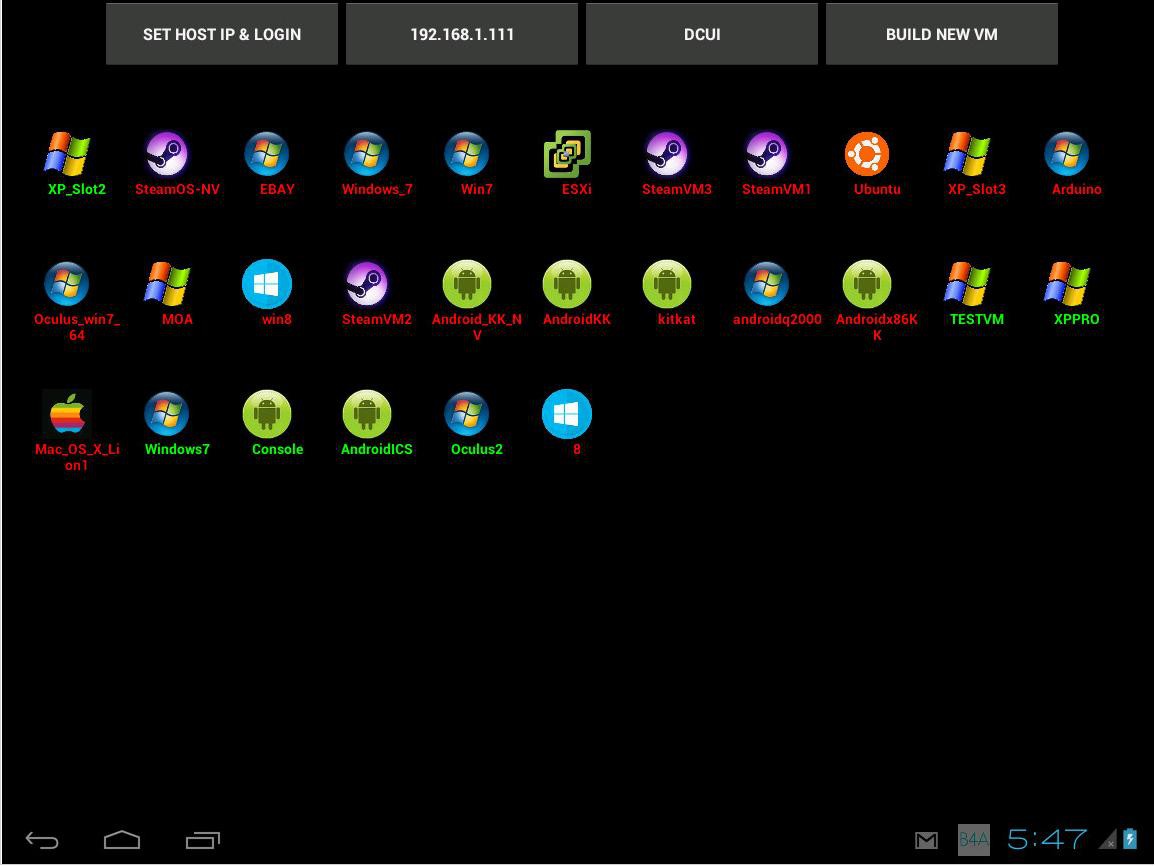

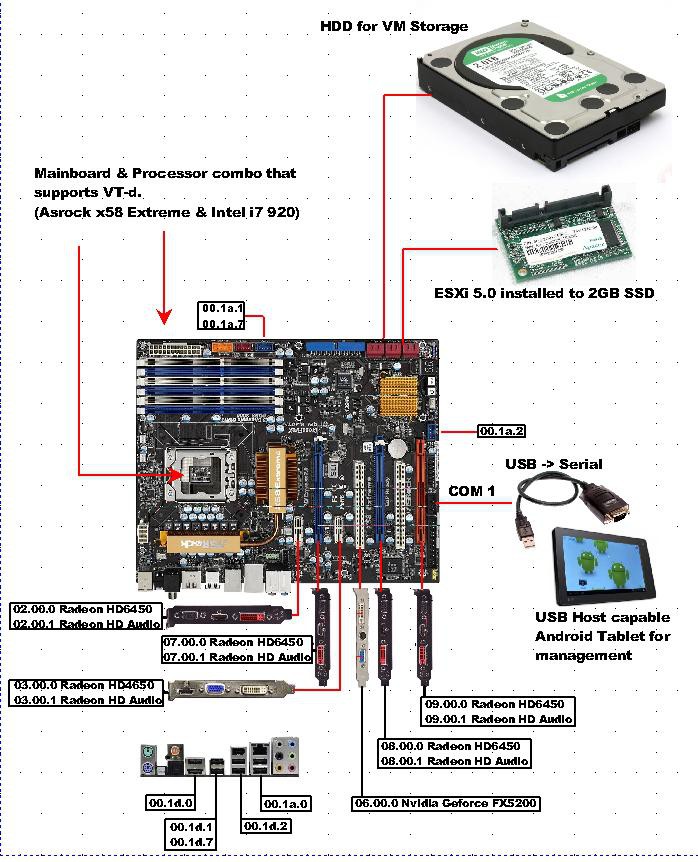

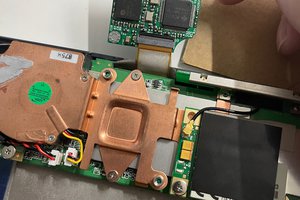

The HYDRA attempts to strike a balance. ESXi boots on the primary GPU, which gets "stolen" for passthrough during boot, at which point the ESXi screen freezes. The VM assigned to that GPU then boots and takes over the screen. Starting other VMs or switching the OS on the primary display still needs some kind of interface...

The Hydra was originally developed 1.5 years ago as a digital menu board system for a sub shop. Since then I have used this system as my primary PC(s), and two more are deployed for other people to use. This let me gather feedback, explore alternative, user-friendly methods for the tasks a typical end user may encounter, and discover all of the system's capabilities.

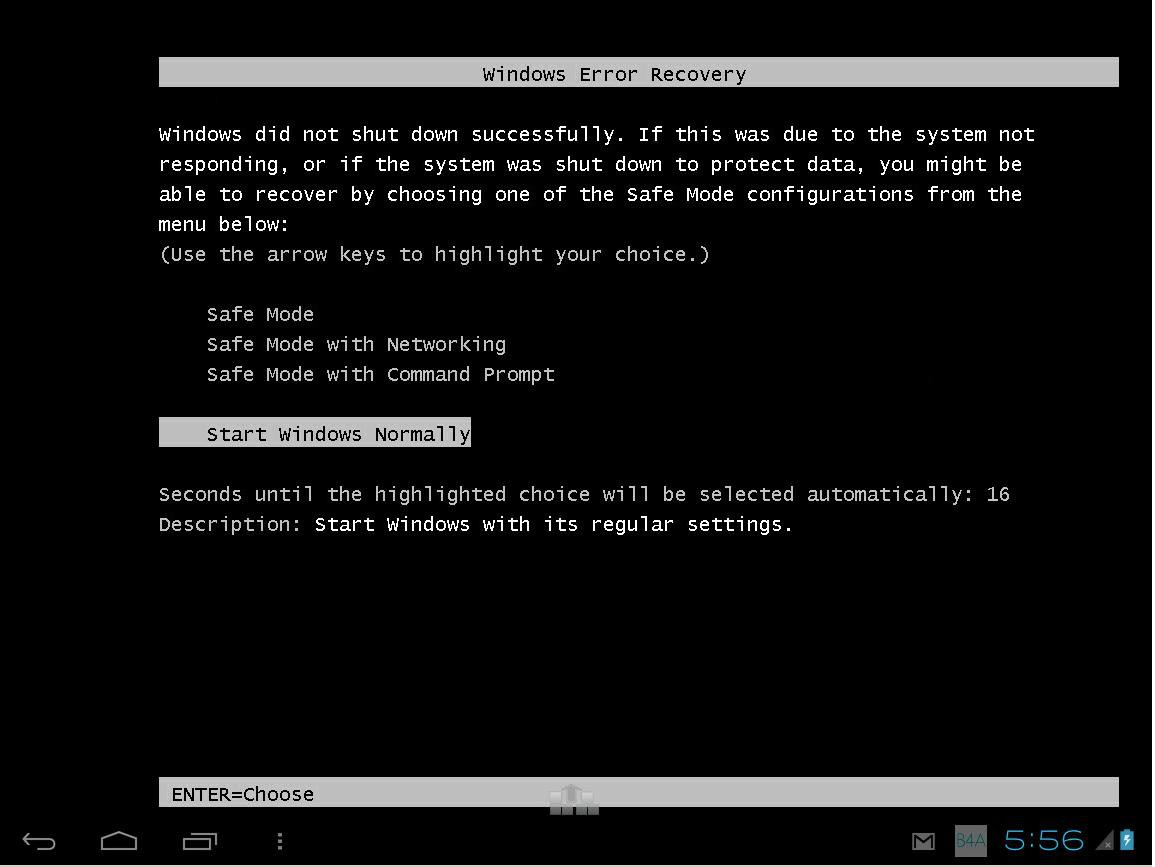

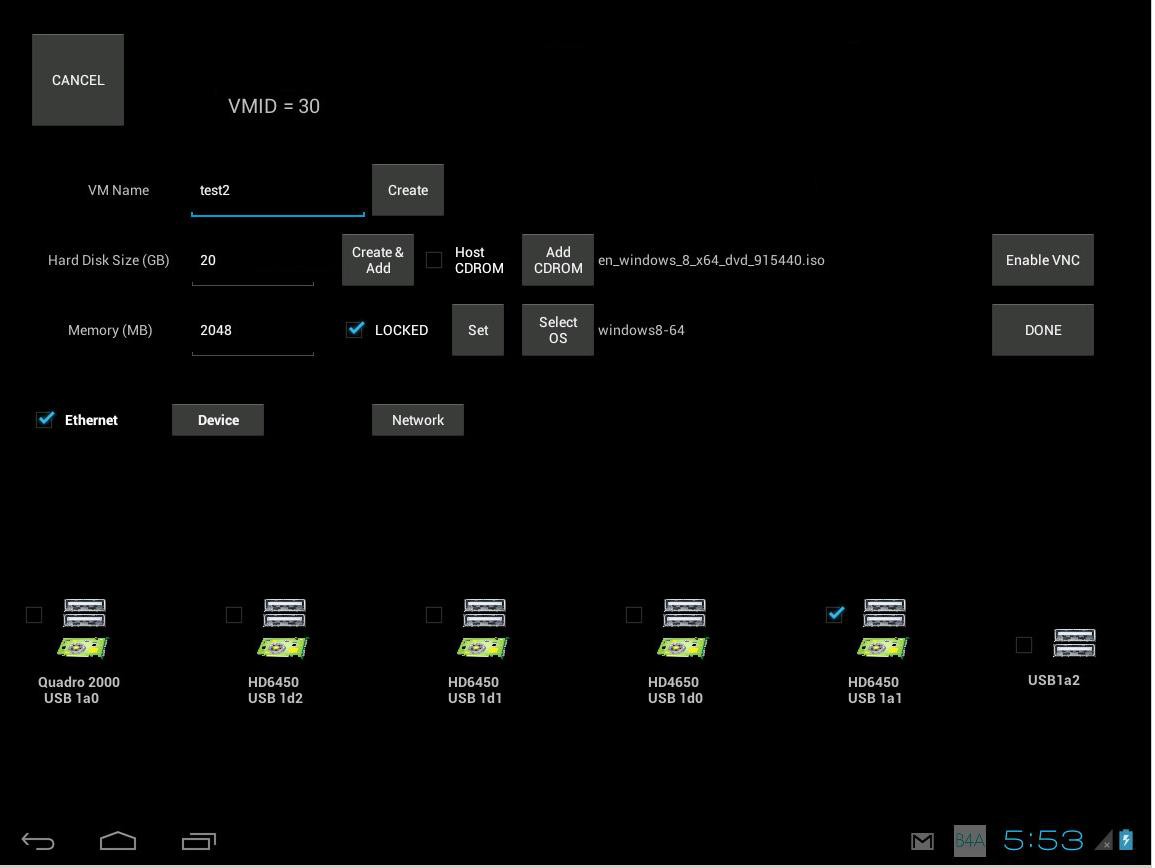

First try: I installed a VM with only one USB device passed through, for a Wi-Fi card, and created a softAP. I wrote an Android app and got a cheap Android tablet. This allowed power and snapshot operations, and it even showed the VMware VGA console during boot over VNC, so if a VM was stuck in "Startup Repair" you would see it on the tablet. However, the wireless link proved unreliable.

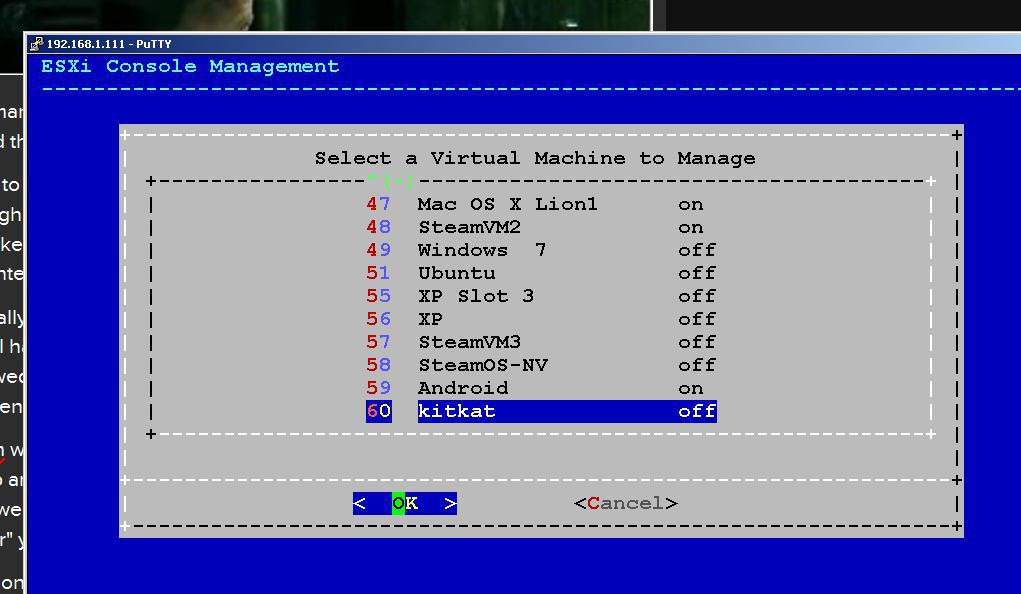

Second try: ESXi ships with Python, so I wrote a menu system that can be accessed from the server's console, over SSH, or over serial, and packaged it as an installable VIB so the menu is easy to install. This works very well: power, snapshot, and even networking settings are available. But the end user is required to use a text menu at the console. That should be OK, but as it turns out, it's NOT.
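To give a rough idea of how a console menu like this could drive power and snapshot operations, here is a minimal Python sketch. It is an assumption, not the original menu: it shells out to ESXi's `vim-cmd` tool (`vmsvc/getallvms`, `vmsvc/power.*`, `vmsvc/snapshot.create`), the helper names (`parse_getallvms`, `power_cmd`, `snapshot_cmd`, `menu`) are hypothetical, and the parser assumes the usual tabular `getallvms` layout where each VM name is followed by a `[datastore]` path.

```python
import re
import subprocess

VIM_CMD = "vim-cmd"  # ESXi host-management CLI; assumed to be on PATH in the ESXi shell

# Assumed layout of a `vim-cmd vmsvc/getallvms` data row: numeric Vmid, then the
# display name (which may contain spaces), then the "[datastore] path.vmx" column.
ROW_RE = re.compile(r"^(\d+)\s+(.*?)\s+\[")


def parse_getallvms(output):
    """Return a list of (vmid, name) tuples; the header and other lines are skipped."""
    vms = []
    for line in output.splitlines():
        m = ROW_RE.match(line.strip())
        if m:
            vms.append((int(m.group(1)), m.group(2)))
    return vms


def power_cmd(vmid, action):
    """Build the argv for a power operation: 'on', 'off', 'reset' or 'getstate'."""
    if action not in ("on", "off", "reset", "getstate"):
        raise ValueError("unsupported power action: %s" % action)
    return [VIM_CMD, "vmsvc/power.%s" % action, str(vmid)]


def snapshot_cmd(vmid, name):
    """Build the argv that takes a snapshot with the given name."""
    return [VIM_CMD, "vmsvc/snapshot.create", str(vmid), name]


def run(argv):
    """Execute a vim-cmd invocation on the host and return its text output."""
    return subprocess.check_output(argv).decode("utf-8", "replace")


def menu():
    """Tiny numbered text menu; reads the same on the console, over SSH or on serial."""
    vms = parse_getallvms(run([VIM_CMD, "vmsvc/getallvms"]))
    for i, (vmid, name) in enumerate(vms, 1):
        print("%d) %s" % (i, name))
    vmid = vms[int(input("VM number: ")) - 1][0]
    action = input("[1] power on  [2] power off  [3] snapshot: ").strip()
    if action == "1":
        print(run(power_cmd(vmid, "on")))
    elif action == "2":
        print(run(power_cmd(vmid, "off")))
    elif action == "3":
        print(run(snapshot_cmd(vmid, "menu-snapshot")))
```

On the host, calling `menu()` gives the same numbered prompt whether you reach it from the local console, an SSH session or the serial port. Keeping the command building separate from `run()` makes the menu logic easy to check on a machine that has no `vim-cmd`.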

I once accidentally assigned the primary GPU for passthrough on my system, and the ESXi screen froze halfway through booting. That card was an HP Riloe II, and it wouldn't pass through. I then tried an NVIDIA PCI card and could actually assign it to a VM.

Third try: I built a management VM that includes all the tools, simple and complex, added the softAP, and set it to autostart. This works very well; it just wastes a GPU...

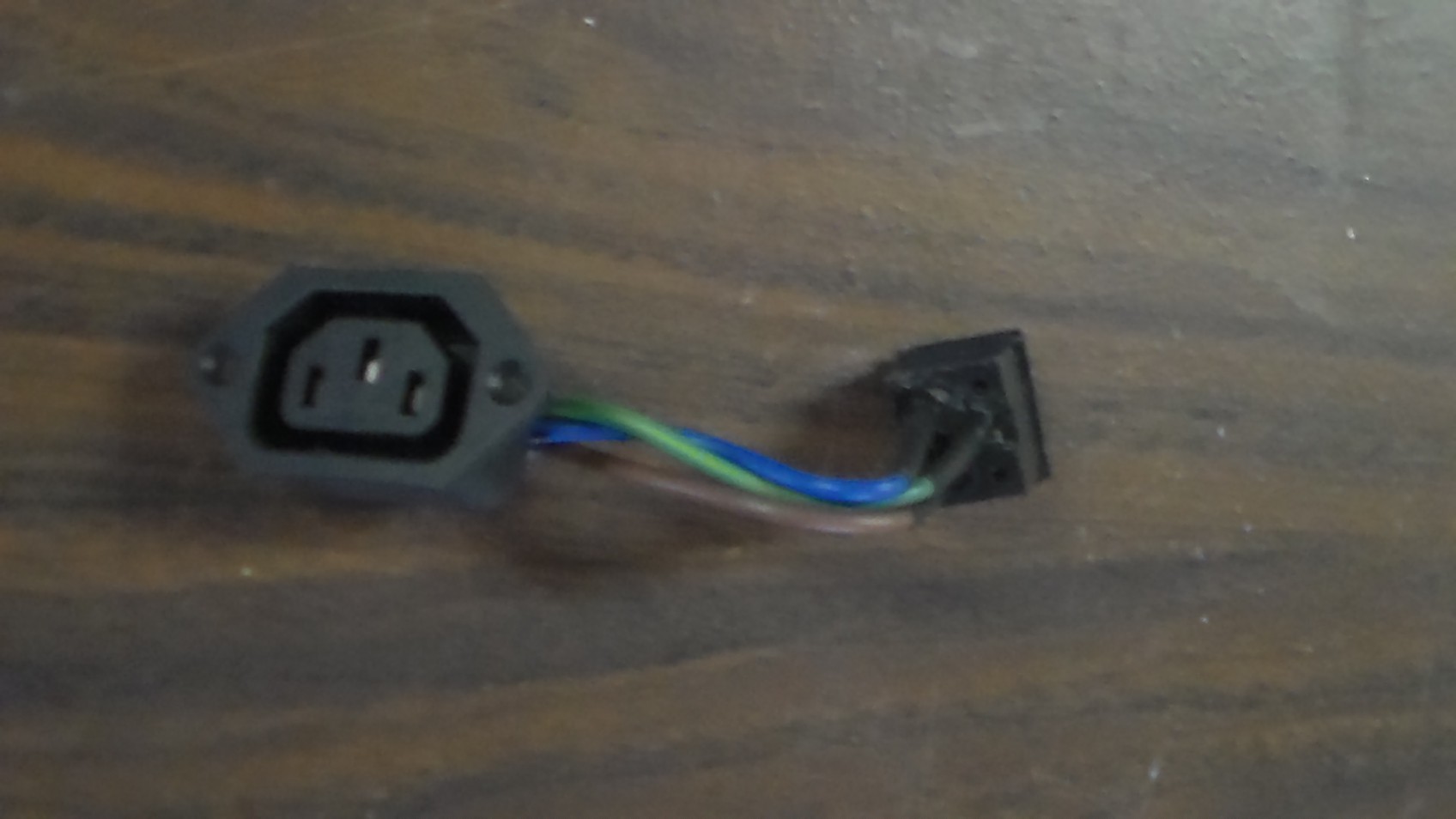

Redirecting the console to serial seems to be the answer. A microcontroller-based serial console could handle everything needed, and maybe perform other functions as well, such as HDMI KVM switching.

Current management interface: I redirected TTY2 (Tech Support Mode) to COM1. Now all six GPUs are available for passthrough. Added bonus: module loading is shown on the terminal during boot. The Python menu will run from a laptop with PuTTY, or even a Palm III. But I can't really require a laptop to be attached for management, and there are only so many Palm IIIs out there. Under development is a serial terminal menu device based on an ATmega328 with a 4x20 LCD, very similar to:

http://hackaday.com/2008/05/29/how-to-super-simple-serial-terminal/

However, I am using other libraries to get the type of menu I want and to support a jog wheel rather than buttons. It will show four VMs with their power status at a time, highlight your selection, and have indicators for more entries above or below. Only power and snapshot operations will be available, due to the limited space. The unchangeable management VM will head the list and allow full management capabilities. But for daily operations, the 4-line LCD will be an interface end users can deal with.
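The scrolling behaviour described above (four VMs visible at once, a highlighted selection, indicators when entries are hidden above or below) boils down to keeping a four-line window around the selected index. The real firmware would be C on the ATmega328; what follows is only a host-side Python model of that logic, with assumed conventions that are not from the original project: `>` marks the selection, `^`/`v` in the last column flag hidden entries, ` ON`/`OFF` shows power state, and each jog-wheel detent arrives as +1 or -1. All function names are hypothetical.

```python
LCD_ROWS = 4   # 4x20 character LCD
LCD_COLS = 20


def move_selection(selected, delta, count):
    """Apply jog-wheel detents (+1 = down, -1 = up), clamping at the ends of the list."""
    return max(0, min(count - 1, selected + delta))


def scroll_top(top, selected, rows=LCD_ROWS):
    """Scroll the window only as far as needed to keep the selection visible."""
    if selected < top:
        return selected
    if selected >= top + rows:
        return selected - rows + 1
    return top


def render(vms, selected, top, rows=LCD_ROWS, cols=LCD_COLS):
    """Return exactly `rows` strings of `cols` characters for the window at `top`.

    vms is a list of (name, powered_on) pairs. Column 0 holds the cursor, the
    name is truncated/padded to fit, then a 3-character power state, and the
    final column shows '^' or 'v' when entries are hidden above or below.
    """
    lines = []
    for row in range(rows):
        i = top + row
        if i >= len(vms):
            lines.append(" " * cols)  # blank line past the end of the list
            continue
        name, on = vms[i]
        cursor = ">" if i == selected else " "
        state = " ON" if on else "OFF"
        marker = " "
        if row == 0 and top > 0:
            marker = "^"
        elif row == rows - 1 and top + rows < len(vms):
            marker = "v"
        width = cols - 1 - len(state) - 1  # columns left for the VM name
        lines.append(cursor + name[:width].ljust(width) + state + marker)
    return lines
```

With the management VM at index 0, turning the wheel four detents down from the top moves the selection to the fifth VM and scrolls the window by one line, so the `^` indicator appears on the first row. Scrolling only when the selection leaves the window keeps the display from jumping on every detent.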

Like this:

The HYDRA can be configured with six video cards, or with only one. The next prototype will be a mini-ITX board with two GPUs. So a small...

eric

Ben Combee

Wenting Zhang

naguirre

Hey Eric,

Nice work! I found this German article that mentions some difficulties, and I wonder whether you experienced the same issues and whether you were able to resolve them:

https://struband.net/esxi-6-0-gpu-passthrough/

My translation:

The VM with GPU passthrough only starts properly after an ESXi host restart. This means the VM can't be shut down and started again afterwards, or simply restarted.

Monitor standby won't work either.

By the way, the ESXi Embedded Host Client might be of interest to you: https://labs.vmware.com/flings/esxi-embedded-host-client