Telepresence is really cool. Being able to have my mum, who lives some 7000 km away, look in the direction of one of my kids whilst we're at the dinner table is just priceless. Nevertheless, the available solutions are either expensive, require a lot of complex ML trickery, or need a remote control. Each of these solutions has its pros and cons; below I'll focus on why I didn't want any of them.

Telepresence robots

They're so cool. Put simply, I cannot afford one.

AI-driven solutions

There are really great projects out there that capture images or sound, analyse them using ML algorithms and trigger an action on the remote host. These solutions are fantastic, but they rely on a computer to do the heavy lifting. Some of them need to interface with platforms that sit outside our control, and from my POV they are hard to implement. Also, people don't always have a spare computer or Raspberry Pi to leave on the telepresence robot to digest the input.

Remote control

Personally, I think this is the worst of all the solutions. When calling someone on the phone, I need the robot to be operated remotely without resorting to another machine or another app on the phone. This is a must, since it's not so easy to ask someone to go fetch a laptop or a tablet to control the robot.

Also, it's hard to come by any APIs that would, for example, overlay remote control buttons on the WhatsApp screen.

The simplest solution

First, the constraints:

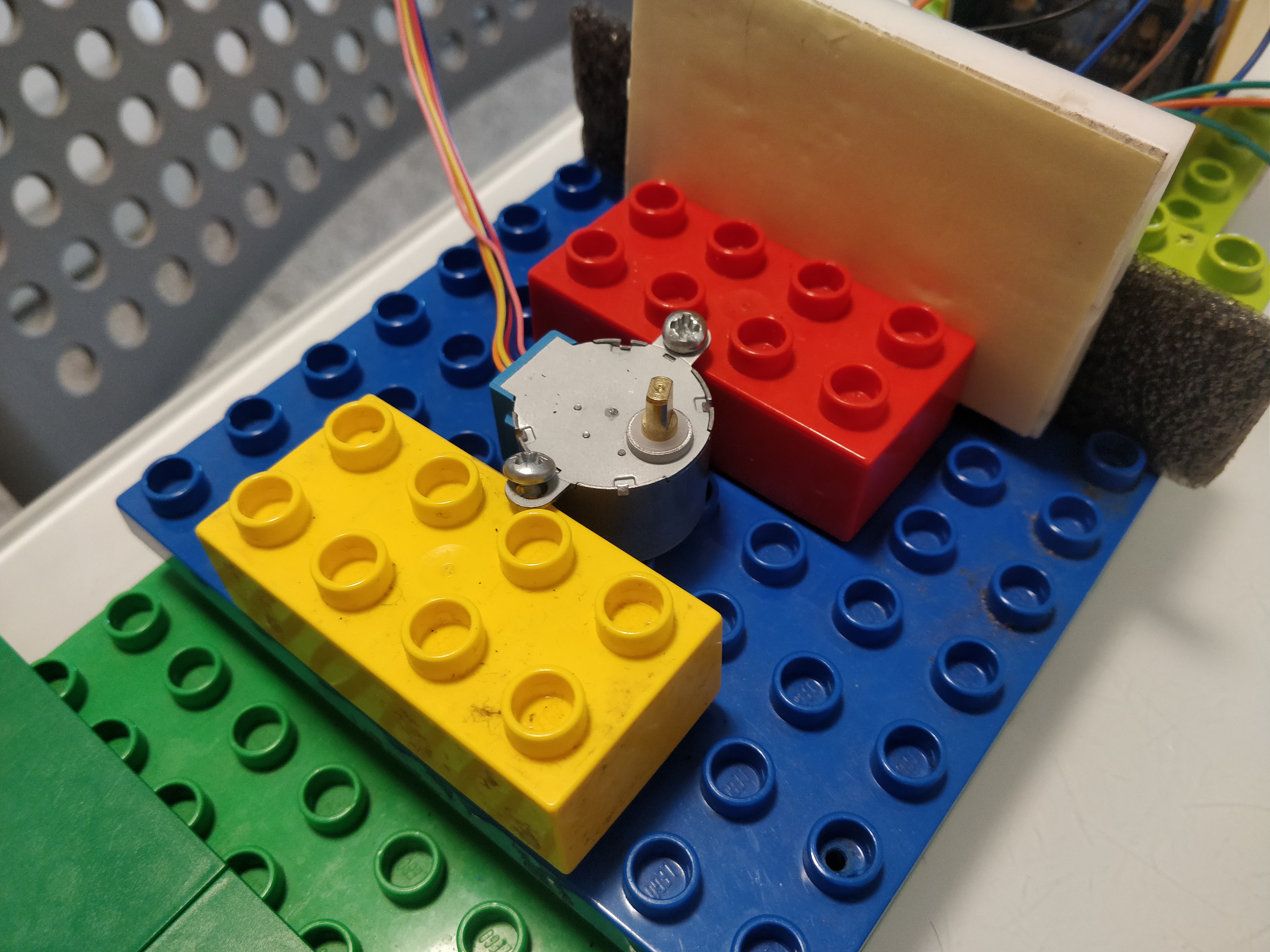

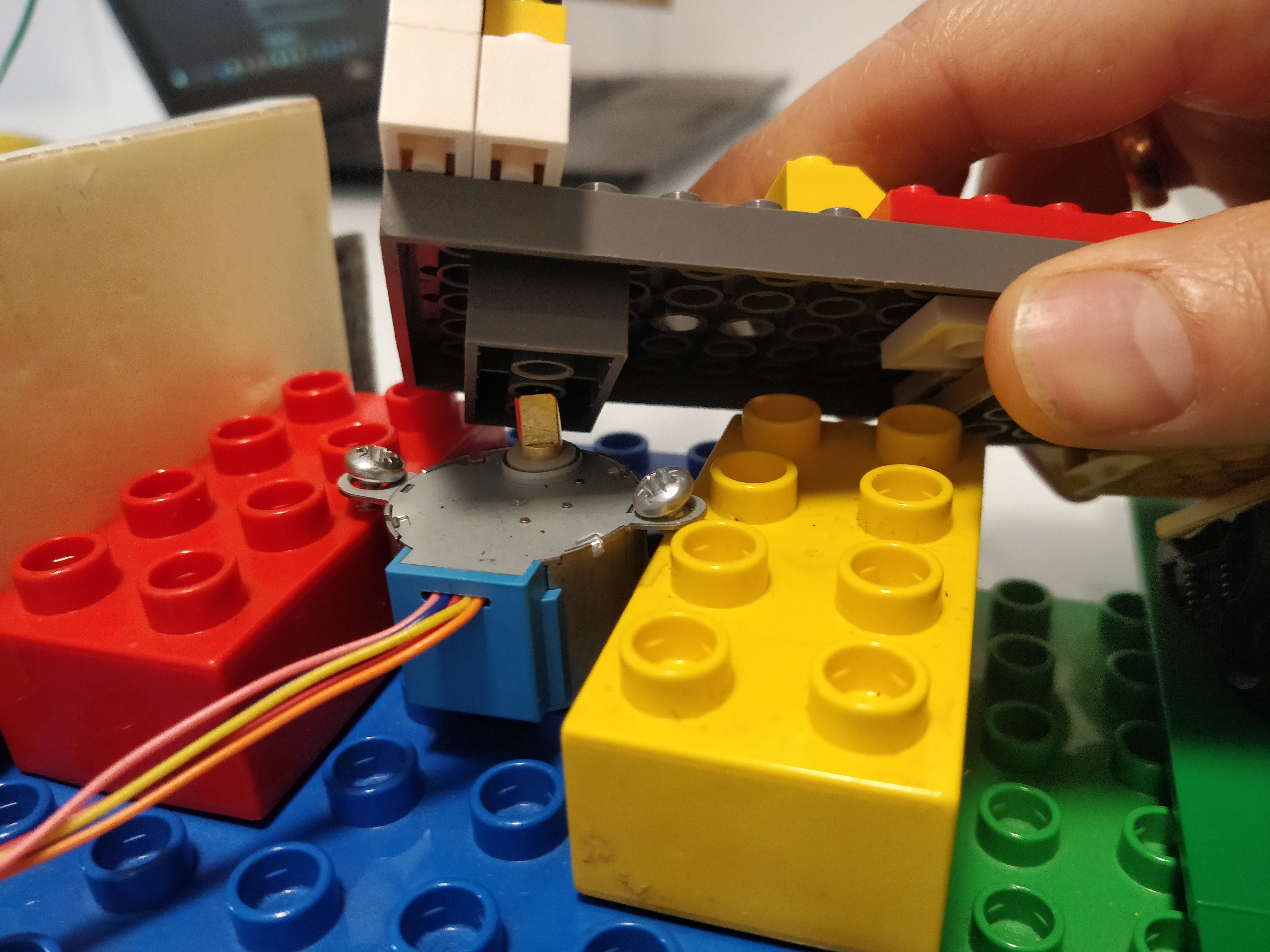

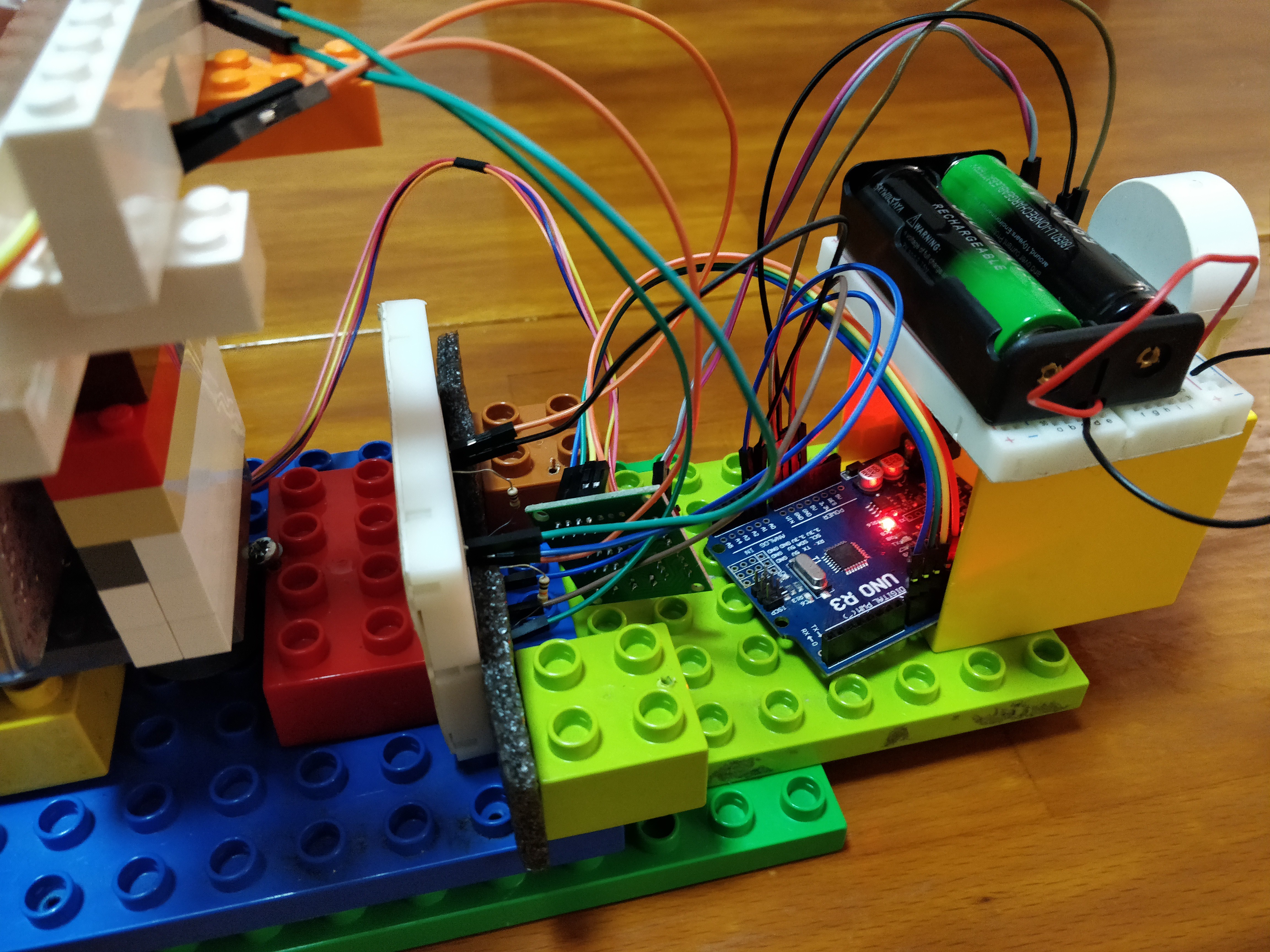

- I only have LDRs

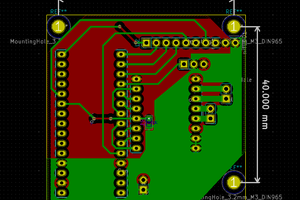

- A couple of Arduinos and a few NodeMCUs

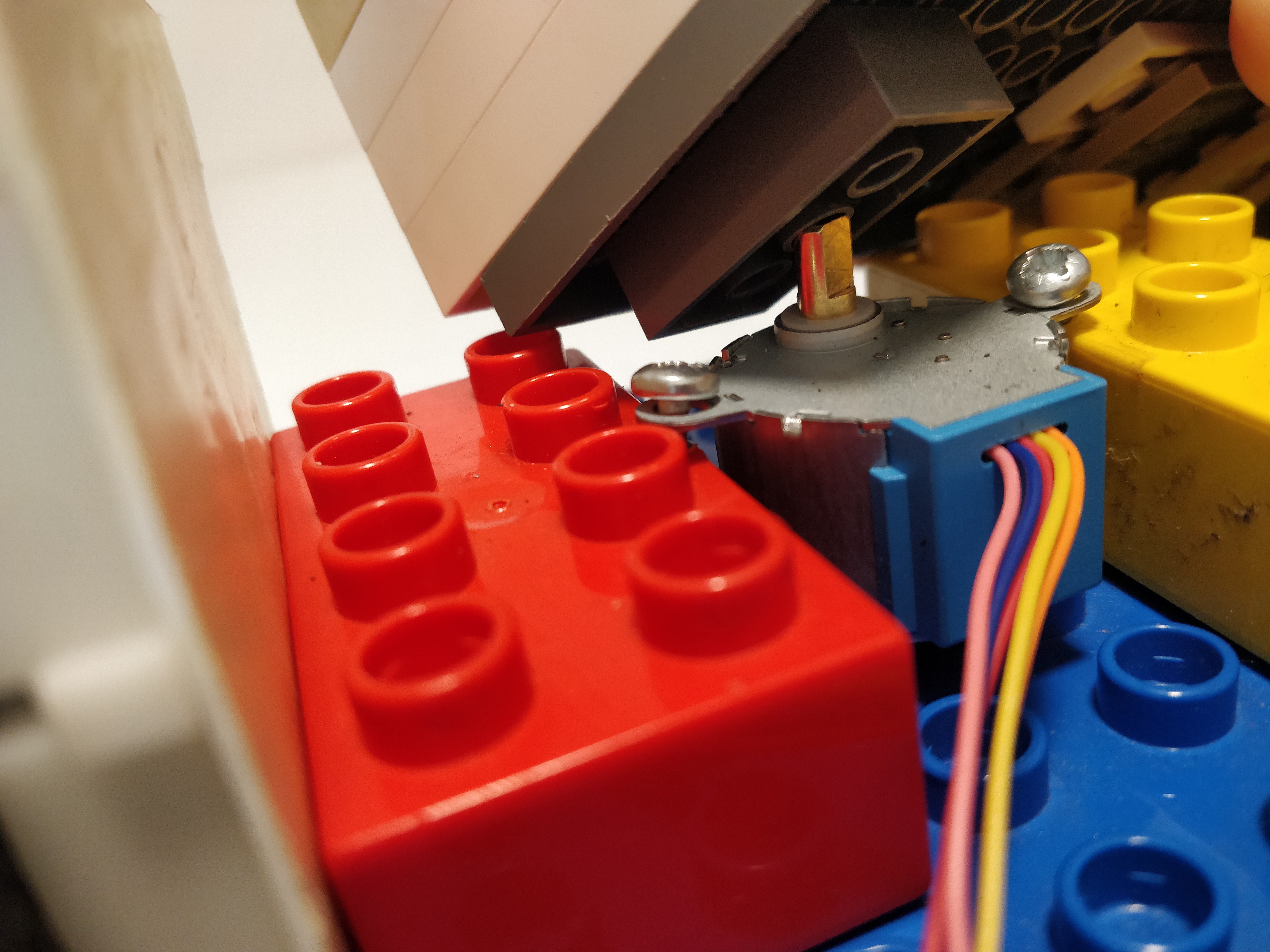

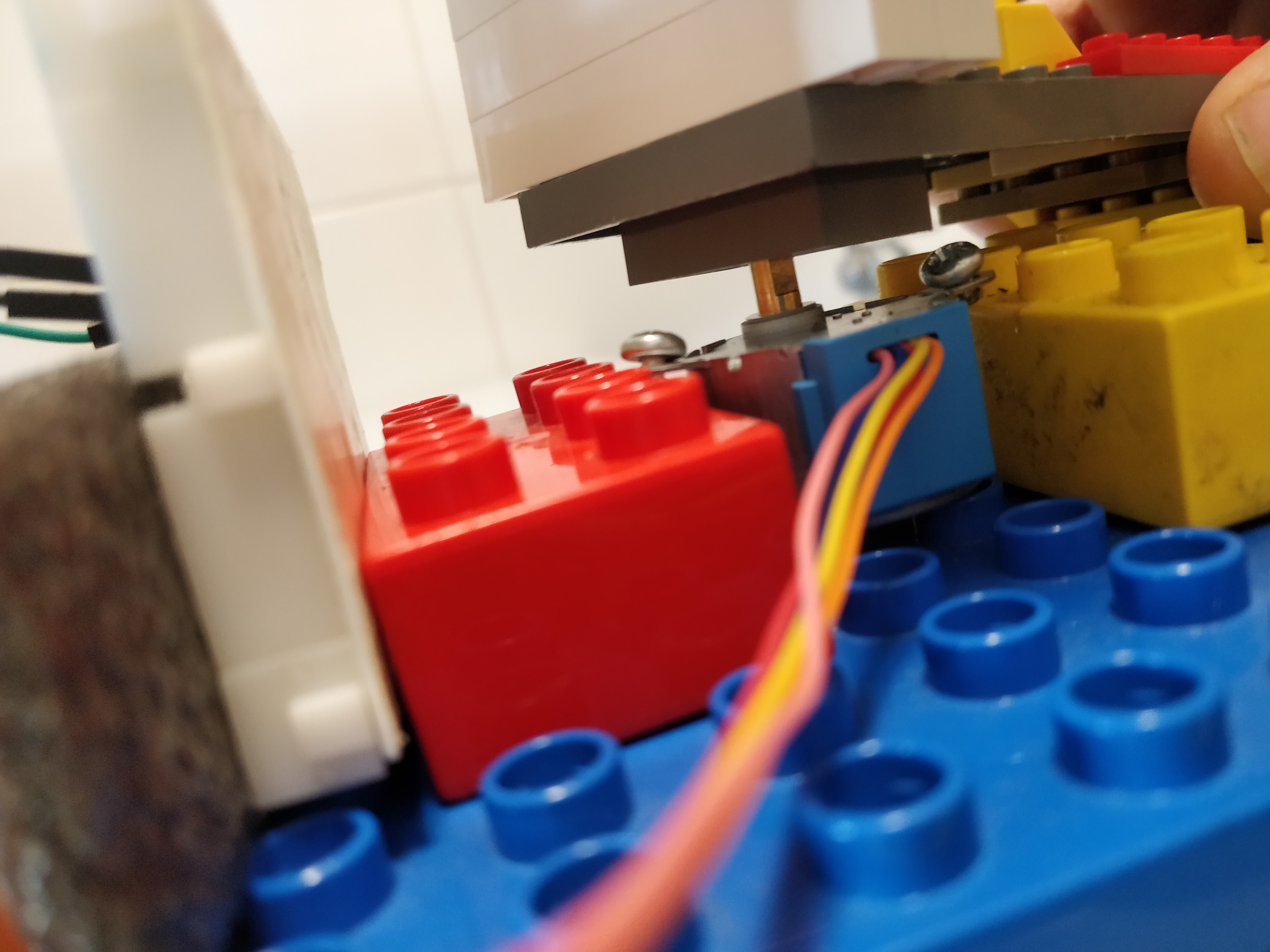

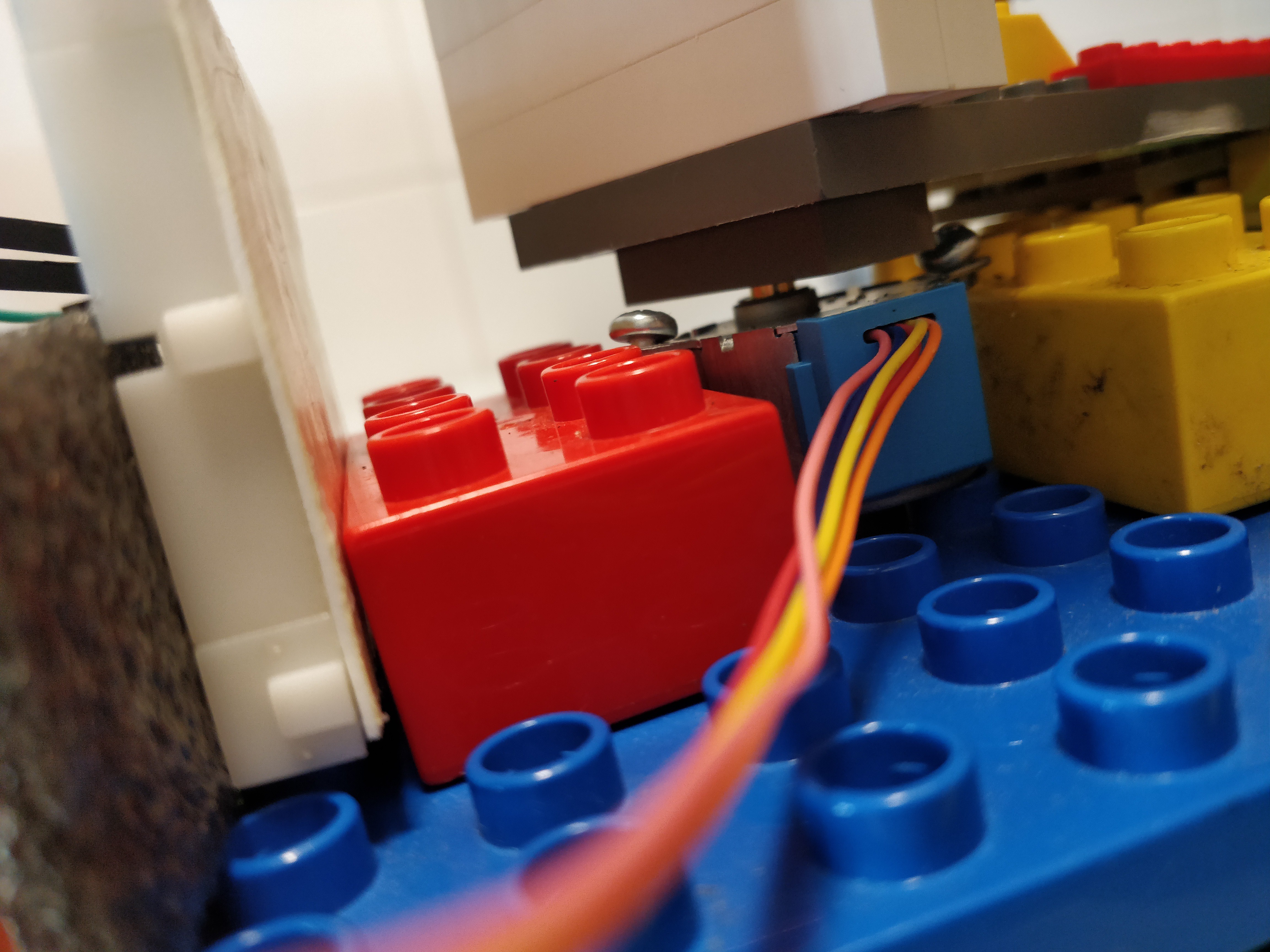

- I'm not very good with motors

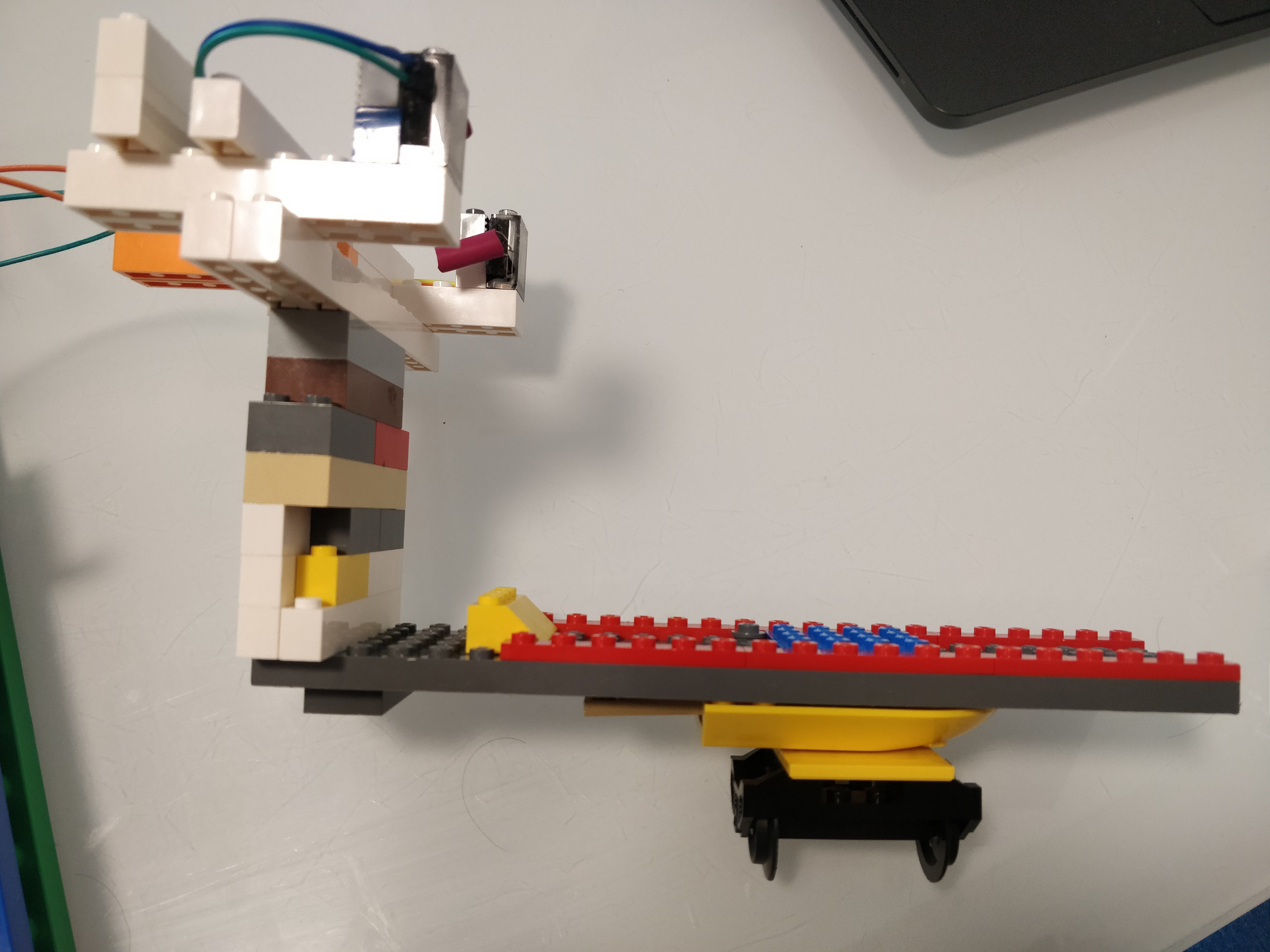

- I don't have a 3D printer

- I don't have a workshop

Following up on the robot project from a recent Hackaday blog post, I realised I could maybe control the robot using the image on the screen itself, just by looking at the brightness of a certain area of the screen. This idea came from a different project of mine that used LDRs to measure the brightness of a screen to recognise numbers.

The objective was to put two LDRs (photoresistors), one at each side of the screen, and read the brightness from each one wired in a voltage divider configuration.
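To make the voltage divider idea concrete, here is a minimal sketch of the arithmetic, not the actual firmware. The 10 kΩ fixed resistor, the 3.3 V supply, the 10-bit ADC and the choice of putting the LDR on the supply side are all assumptions of mine for illustration; the function names are hypothetical too.

```python
def divider_voltage(r_ldr_ohms, r_fixed_ohms=10_000.0, v_supply=3.3):
    """Voltage at the divider midpoint.

    Assumed wiring: supply -> LDR -> midpoint -> fixed resistor -> ground.
    An LDR's resistance drops as it gets more light, so a brighter
    screen gives a higher midpoint voltage with this wiring.
    """
    return v_supply * r_fixed_ohms / (r_ldr_ohms + r_fixed_ohms)


def adc_counts(voltage, v_ref=3.3, bits=10):
    """Convert a voltage to the raw count an ideal ADC would report.

    The 10-bit resolution and 3.3 V reference are assumptions.
    """
    full_scale = (1 << bits) - 1
    clamped = min(max(voltage, 0.0), v_ref)  # an ADC cannot read outside its range
    return int(clamped / v_ref * full_scale)


if __name__ == "__main__":
    # Illustrative resistances only, not measurements from the project.
    for label, r_ldr in (("dark edge", 100_000.0), ("bright edge", 2_000.0)):
        v = divider_voltage(r_ldr)
        print(f"{label}: {v:.2f} V -> {adc_counts(v)} counts")
```

With this wiring a brighter screen edge produces a higher reading; swapping the LDR and the fixed resistor would simply invert that relationship.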

If one edge of the phone's screen changes brightness, i.e. gets lighter or darker, for example when someone moves their head to the side, the phone turns in that direction. The result can be seen in the video below, with a dark background on one side and a lighter background on the other.
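The turning rule described above could be sketched roughly as follows. This is only an illustration under my own assumptions: the baseline readings, the threshold of 50 counts and the function name `turn_direction` are hypothetical and not taken from the project.

```python
def turn_direction(left, right, left_baseline, right_baseline, threshold=50):
    """Decide which way to turn from two brightness readings.

    Each reading is compared with a baseline taken when the caller
    was centred. The side whose brightness changed the most (lighter
    or darker) wins, provided the change exceeds the threshold.
    Returns "left", "right" or "hold".
    """
    left_change = abs(left - left_baseline)
    right_change = abs(right - right_baseline)

    # Ignore small flicker and sensor noise on both sides.
    if max(left_change, right_change) < threshold:
        return "hold"
    # Both edges changed by the same amount: no clear direction.
    if left_change == right_change:
        return "hold"
    return "left" if left_change > right_change else "right"


if __name__ == "__main__":
    # Caller leans towards the left edge, darkening it.
    print(turn_direction(left=300, right=500, left_baseline=500, right_baseline=500))
```

Taking the absolute change means the rule reacts to an edge getting either lighter or darker, as described above. It also means that any change in the background lighting on one side looks the same as the caller moving.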

This solution has its limitations, namely:

- The background has to be lighter than the caller, but I'm planning on improving upon this.

- The caller cannot move around. If the brightness in the background changes, then the phone will start moving.

- This version can only turn around the Z-axis, but I'm already thinking of ways to let it move from its spot.

I know, another tele-presence project, really? But this one is different.

Maximiliano Palay

adria.junyent-ferre

Myrijam

Juan Sandubete

that’s really neat.