-

Add-On Board: LiDAR

05/15/2022 at 17:16

The first add-on board for the Mini Cube Robot is here! It is a LiDAR board based around the VL53L5CX ToF sensor from STMicroelectronics. This ToF sensor has 8x8 separate ranging zones and a 45° by 45° FoV, giving it an angular resolution of 5.625° (45°/8) and a maximum range of 400 cm in low-light conditions. It also has a fully programmable I2C address, allowing multiple sensors to be connected to a single I2C bus.

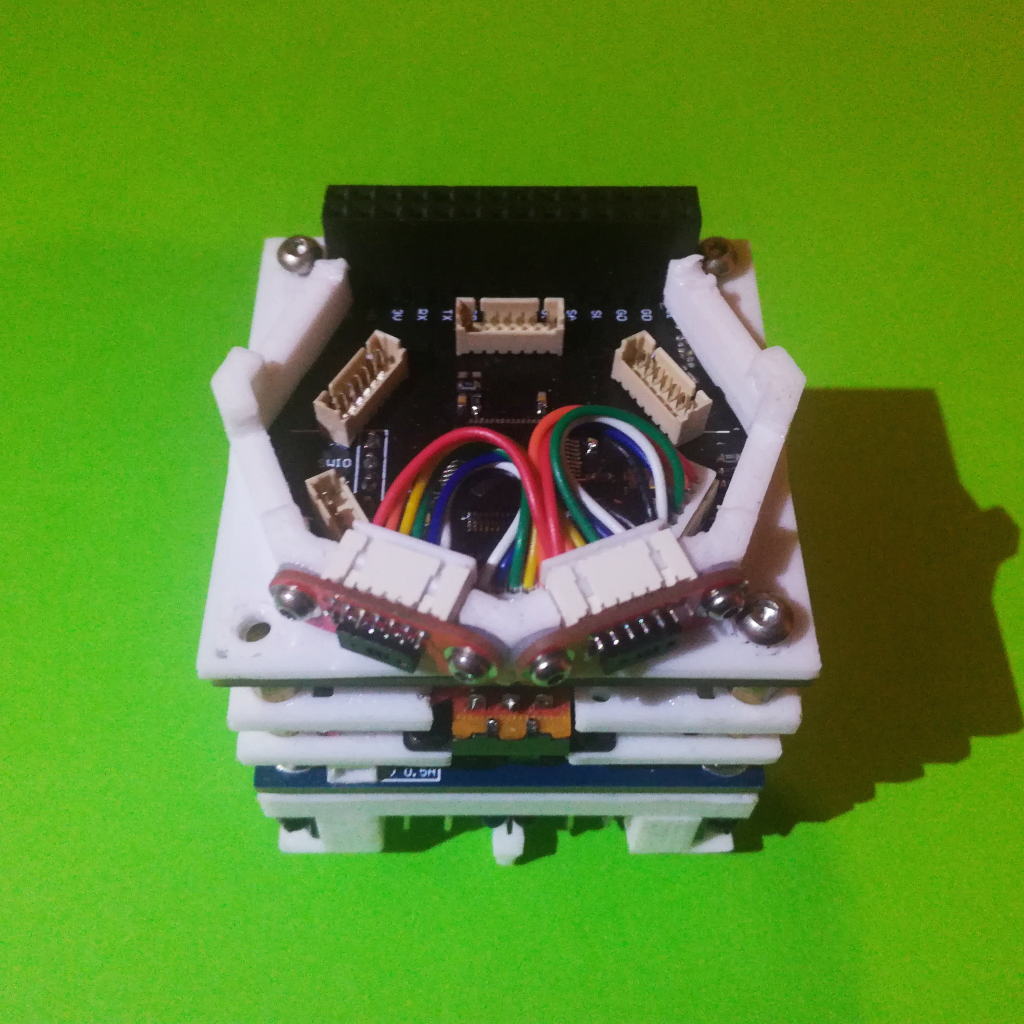

A simple breakout board for the VL53L5CX ToF sensor was designed, which holds the necessary decoupling capacitors and pull-up resistors. The module has a 1.25 mm pitch header footprint to which a 7-pin 1.25 mm JST connector can be soldered. Up to six of these modules are held in place, 45° apart, by a 3D printed holder and connected to the main board.

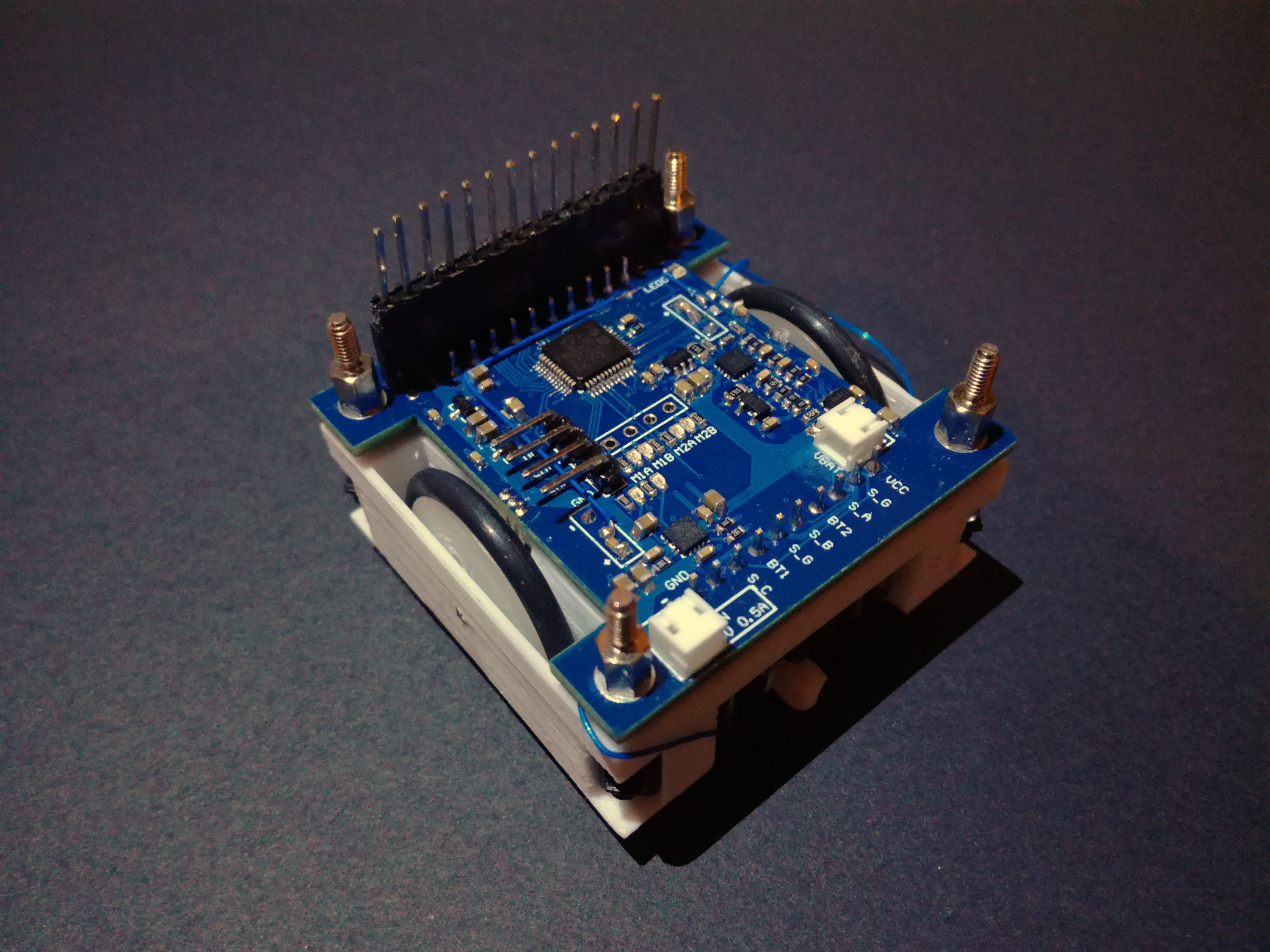

The main board uses an STM32F103RCT6 at its core; it powers and controls up to six VL53L5CX ToF sensors and acquires and aggregates their measurements. All sensors share a single I2C bus but have separate power-down and interrupt GPIO lines, which allows a unique I2C address to be programmed into each sensor at start-up. Below is a picture of the LiDAR add-on board, showing the main board with the 3D printed holder and two VL53L5CX sensor modules:

![]()
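The start-up address assignment mentioned above can be sketched as follows. This is not the project's firmware: `set_lpn` and `write_address` are hypothetical hooks standing in for the real GPIO and I2C drivers, the default address 0x52 (8-bit form) and the new address scheme are assumptions, and the small simulated bus at the end exists only so the sequence can be run on a PC.

```c
#include <stdbool.h>
#include <stdint.h>

#define NUM_SENSORS  6
#define DEFAULT_ADDR 0x52u  /* assumed 8-bit power-on I2C address of the VL53L5CX */

/* Hypothetical board hooks standing in for the real GPIO/I2C drivers. */
typedef struct {
    void (*set_lpn)(int sensor, bool enabled);                /* power-down (LPn) line */
    bool (*write_address)(uint8_t current, uint8_t new_addr); /* reprogram I2C address */
} sensor_bus_t;

/* Disable every sensor, then wake them one at a time: only the sensor that was
 * just enabled answers at the default address, so it can be moved to a
 * unique address before the next one is woken. */
bool assign_unique_addresses(const sensor_bus_t *bus, uint8_t out_addr[NUM_SENSORS]) {
    for (int i = 0; i < NUM_SENSORS; i++)
        bus->set_lpn(i, false);
    for (int i = 0; i < NUM_SENSORS; i++) {
        uint8_t new_addr = (uint8_t)(DEFAULT_ADDR + 2 * (i + 1)); /* 8-bit form: step by 2 */
        bus->set_lpn(i, true);
        if (!bus->write_address(DEFAULT_ADDR, new_addr))
            return false;            /* no answer, or more than one device answered */
        out_addr[i] = new_addr;      /* sensor i stays enabled at its new address */
    }
    return true;
}

/* ---- Simulated shared bus, for running the sketch on a PC only ---- */
static bool    sim_enabled[NUM_SENSORS];
static uint8_t sim_addr[NUM_SENSORS];

static void sim_set_lpn(int sensor, bool enabled) { sim_enabled[sensor] = enabled; }

static bool sim_write_address(uint8_t current, uint8_t new_addr) {
    int hits = 0, target = -1;
    for (int i = 0; i < NUM_SENSORS; i++)
        if (sim_enabled[i] && sim_addr[i] == current) { hits++; target = i; }
    if (hits != 1)
        return false;                /* would be an address clash on a real bus */
    sim_addr[target] = new_addr;
    return true;
}

void sim_power_on(void) {            /* all sensors awake at the default address */
    for (int i = 0; i < NUM_SENSORS; i++) { sim_enabled[i] = true; sim_addr[i] = DEFAULT_ADDR; }
}
```

Calling `sim_power_on()` and then `assign_unique_addresses()` with the simulated hooks leaves the six sensors at 0x54 to 0x5E. Skipping the initial power-down loop would make the first address write fail, because all six sensors would answer at the default address.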

The set-up above gives an aggregated horizontal FoV of 90° with 16x8 ranging zones. With all six modules fitted, this expands to a horizontal FoV of 270° with 48x8 ranging zones. In that configuration the maximum readout rate is around 5 Hz, because the I2C bus of the MCU used is limited to 400 kbit/s and each acquisition is quite large, at around 1.4 kB per sensor.
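As a rough check of the ~5 Hz figure, the I2C bus time per aggregated frame can be estimated. The sketch below is a back-of-the-envelope model, not the firmware: it assumes about 1.4 kB per sensor per acquisition and 9 clock cycles per byte (8 data bits plus ACK), and ignores START/STOP, addressing and processing overhead, so it gives an upper bound.

```c
/* Upper bound on the aggregated LiDAR frame rate when all result data shares
 * one I2C bus. Each byte costs 9 SCL cycles (8 data bits + ACK); START/STOP,
 * register addressing and MCU processing time are ignored. */
double max_frame_rate_hz(double i2c_clock_hz, double bytes_per_sensor, int num_sensors) {
    double bits_per_frame = bytes_per_sensor * 9.0 * num_sensors;
    return i2c_clock_hz / bits_per_frame;
}
```

With `max_frame_rate_hz(400e3, 1400.0, 6)` this gives about 5.3 Hz (75,600 bit times per frame), in line with the observed ~5 Hz; with the two modules of the current set-up, the bus alone would allow about 15.9 Hz.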

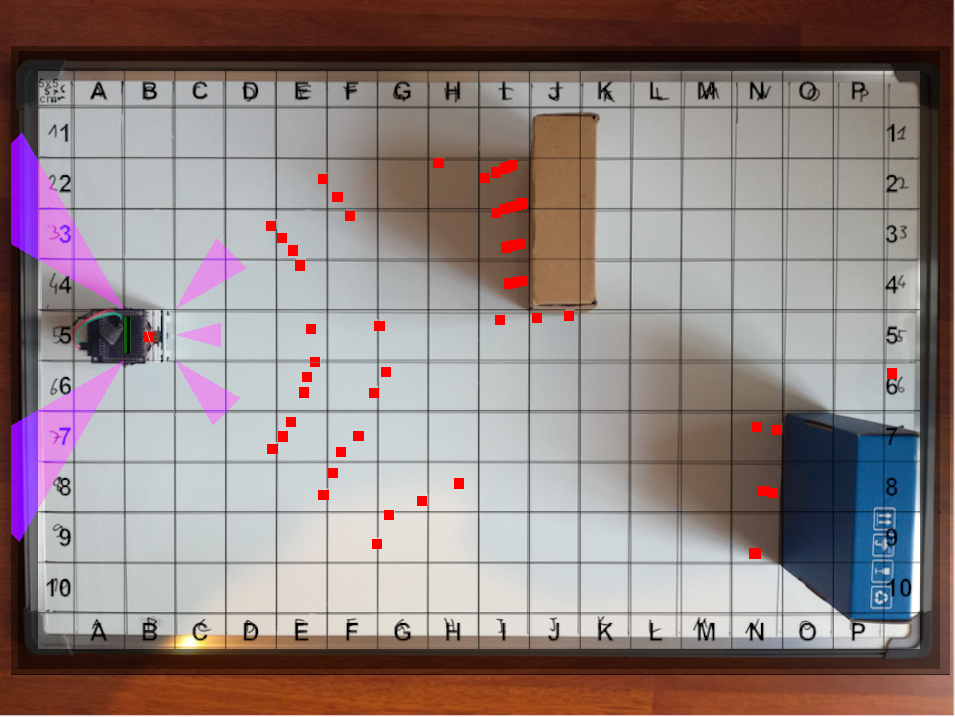

The aggregated ranging information is then sent over Bluetooth to the Robot Hub software, where it is rendered as a point cloud. An example can be seen in the picture below, where the point cloud rendered by the Robot Hub is overlaid on a picture of the real scene:

![]()

The LiDAR add-on board still requires tuning of the ToF sensor settings and optimization of the acquisition, and it has not yet been tested fully populated. In parallel, mapping and localization software will be developed in C# for the Robot Hub, starting with ICP (Iterative Closest Point). The basic ICP algorithm is already implemented, and updates are posted on Twitter.

The firmware of the LiDAR main board is available on GitHub, and the schematic and Gerber files of both the VL53L5CX breakout module and the main board are available on the website, along with some additional information.

-

Odometry and Robot Hub

03/11/2022 at 09:24

After implementing the motor drive controller, it was time to implement movement feedback for the robot: calculating its position and rotation from the wheel rotations, that is, the odometry of the robot. Because this is a differential drive robot, its motion is constrained: it can only move along two axes, forward translation and yaw rotation. The forward speed, in mm/s, is simply the average of the left and right wheel speeds $v_L$ and $v_R$:

$$v = \frac{v_L + v_R}{2}$$

The rotation rate, in rad/s, is calculated with the following formula, where Spacing is the distance between the two wheels in mm:

$$\omega = \frac{v_R - v_L}{\text{Spacing}}$$

With this sign convention, a positive $\omega$ is a counter-clockwise (left) turn.

These values are in the robot's local reference frame and have to be converted into a stationary global reference frame. Since the robot moves on the same plane (XY plane) as the global reference frame, the yaw rotation is the same in both frames, and only the forward translation has to be converted into its X and Y components in the global frame:

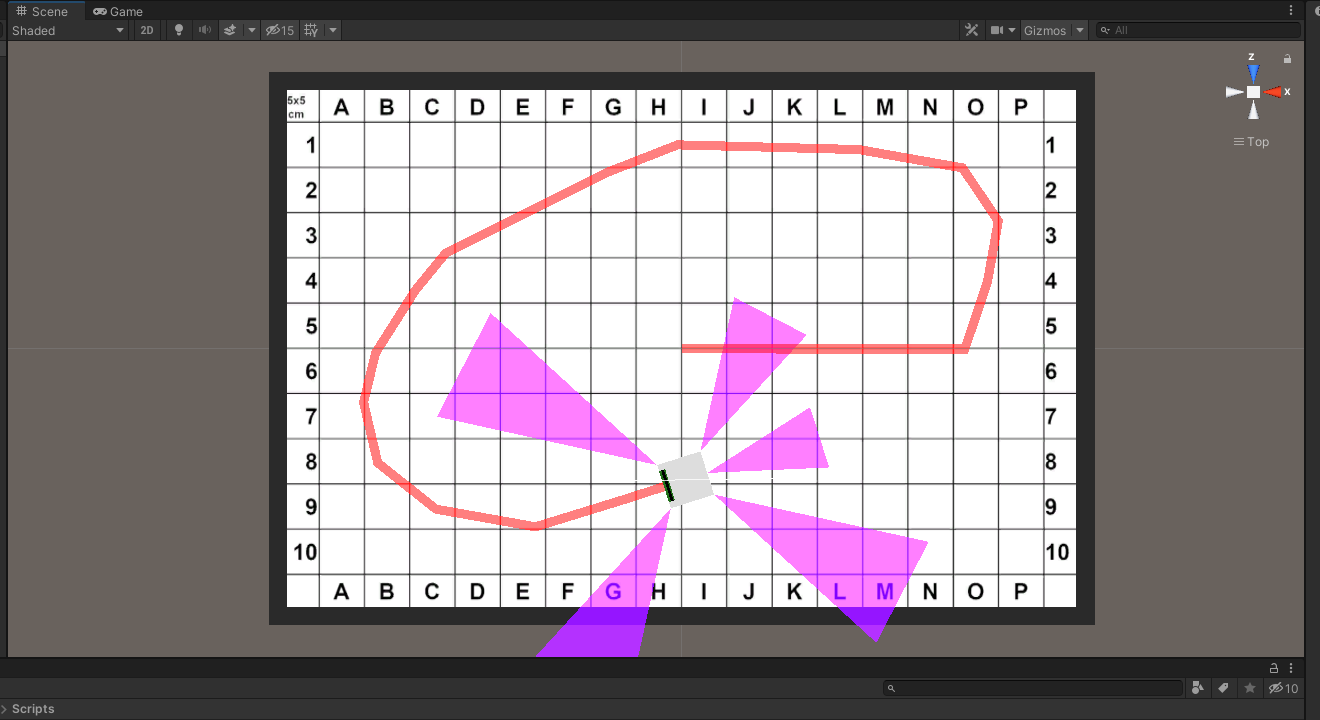

Both the yaw rate and the X and Y translation speeds must be integrated over time to obtain the actual position and rotation of the robot. These values are then sent to the PC, where they are used to update the robot's position and to draw its movement path:

![]()

The image above is from the Robot Hub, which is also under development using the Unity game engine and is available on GitHub:

https://github.com/NotBlackMagic/MiniCubeRobot-Hub

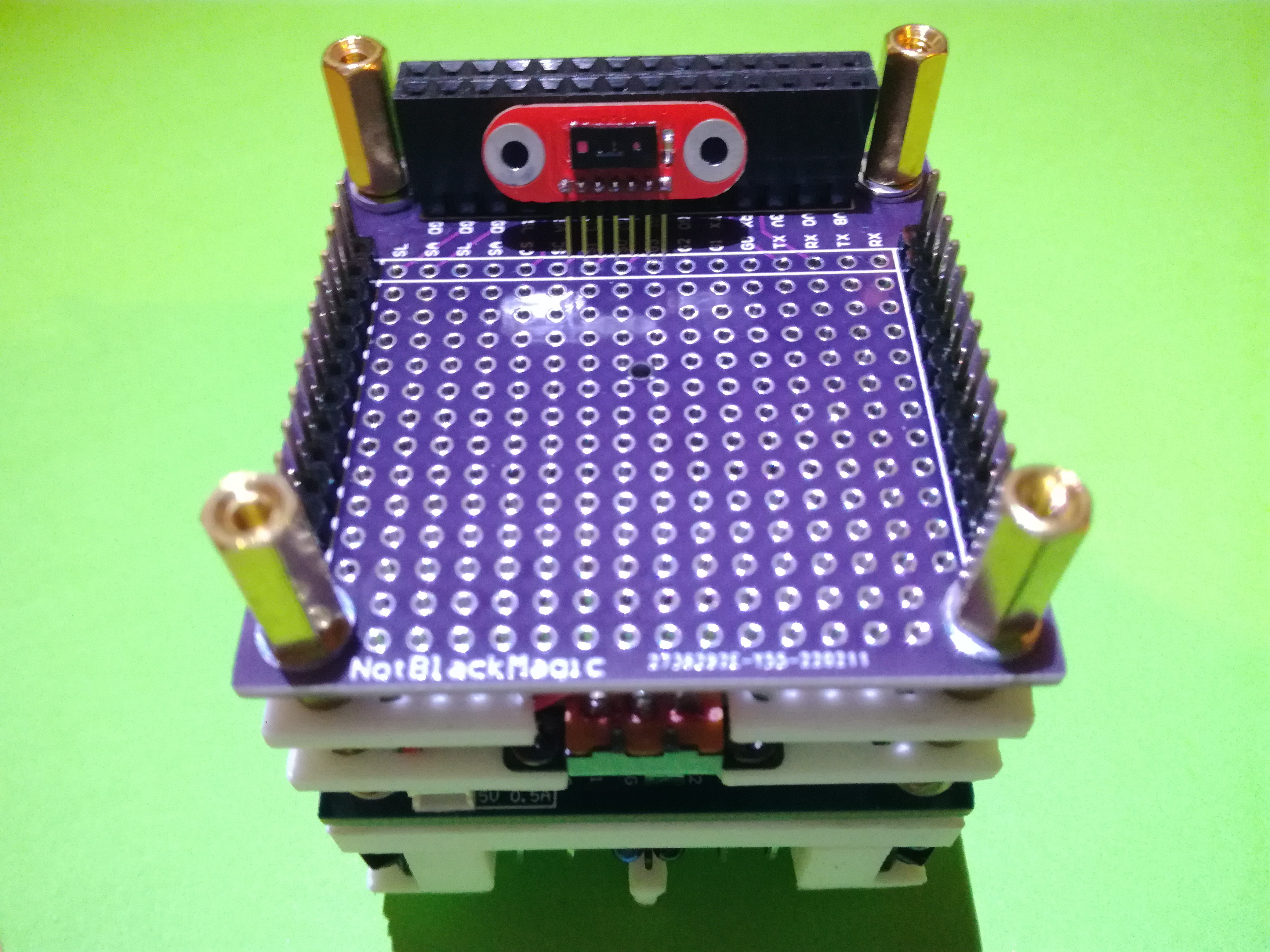

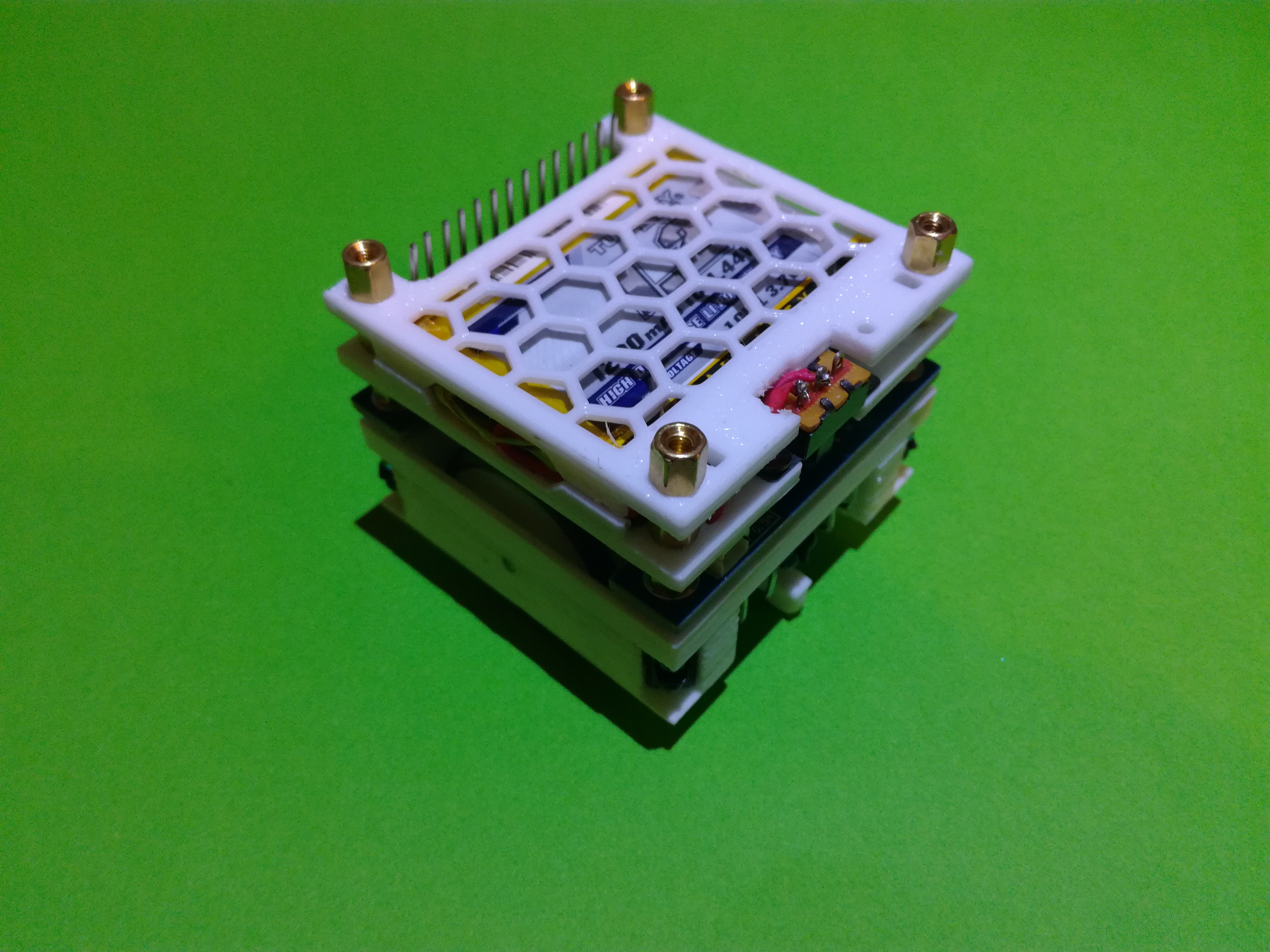

Also, the newly developed prototype board for the Mini Cube Robot has arrived, and it looks good and fits well on the robot:

![]()

Together with the prototype board, a test module for the VL53L5CX LiDAR sensor from STMicroelectronics was also ordered. This small and relatively cheap LiDAR sensor can output an 8x8 range matrix with a range of up to 400 cm. It looks very interesting as a simple range finder and/or LiDAR for the Mini Cube Robot! Testing is already under way, with progress being published on Twitter.

The updated firmware with the odometry is available on GitHub, and, as always, more information on the odometry and the reference frames is available on the website.

-

Motor Drive Controller

02/23/2022 at 19:44

The Mini Cube Robot motors are driven by a dual H-Bridge, with the motor speed controlled by a PWM signal and the rotation direction by a GPIO. Each motor also has an encoder for motor speed (and therefore wheel speed) feedback. Both were tested and characterized in previous updates. Controlling the PWM signals directly with a simple wheel-speed-to-PWM conversion function is not ideal and would not result in a very accurate system: there would be large discrepancies between the desired and actual wheel speeds, especially with changes in battery voltage or motor load. Because of this, a more sophisticated controller with feedback is used, the ubiquitous PID controller.

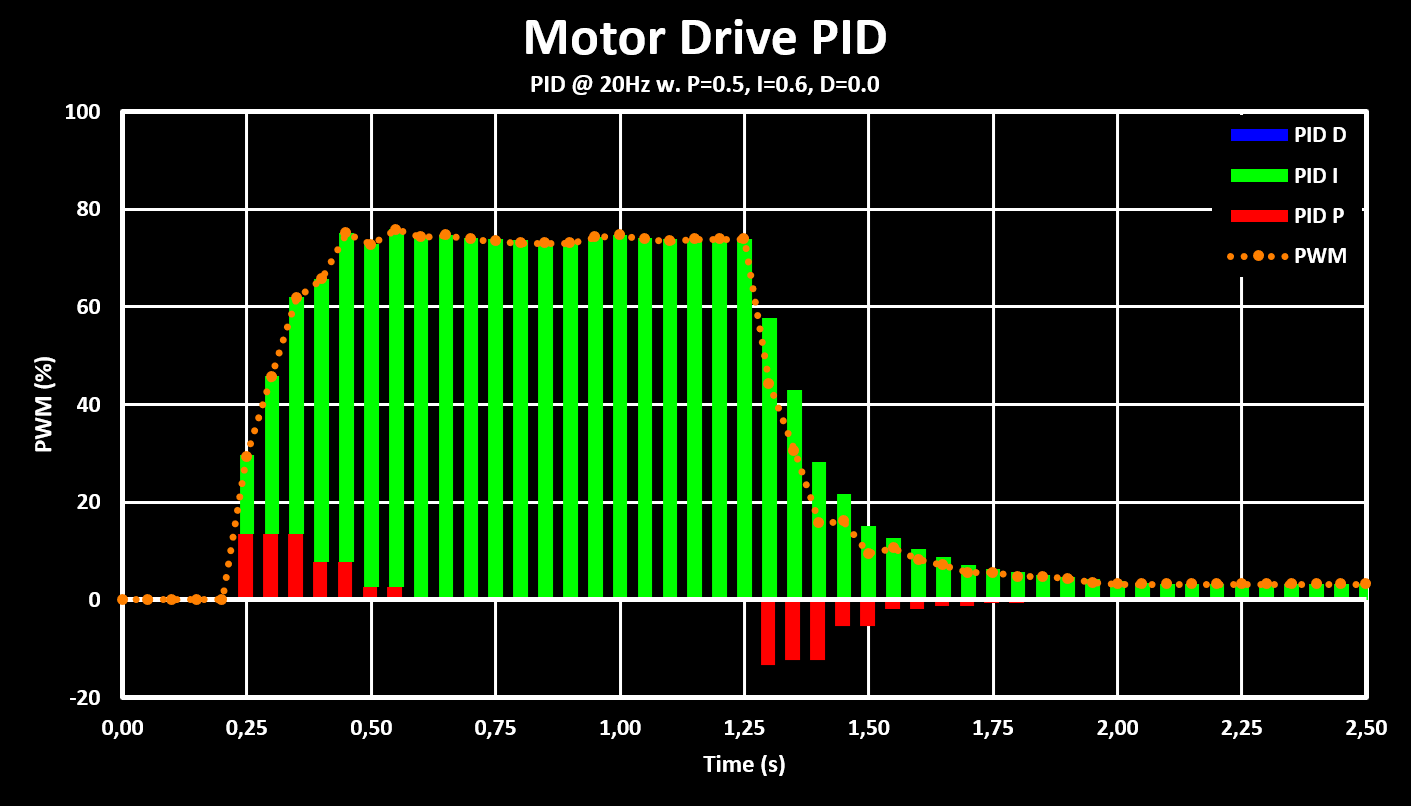

The implemented PID controller only uses the proportional (P) and integral (I) terms. The I term is the most important one, as it is needed to produce a non-zero output (PWM value) even with a zero error at the input, which is the case in steady state at a constant speed; a P-only controller would output zero at zero error. The derivative term was not necessary because the robot drive system responds relatively slowly and is naturally damped, so no additional damping was needed.

The inputs of the PID controller are the desired wheel speeds, in mm/s, and the output is the PWM value used to control the H-Bridge. The PID controller is implemented in fixed point for improved performance and compatibility with MCUs without an FPU. It runs at a 20 Hz update rate, while the encoder calculates new values at a lower 10 Hz rate. The gains arrived at after some tuning are 0.5 (16384 in Q15) for the proportional term and 0.6 (19661 in Q15) for the integral term. The step response of the controller, for an input step (wheel speed) from 0 mm/s to 70 mm/s and then back to 0 mm/s, is shown in the figure below.

![]()
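A fixed-point PI update step along these lines could look like the sketch below. It uses the Q15 gains quoted above, but everything else is an assumption rather than the project's code: the integral gain is applied once per 20 Hz control step, the PWM full scale `PWM_MAX` is a placeholder, the sign of the output is taken to select the direction GPIO, and the integrator is clamped to the output range as simple anti-windup.

```c
#include <stdint.h>

#define Q15_ONE  32768
#define PWM_MAX  1000      /* hypothetical PWM full-scale value */

typedef struct {
    int32_t kp_q15;        /* 16384 = 0.5 */
    int32_t ki_q15;        /* 19661 = 0.6 */
    int32_t integral_q15;  /* accumulated I term, in PWM counts scaled by 2^15 */
} pi_ctrl_t;

/* One PI step: speeds in mm/s (errors assumed within a few thousand mm/s so
 * int32 cannot overflow); returns a signed PWM value (sign = direction). */
int32_t pi_step(pi_ctrl_t *c, int32_t setpoint_mm_s, int32_t measured_mm_s) {
    const int32_t limit_q15 = (int32_t)PWM_MAX * Q15_ONE;
    int32_t error = setpoint_mm_s - measured_mm_s;

    int32_t p_q15 = c->kp_q15 * error;
    c->integral_q15 += c->ki_q15 * error;

    /* anti-windup: keep the integrator within the output range */
    if (c->integral_q15 >  limit_q15) c->integral_q15 =  limit_q15;
    if (c->integral_q15 < -limit_q15) c->integral_q15 = -limit_q15;

    /* back from Q15 to PWM counts (division truncates toward zero) */
    int32_t out = (p_q15 + c->integral_q15) / Q15_ONE;
    if (out >  PWM_MAX) out =  PWM_MAX;
    if (out < -PWM_MAX) out = -PWM_MAX;
    return out;
}
```

Starting from rest, a 70 mm/s step gives 77 on the first call (35 from P, 42 from I); a following call with zero error still returns 42, because the integrator holds the output at a non-zero PWM value.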

These settings are then used for a simple drive test, shown in the clip below, where the robot is controlled over Bluetooth from the PC. The translation speed used is 70 mm/s and the rotation speed used is 45 deg/s.

This test shows the robot moving and rotating at the set speeds. It also highlights some problems with the drive train. First, it is very loud: some lubrication will be added to help with that, and increasing the PWM frequency (currently 1 kHz), if possible, should reduce the whining noise. The robot also drifts sideways somewhat, in part because one wheel has a dent in it (from using the hot air gun too close to it…).

The firmware is available on GitHub and some more information on the PID controller and on the results are as always available on the website.

New add-on boards for the robot are arriving soon, such as a simple prototype board for testing some IMU sensors! Stay tuned on Twitter for early previews of what is to come, for both this and other projects.

-

Reflective Collision Sensor Test

11/25/2021 at 21:16

The robot drivetrain holds two collision sensor boards, each with three IR reflective sensors (TCRT5000). This sensor is composed of an IR LED and a phototransistor that senses the IR light reflected from an obstacle. The IR LED is driven through a 100 Ohm resistor, which gives a drive current of around 20 mA, and the phototransistor is connected to a 10 kOhm pull-up resistor that converts its output current into a voltage, which is then read by the MCU's ADC.
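The quoted LED current follows from Ohm's law across the series resistor. Assuming a 3.3 V supply and a TCRT5000 LED forward voltage of roughly 1.2 V to 1.3 V (the supply rail is an assumption, it is not stated in this log), and with the phototransistor collector current $I_C$ flowing through the pull-up:

$$I_{LED} = \frac{V_{CC} - V_F}{R_{LED}} \approx \frac{3.3\,\mathrm{V} - 1.25\,\mathrm{V}}{100\,\Omega} \approx 20.5\,\mathrm{mA}$$

$$V_{ADC} = V_{CC} - I_C \cdot 10\,\mathrm{k\Omega}$$

So more reflected light means a larger $I_C$ and a lower ADC voltage. The same relation with the 330 Ohm resistor used on the motor encoder boards gives about 6.2 mA.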

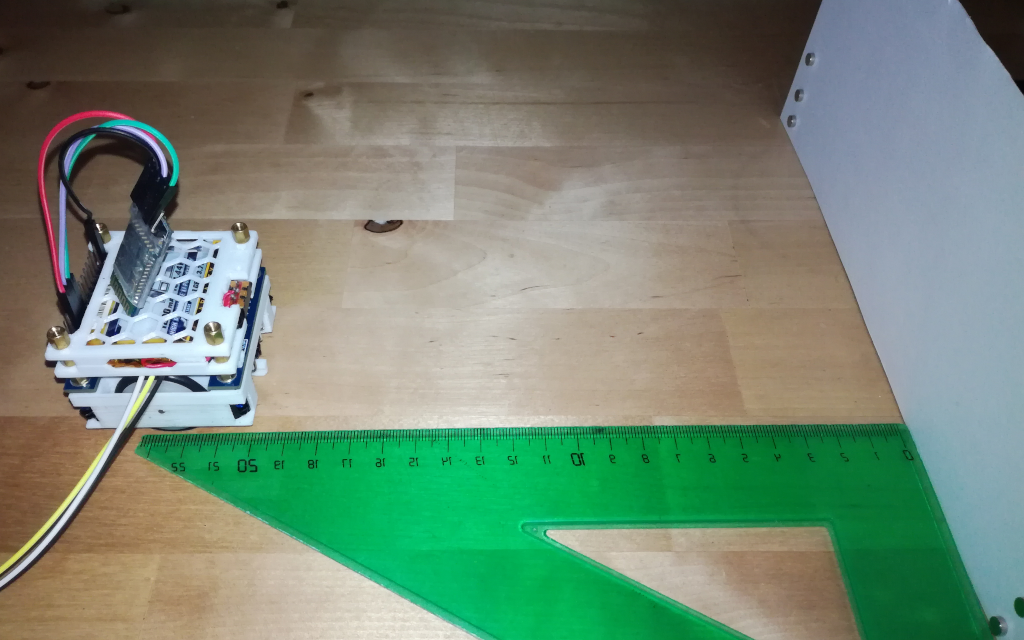

To test the effective range of the IR reflective sensors, i.e. the distance at which they can sense an obstacle, the setup shown in the figure below is used. The robot is placed at a known distance from the target and the ADC value of the front-facing sensor is recorded.

![]()

The test was performed at distances from 1 cm to 20 cm, with both a white and a black paper target. The tests were performed both without any sunlight (window shades closed) and with very little indirect sunlight (window shades open, but a north-facing window). This is because sunlight has a very high IR content, and any significant indirect sunlight saturates the phototransistor, so that obstacles can't be detected at any useful distance.

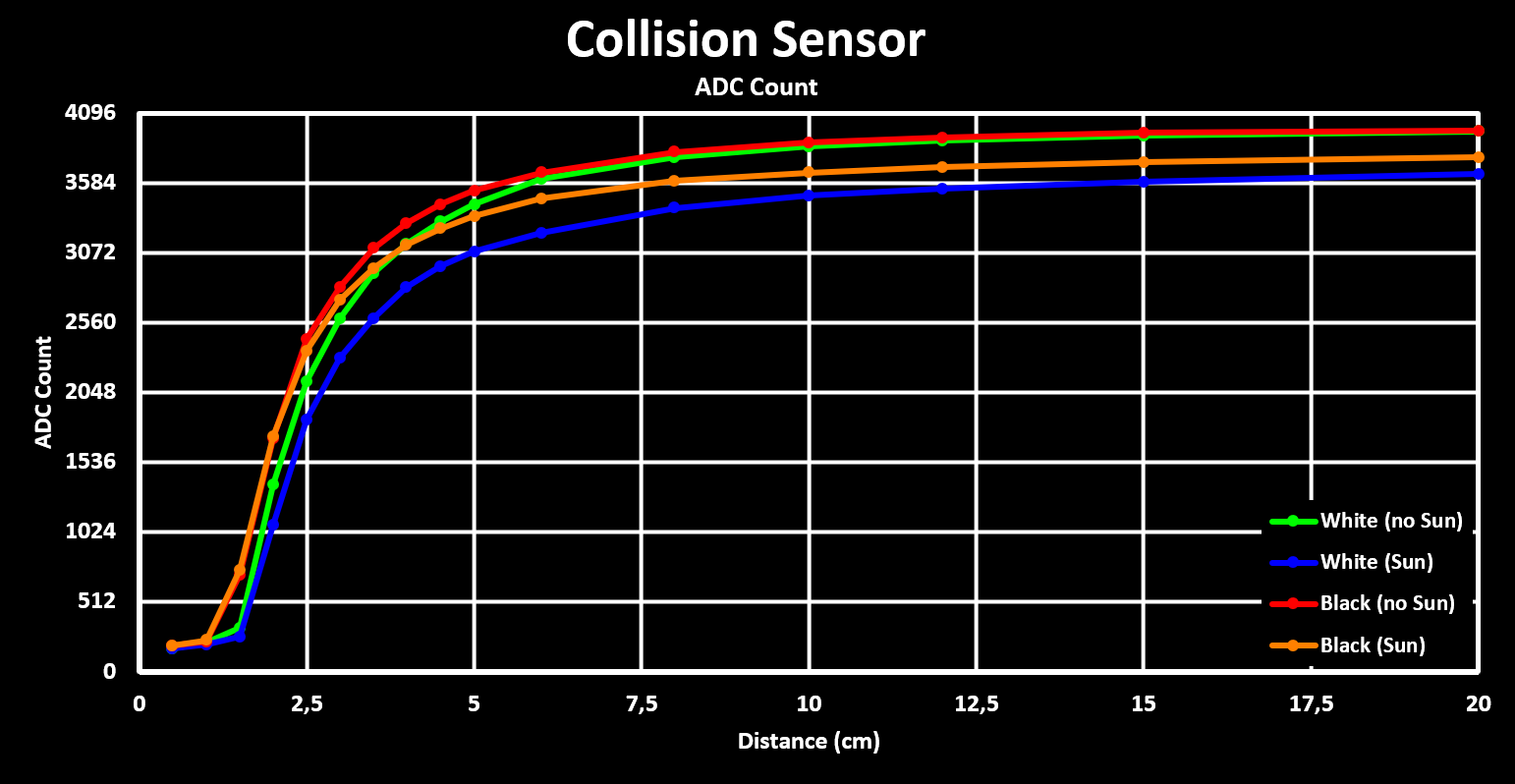

Below is a figure showing the results of these tests.

![]()

These results show that the IR reflective sensors can be used for distances below around 5 cm. In this range the distance can be roughly estimated without having to worry too much about the obstacle color. This is sufficient for the intended use: detecting an obstacle at a distance (> 1.5 cm) that allows the robot to turn around without having to reverse.
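For the intended use, a detection flag is enough. A minimal sketch with hysteresis is shown below. The ADC thresholds are placeholders, not measured values: they would be read off the measured ADC-versus-distance results at the desired trigger distance. The sketch assumes a 12-bit ADC and that, with the phototransistor on a pull-up, a closer obstacle gives a lower reading.

```c
#include <stdbool.h>
#include <stdint.h>

/* Placeholder thresholds (12-bit ADC counts), not measured values. With the
 * phototransistor on a pull-up, more reflected light pulls the reading down. */
#define OBSTACLE_ENTER_ADC 2000u  /* report an obstacle below this */
#define OBSTACLE_LEAVE_ADC 2300u  /* clear it again only above this */

typedef struct { bool obstacle; } collision_sensor_t;

/* Threshold with hysteresis: readings between the two levels keep the
 * previous state, so noise around one level cannot make the flag flicker. */
bool collision_update(collision_sensor_t *s, uint16_t adc) {
    if (!s->obstacle && adc < OBSTACLE_ENTER_ADC)
        s->obstacle = true;
    else if (s->obstacle && adc > OBSTACLE_LEAVE_ADC)
        s->obstacle = false;
    return s->obstacle;
}
```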

As always there is some more information and results available on the Website. Also, more frequent progress updates on projects are published on Twitter.

-

Motor Encoder and Drive Results

11/05/2021 at 10:26

Motor Encoder Tuning

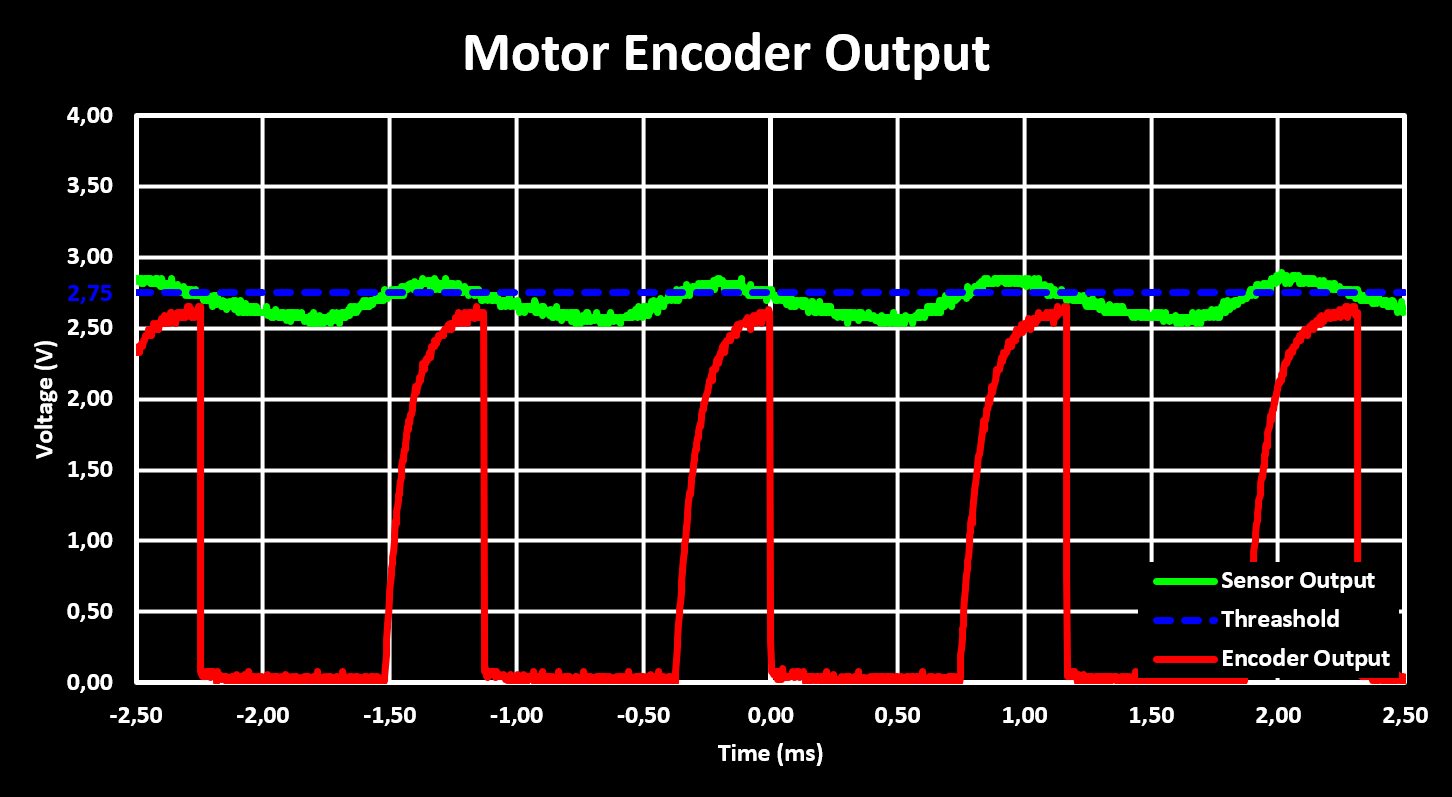

As mentioned in the previous project log, the motor encoders were tuned before gluing them in place. The first part of the tuning consists of setting the reflective sensor's LED drive current, by changing the LED drive resistor, and the phototransistor output resistor, which sets the sensitivity. The values used are 330 Ohm for the LED drive resistor, giving a drive current of about 6 mA, and 47 kOhm for the phototransistor resistor. These give a good output swing of around 250 mV when the leaf passes in front of the sensor, while keeping the current consumption relatively low.

Next, the voltage comparator threshold is set to about the middle of the reflective sensor output swing, which is 2.75 V. All these signals are shown in the figure below: in green the output of the reflective sensor, in blue the threshold voltage and in red the output of the comparator. The comparator output had to be filtered by adding a 10 nF capacitor to remove false triggers caused by noise on the reflective sensor output. This is why it has a slow rise time and a high level below 3.3 V.

![]()

The tuned motor encoders were then glued in place (the figure above is already from the final assembly). This was done in more than one robot chassis, and the encoders always gave a reliable output, confirming that the chosen resistor values make the motor encoder reliable.

Motor Drive Characterization

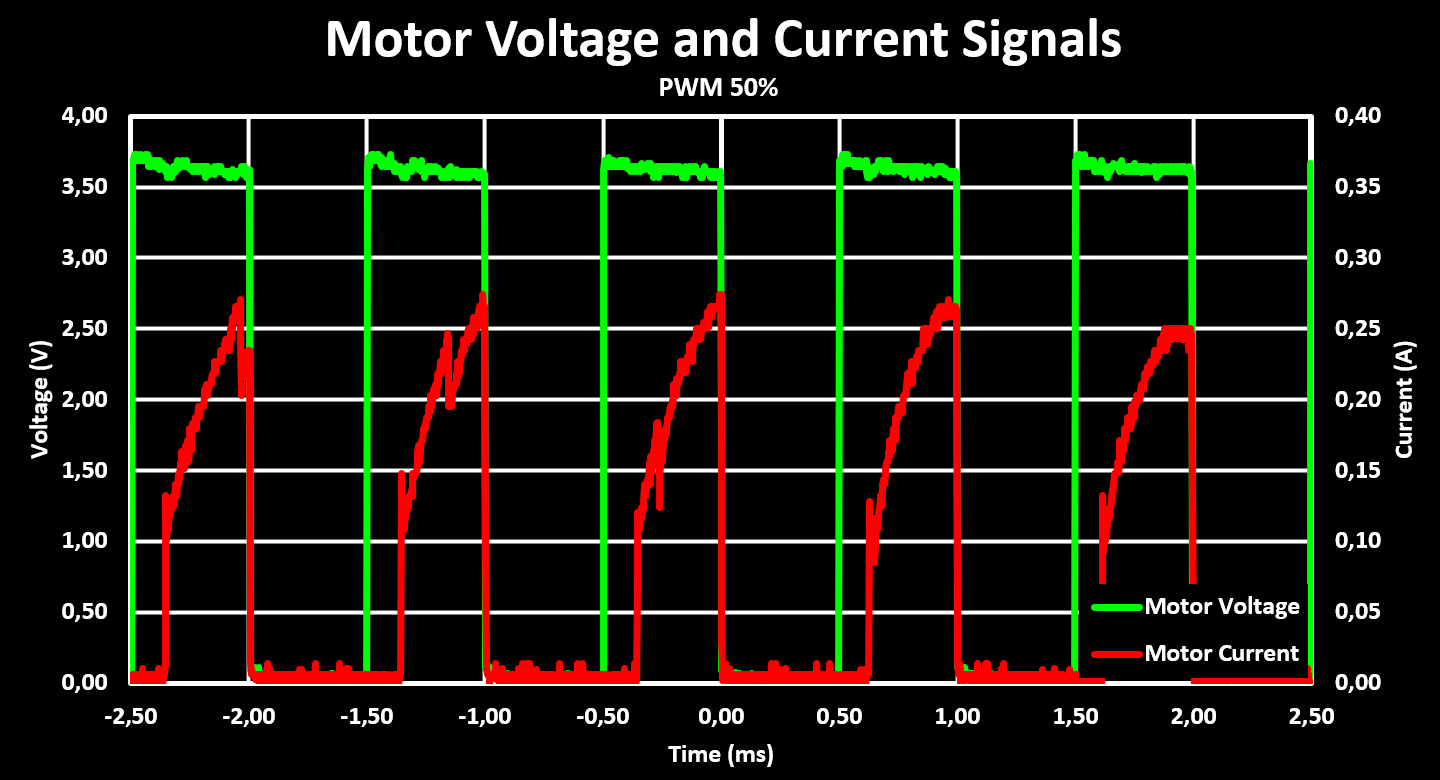

With the motor encoders working and glued in place, and the Motor Drive PCB added on top and soldered on, it was time to test the motor drive. The motors are driven by two H-Bridges in a single IC, the STSPIN240. The motor RPM is controlled by using PWM signals to drive the H-Bridge. Below is a figure showing the voltage and current at one of the motor's terminals. The current is obtained from the INA180 connected to the current sense resistor on the motor output of the STSPIN240.

![]()

The figure shows a slight voltage drop when the current increases. This could perhaps be mitigated with larger capacitors to bridge the current spikes, but the drop is small and therefore not considered a concern.
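To turn the sampled INA180 output into a current value, the firmware only has to undo the amplifier gain and the sense resistor, since the INA180 is a fixed-gain current-sense amplifier ($V_{out} = G \cdot I \cdot R_{sense}$). A sketch is below; the INA180 variant (and thus its gain), the sense resistor value and the ADC reference are not given in this log, so the values used are placeholders.

```c
#include <stdint.h>

/* Placeholder hardware values: adjust to the actual board. */
#define ADC_FULL_SCALE 4095.0   /* 12-bit ADC */
#define ADC_VREF_V     3.3      /* assumed ADC reference */
#define INA180_GAIN    50.0     /* V/V, assumed A2 variant (A1=20, A2=50, A3=100, A4=200) */
#define R_SENSE_OHM    0.1      /* hypothetical sense resistor */

/* INA180 output: V_out = gain * I * R_sense, so I = V_out / (gain * R_sense). */
double motor_current_ma(uint16_t adc_counts) {
    double v_out = adc_counts * ADC_VREF_V / ADC_FULL_SCALE;
    return 1000.0 * v_out / (INA180_GAIN * R_SENSE_OHM);
}
```

With these placeholder values, a full-scale reading corresponds to 660 mA.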

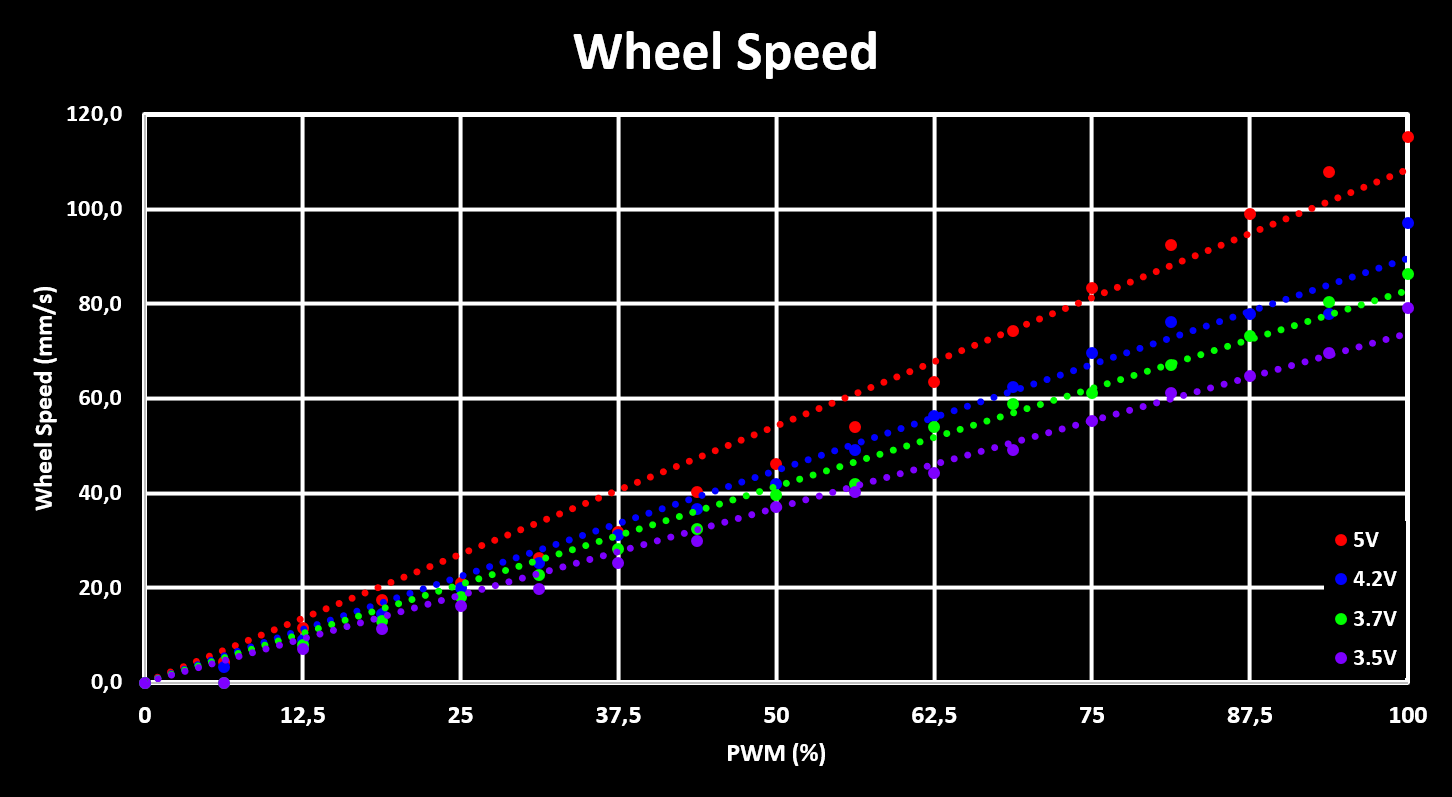

Finally, with both the motor drive and encoders working, it is possible to characterize the motor RPM versus PWM duty cycle. From that, using the gear ratio and wheel diameter, the expected robot drive speed for different PWM duty cycles and supply voltages (battery voltage levels) is obtained. This is shown in the figure below.

![]()

This figure shows that the maximum expected drive speed of the robot is around 90 mm/s with a full battery (4.2 V) and decreases to around 75 mm/s when the battery is empty (3.5 V). Therefore, the robot firmware will limit the maximum drive speed in software to 70 mm/s, so that this speed can always be reached at any battery voltage.
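The conversion from encoder pulses to motor RPM, and from motor RPM to drive speed through the gear ratio and wheel diameter, can be sketched as follows. The pulses per revolution, gear ratio and wheel diameter are placeholders, since their actual values are not given in this log; a counting window of 0.1 s would match the 10 Hz encoder update rate mentioned in the motor controller log.

```c
/* Placeholder mechanical parameters, not the robot's actual values. */
#define PULSES_PER_REV  2.0   /* hypothetical: encoder pulses per motor revolution */
#define GEAR_RATIO      30.0  /* hypothetical: motor revolutions per wheel revolution */
#define WHEEL_DIAM_MM   20.0  /* hypothetical wheel diameter */

/* Motor speed from the number of encoder pulses counted in one window. */
double motor_rpm(unsigned pulses, double window_s) {
    return (pulses / PULSES_PER_REV) / window_s * 60.0;
}

/* Linear drive speed from motor RPM through the gearbox and the wheel. */
double drive_speed_mm_s(double rpm) {
    const double pi = 3.14159265358979323846;
    return rpm / 60.0 / GEAR_RATIO * pi * WHEEL_DIAM_MM;
}
```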

The next step is to characterize the reflective collision sensors.

A more detailed description of the tuning of the motor encoder as well as some additional results of the motor drive are available on the Website.

Updates and progress on this project, and other projects, are published on Twitter.

-

Hardware (PCBs)

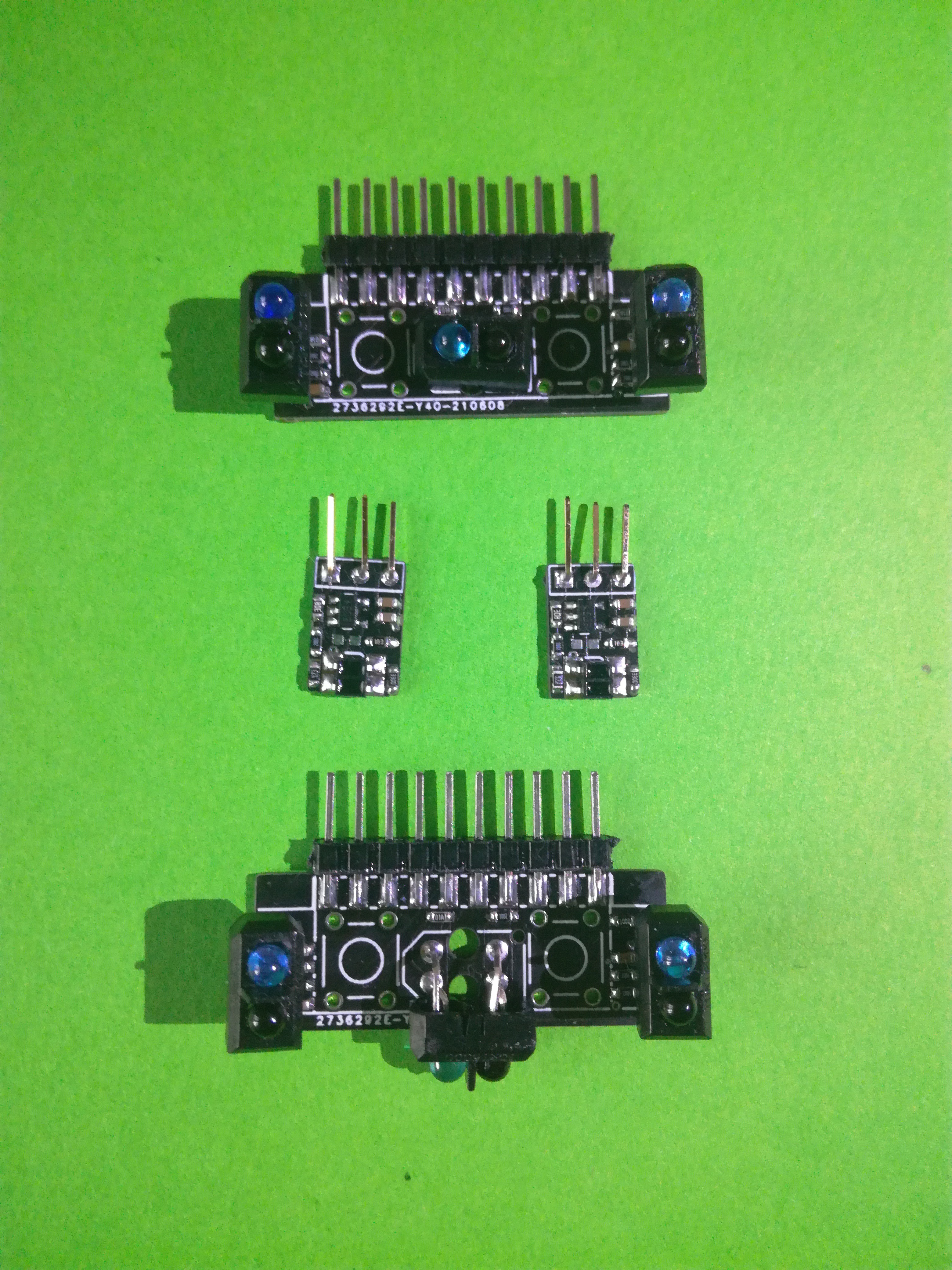

10/17/2021 at 19:08

This update is focused on the hardware of the Mini Cube Robot drivetrain (base). All the necessary mechanical parts have arrived (screws, gears, motors, wheels, etc.), as well as all three PCBs (Motor Encoder, Collision Sensor and Motor Drive) and the components needed to populate them.

First, all PCBs were assembled and tested for functionality. Both the Motor Encoder and Collision Sensor boards (in the figure below) are working: all sensors return a good signal and behave as expected.

![]()

The Motor Drive PCB was also tested: the MCU is working, as are the Motor Drive and Battery Manager ICs. The peripherals are still being tested, as well as the acquisition of all the sensors and the control of the motors.

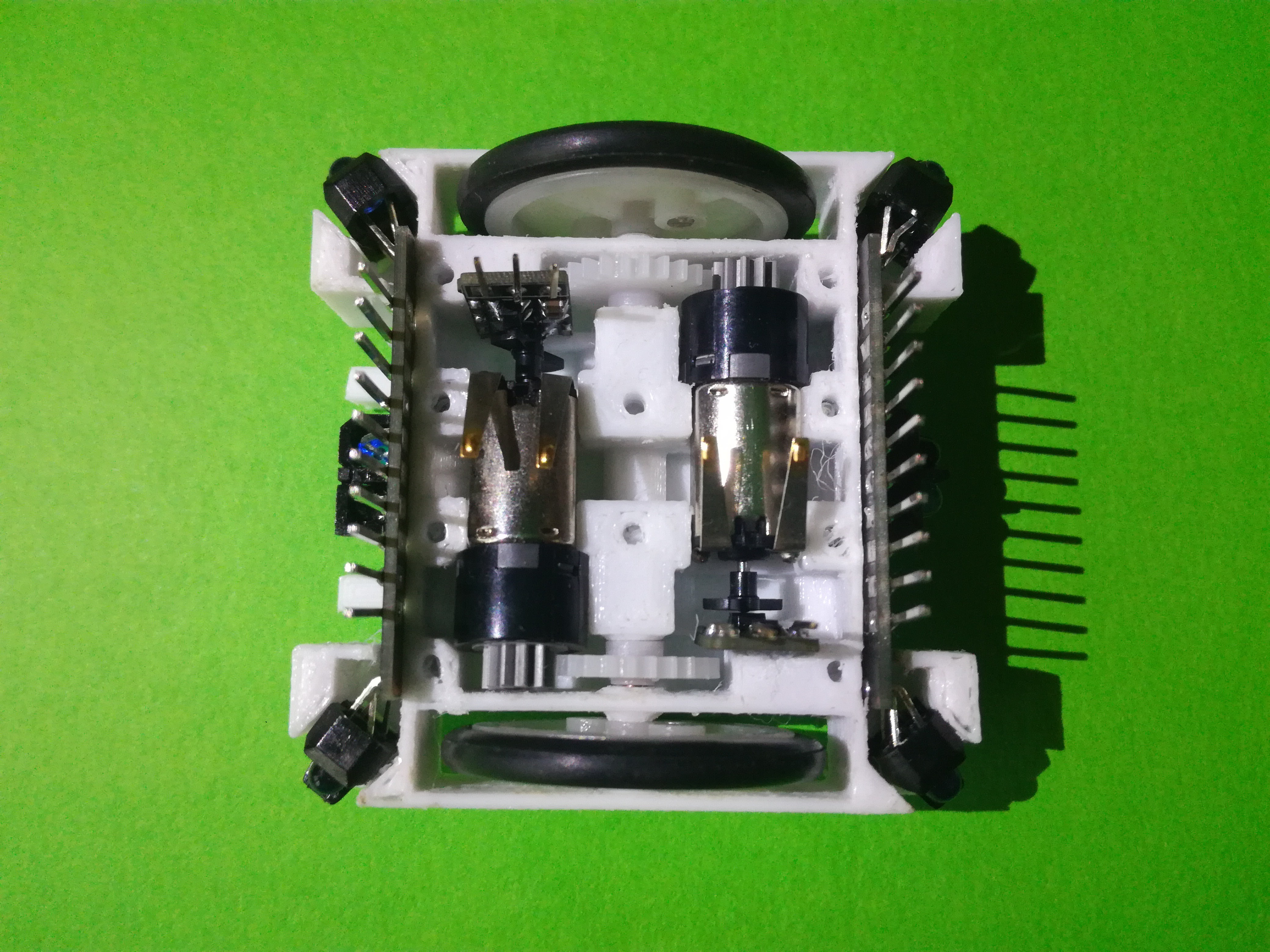

With the PCBs' functionality tested, the first Mini Cube Robot base was assembled. The mechanical parts are fitted into the bottom drivetrain holder, together with the collision sensor boards and the motor encoder boards. The latter have to be glued in place, but before that they were tuned so that the voltage comparator outputs a clean signal whenever the motor's plastic leaf passes in front of it (results in a future update/log). Below is a view of the fully assembled bottom drivetrain holder.

![]()

As can be seen, all the parts fit snugly into their locations. The gears mesh well and the motors can spin the wheels perfectly. This is of course not the first 3D printed version; there were quite a few versions before arriving at these. The files for the latest 3D printed parts, the ones used here, are linked for download.

With this done, the top cover was added and the Motor Drive PCB was mounted on top. The collision sensor and motor encoder boards have to be soldered to the Motor Drive PCB; this is planned to change in a future version. The assembly can be seen in the figure below.

![]()

A few blue wires are visible in the photo; they are connected to the motor encoder IR reflective sensor outputs to check whether the output voltage changed with the complete assembly. Nothing major was detected, and the motor encoder output continued to work as expected.

Finally, the battery pack was added. It is composed of two 3D printed parts (the same part is used for the base and, flipped, for the top) holding a 1200 mAh Turnigy (HobbyKing) 1S LiPo between them, as well as a power switch. The fully assembled Mini Cube Robot base can be seen in the figure below.

![]()

This is the current state of the Mini Cube Robot project. Next is the development of the software for it, to control the motors and read all the sensors.

More details on each part and a first assembly guide are available on the website. The assembly instructions will be added to this project page later.

Also, updates and progress on this project, and other projects, are published on Twitter.

Mini Cube Robot

A small 5x5cm Cube Robot with differential two wheel drive. Modular design with a simple and cheap base.

NotBlackMagic
