5-minute video:

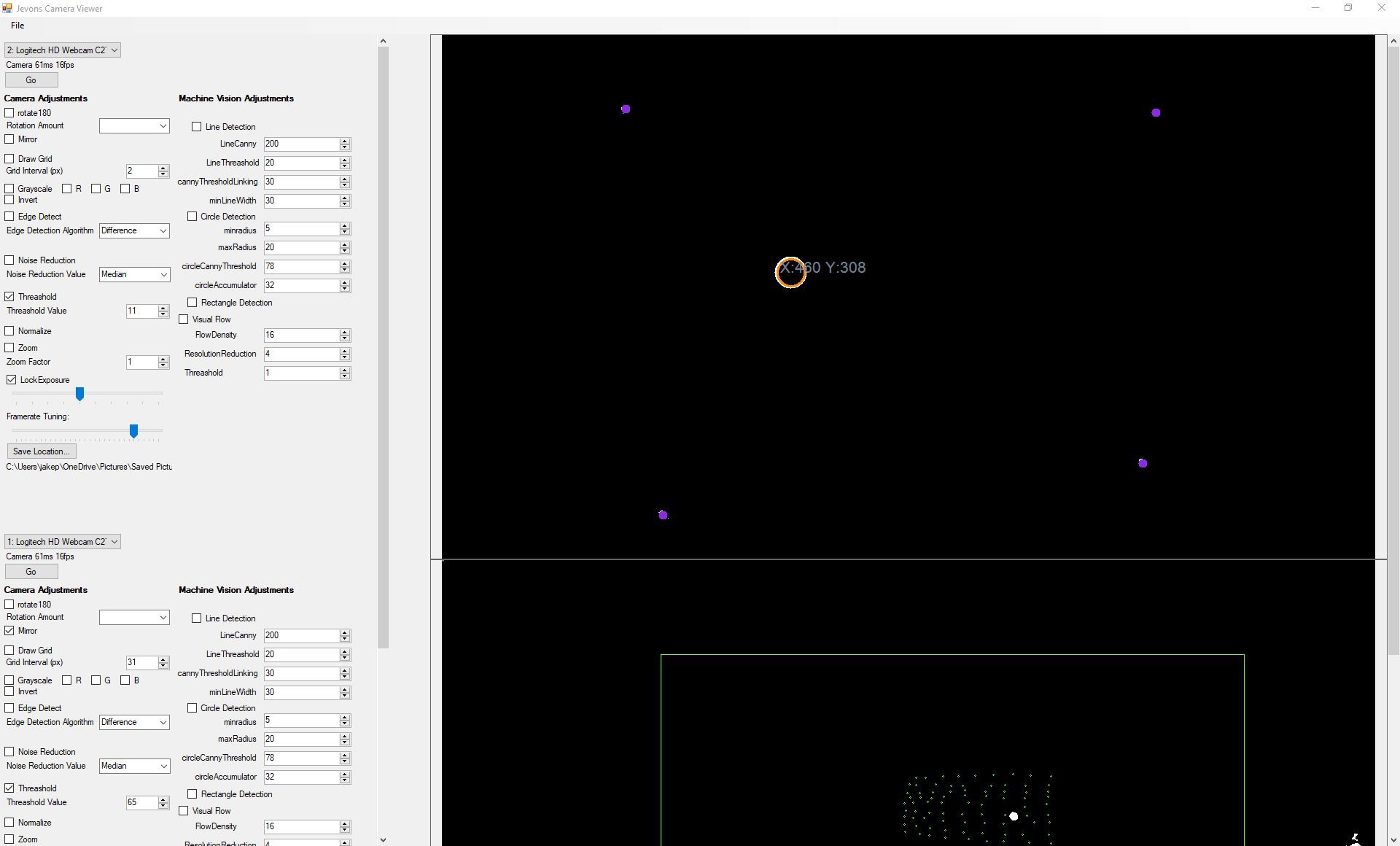

The project's software repository is on GitHub: The Jevons Camera Viewer

Please see the GitHub repository for all design files. This is a working prototype.

Please see Eye_Tracker_Software_Quick_Start_Guide.pdf in the files to get started with the software.

The software can also compute optical flow on video in real time.
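The page does not say which optical-flow method the software uses. As a hedged illustration only, the sketch below is the classic Lucas-Kanade estimate for a single window: it builds the 2x2 structure tensor from spatial and temporal gradients and solves for the displacement `(u, v)`. The frame format (grayscale lists of rows) and the function name `lucas_kanade_window` are hypothetical.

```python
# Minimal Lucas-Kanade sketch: estimates the flow (u, v) of one window
# between two grayscale frames stored as lists of rows (hypothetical format).


def lucas_kanade_window(prev, curr, x0, y0, half):
    """Return (u, v) in pixels/frame for the window centred on (x0, y0),
    or None when the window has too little texture to solve."""
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(y0 - half, y0 + half + 1):
        for x in range(x0 - half, x0 + half + 1):
            # Spatial gradients by central differences on the previous frame
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0
            # Temporal gradient between the two frames
            it = curr[y][x] - prev[y][x]
            # Accumulate the 2x2 structure tensor and the right-hand side
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None  # aperture problem: no gradient in at least one direction
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt] by Cramer's rule
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v
```

The window centre must be at least `half + 1` pixels inside the frame. This single-window form assumes small motion between frames; real-time implementations such as OpenCV's pyramidal `calcOpticalFlowPyrLK` track many points at several image scales.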

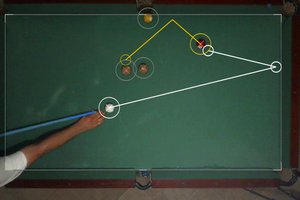

It can identify shapes such as circles, lines, and triangles.
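How the software recognizes shapes is not described here. One common approach, shown below as an assumption rather than the project's method, is to simplify a detected outline to a polygon and then use its vertex count together with its circularity, 4πA/P², which equals 1.0 for a perfect circle. The helper names and the 0.85 circle threshold are hypothetical.

```python
import math


def polygon_area(pts):
    """Shoelace formula for the area of a closed polygon."""
    s = 0.0
    for i in range(len(pts)):
        x1, y1 = pts[i]
        x2, y2 = pts[(i + 1) % len(pts)]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def polygon_perimeter(pts):
    """Sum of edge lengths, including the closing edge."""
    return sum(math.dist(pts[i], pts[(i + 1) % len(pts)]) for i in range(len(pts)))


def classify_shape(pts, circle_threshold=0.85):
    """Label an already-simplified outline as line, triangle, circle or polygon."""
    if len(pts) == 2:
        return "line"
    perimeter = polygon_perimeter(pts)
    if perimeter == 0:
        return "unknown"
    # Circularity 4*pi*A/P^2 is 1.0 for a perfect circle and ~0.785 for a square
    circularity = 4 * math.pi * polygon_area(pts) / perimeter ** 2
    if circularity < 0.05:
        return "line"  # outline encloses almost no area
    if len(pts) == 3:
        return "triangle"
    if circularity >= circle_threshold:
        return "circle"
    return "polygon"
```

A circle traced by a contour finder has many vertices but a circularity close to 1, while a square falls well below the threshold, so the two rules complement each other.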

The Logitech C270 webcam's exposure can be locked.

The image can be posterized for blob detection.
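Posterizing reduces each pixel to one of a few brightness bands, so a bright feature becomes a uniform region that is easy to segment. The sketch below shows the idea under stated assumptions: frames are grayscale lists of rows with values 0 to 255, and a blob is a 4-connected region of pixels in the same band, found by breadth-first flood fill. The function names are hypothetical, not the project's code.

```python
from collections import deque


def posterize(gray, levels):
    """Map 0..255 pixel values to band indices 0..levels-1."""
    step = 256 / levels
    return [[min(int(p // step), levels - 1) for p in row] for row in gray]


def find_blobs(bands, target, min_size=1):
    """Return size and centroid of each 4-connected region whose band == target."""
    h, w = len(bands), len(bands[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or bands[y][x] != target:
                continue
            # Flood-fill this region breadth-first
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < w and 0 <= ny < h and not seen[ny][nx] and bands[ny][nx] == target:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(pixels) >= min_size:
                n = len(pixels)
                centroid = (sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n)
                blobs.append({"size": n, "centroid": centroid})
    return blobs
```

For example, with four bands the brightest band (index 3) isolates features such as a glint or a diffused laser spot, and `min_size` discards single-pixel noise.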

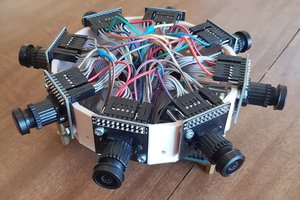

Multiple cameras can be displayed at the same time.

The video feeds can be mirrored and rotated in real time.

The video can be zoomed.

Basic edge detection can be applied.
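The page does not name the edge detector. As an assumed stand-in, the sketch below uses the Sobel operator: two 3x3 kernels estimate the horizontal and vertical intensity gradients, and pixels whose gradient magnitude exceeds a threshold are marked as edges. The function name and threshold parameter are hypothetical.

```python
import math


def sobel_edges(gray, threshold):
    """Return a binary edge map (255 = edge) from a grayscale frame (list of rows)."""
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    # Border pixels are left at 0 because the 3x3 kernel would fall off the frame
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal gradient: right column minus left column, centre row weighted x2
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row, centre column weighted x2
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 255
    return edges
```

On a vertical step from 0 to 100, both columns adjacent to the step get a gradient magnitude of 400, so a threshold of 200 marks a two-pixel-wide edge there and nothing in the flat regions.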

Real-Time Human-Machine Interface:

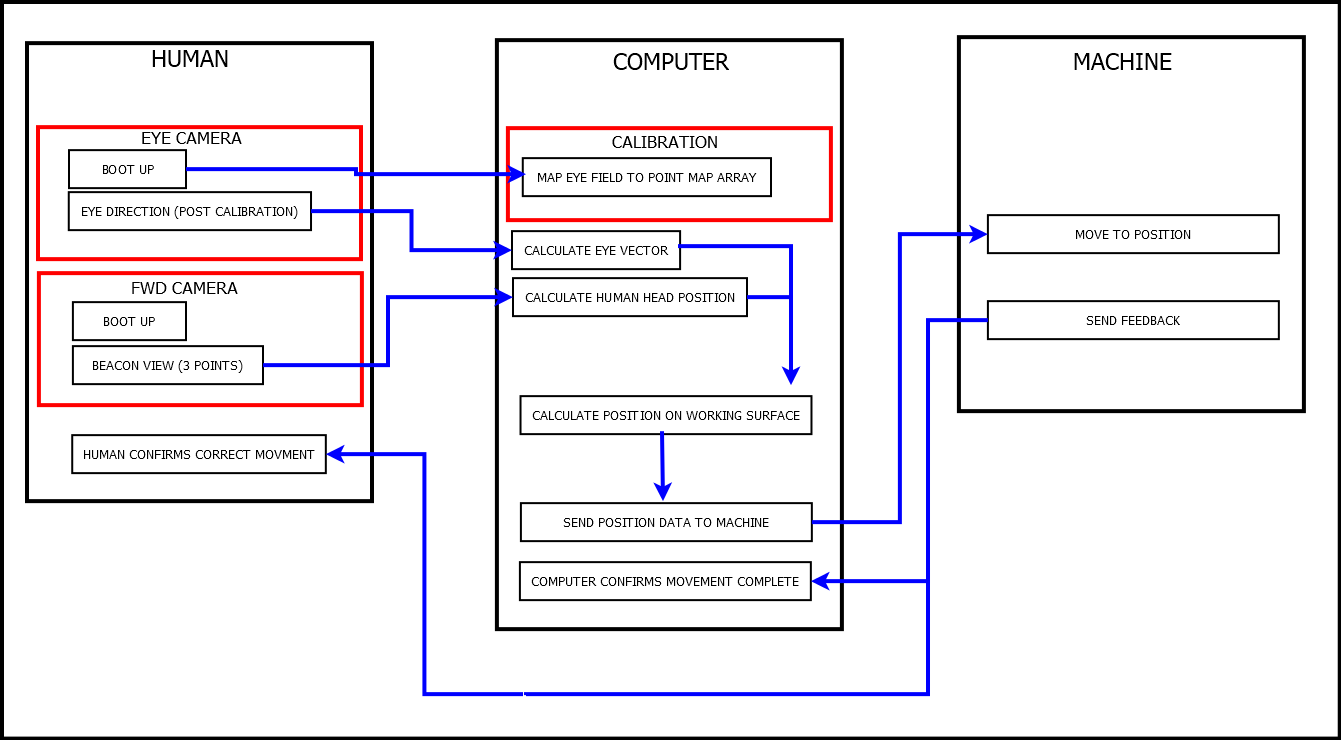

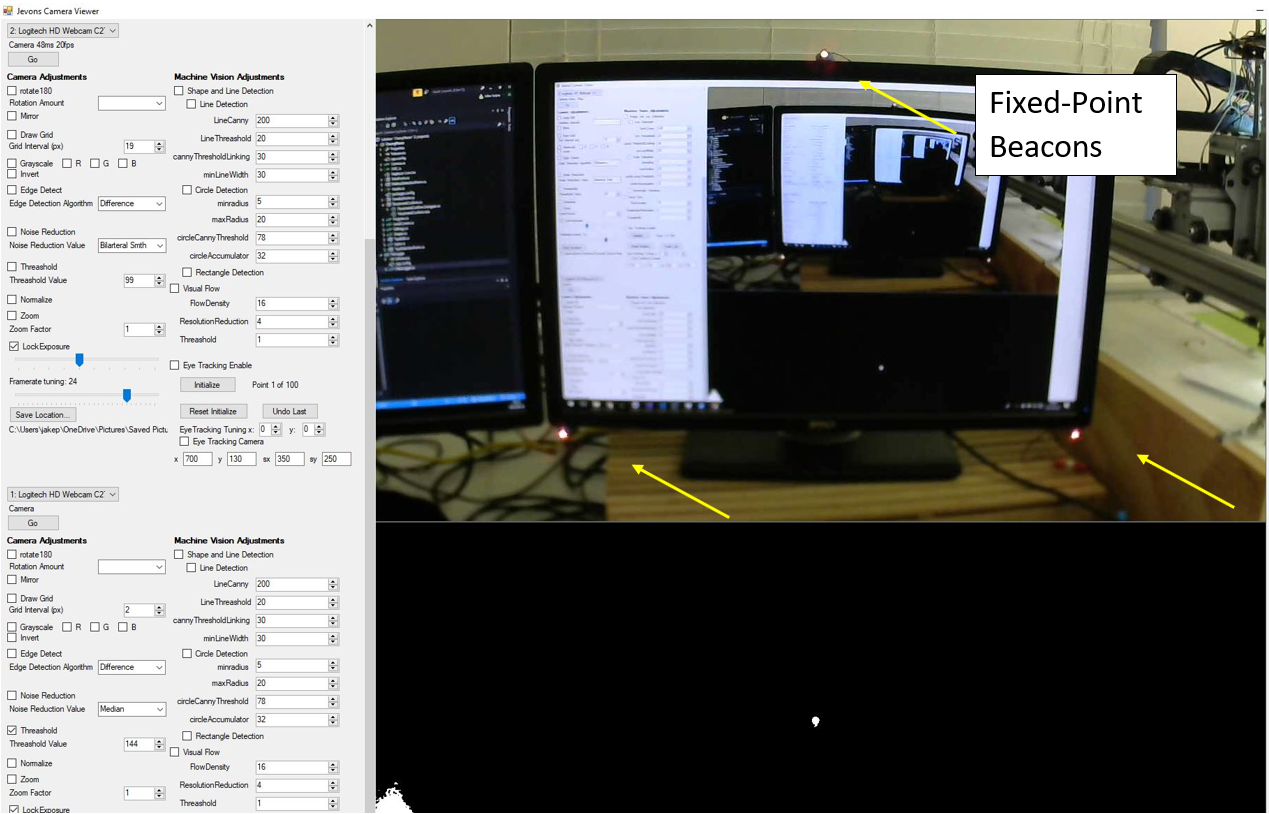

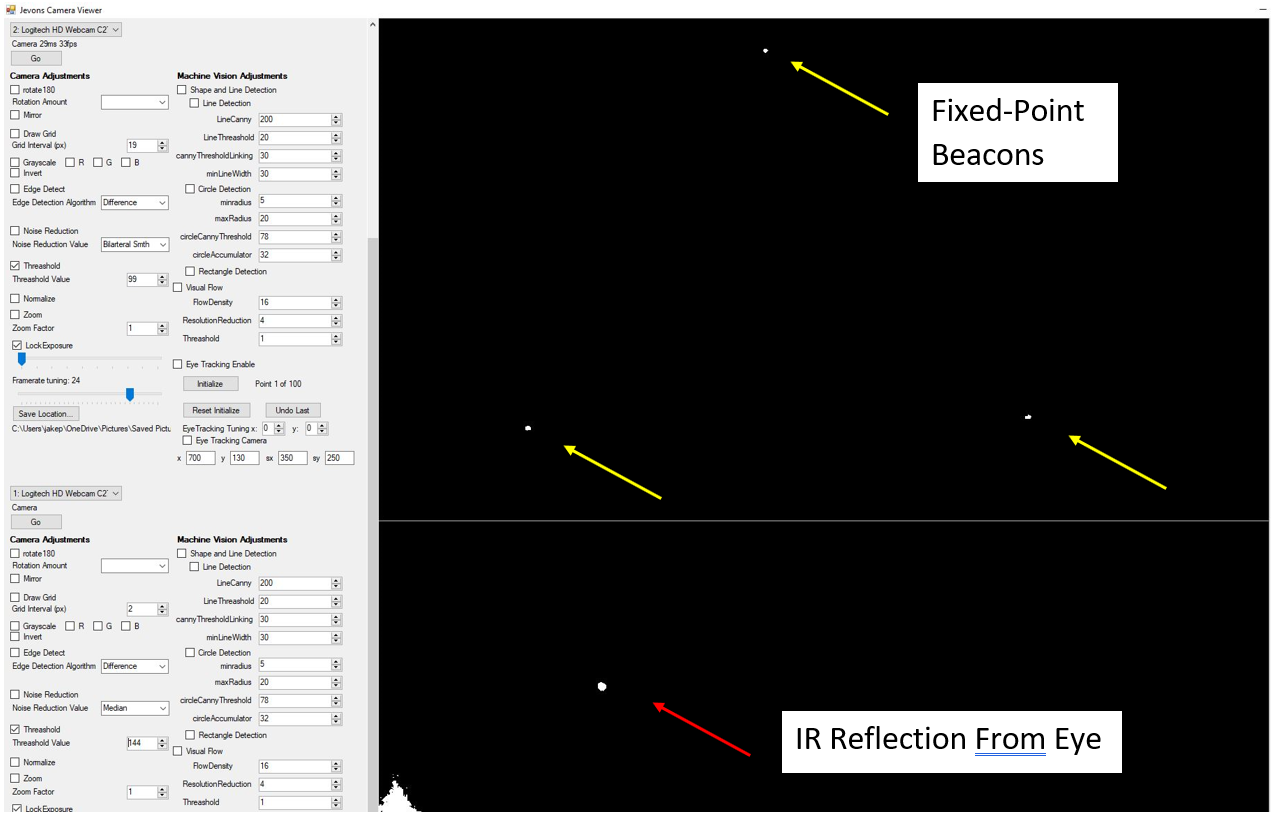

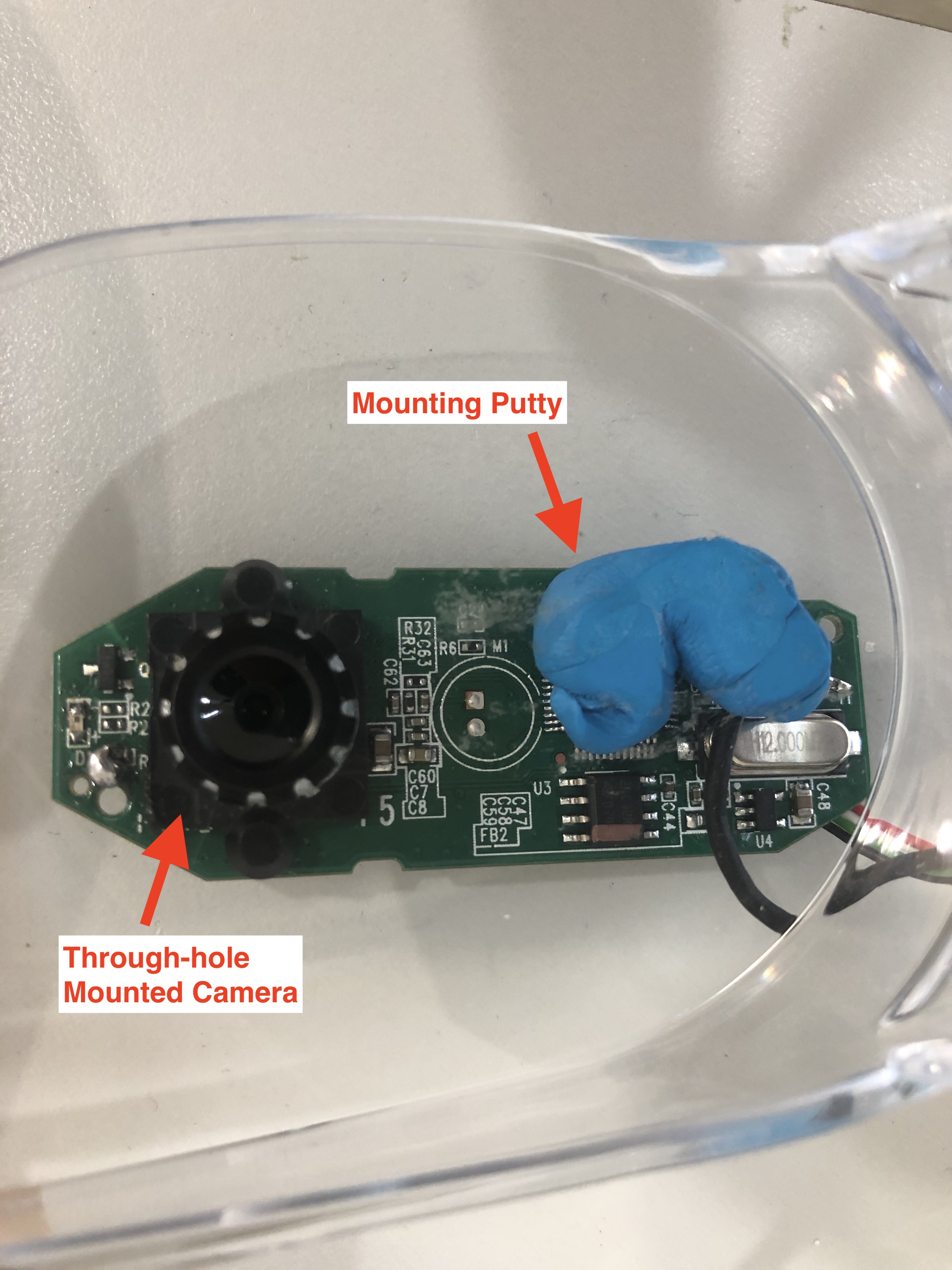

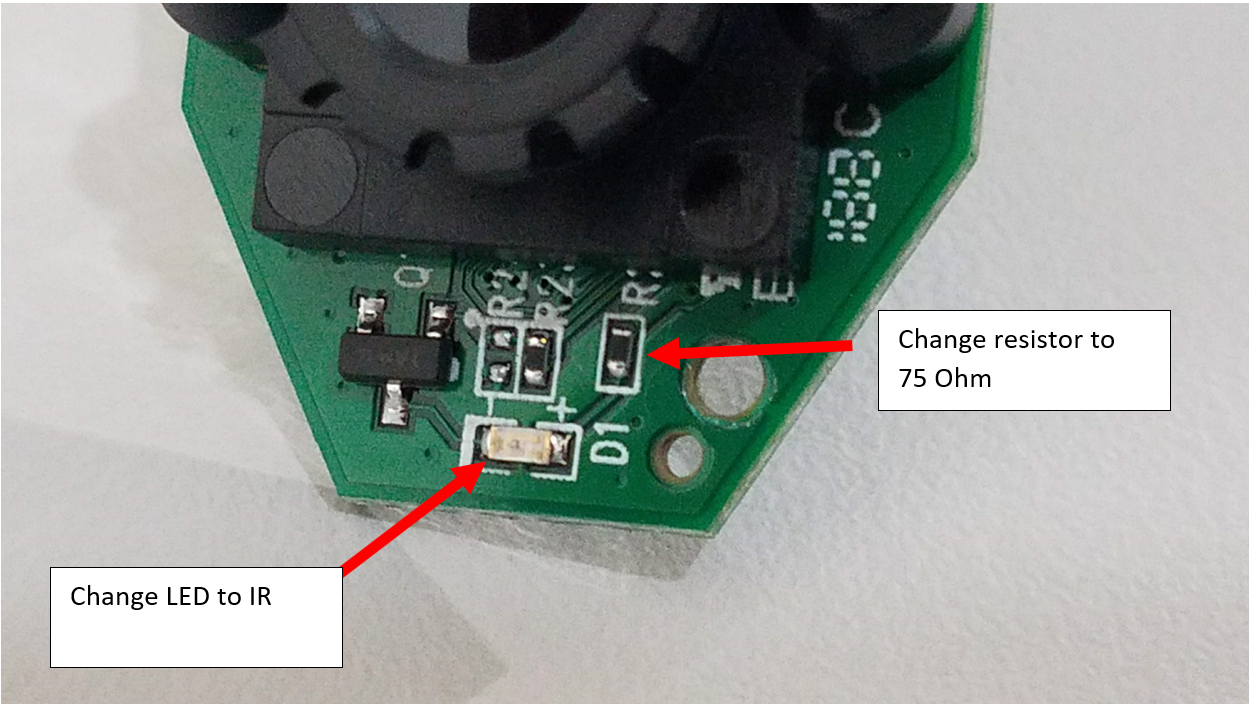

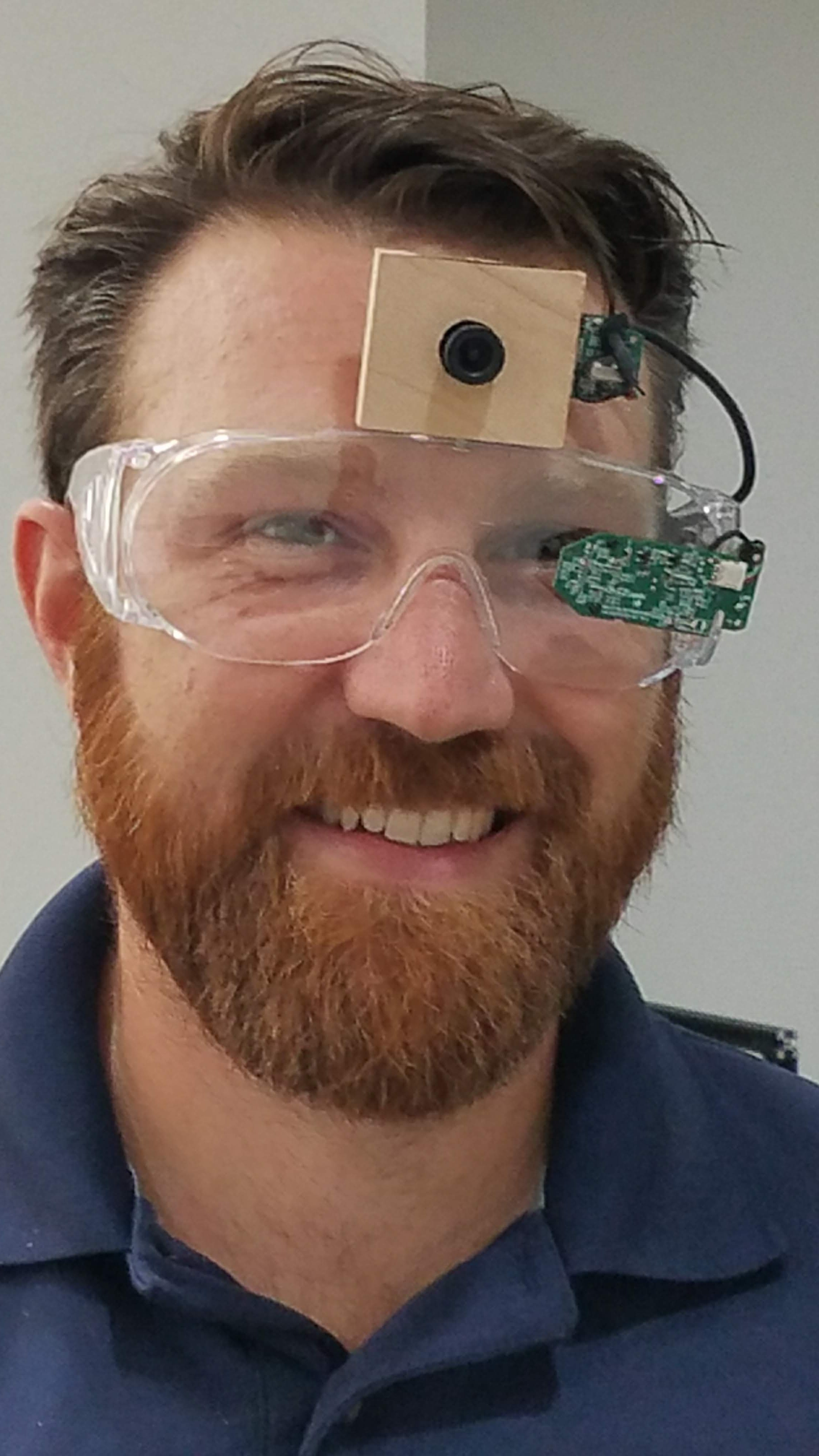

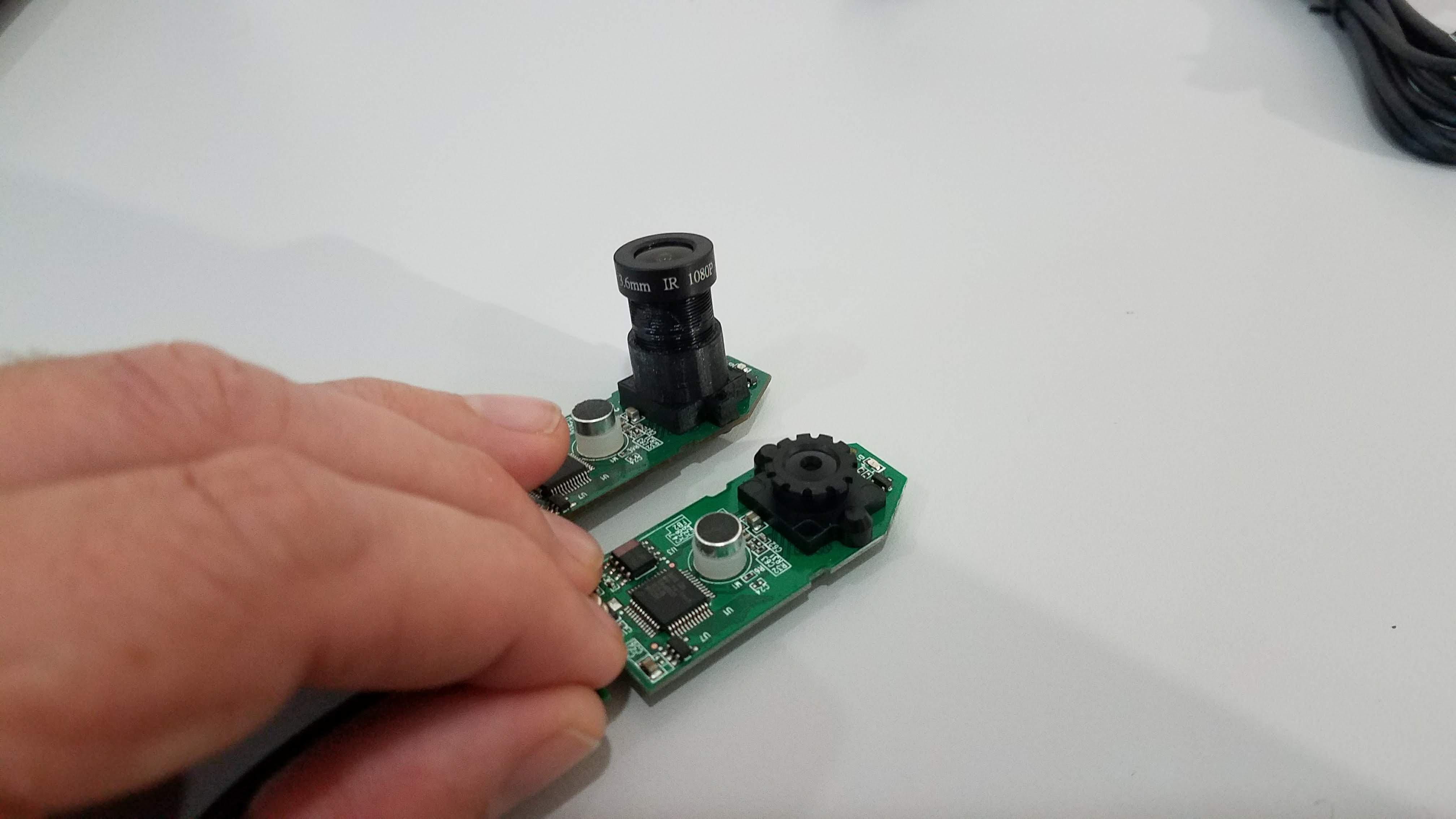

The system maps the user's gaze onto a working surface using a set of fixed-point laser beacons on that surface and two cameras. One camera looks at the user's eye, and the other looks forward to see what the user is looking at. The eye camera captures an infrared reflection off the user's eye, calculates the direction of the user's gaze, and defines a "looking vector." The forward camera recognizes the fixed-point beacons on the working surface, measures the pixel distances between the beacons in each video frame, and determines the user's position relative to the working surface. Using the information from both cameras, the system maps the "looking vector" onto the working surface, communicating the user's desired location in space to a machine simply by looking.
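The page describes measuring beacon distances but not the exact math. One standard way to relate a flat surface to a camera image, named here as a swapped-in technique, is a planar homography fitted from four beacon correspondences. The sketch assumes four beacons at known, ordered surface positions (in millimetres), and that the "looking vector" has already been converted into a point in the forward-camera image; all function names are hypothetical.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate beacon layout (three beacons collinear?)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_beacons(image_pts, surface_pts):
    """Fit H (with H[2][2] = 1) so that surface ~ H @ image for four beacon pairs."""
    a, b = [], []
    for (x, y), (u, v) in zip(image_pts, surface_pts):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), rearranged to be linear in h
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def image_to_surface(H, x, y):
    """Map a forward-camera pixel (e.g. the gaze point) to surface coordinates."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

Because the beacons are re-detected in every frame, `H` can be refitted per frame, which accounts for the user moving relative to the surface. With more than four beacons, a least-squares fit such as OpenCV's `findHomography` would be the usual choice.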

The flow diagram below shows how the parts of the system work together.

Future Plans:

Calibration Improvements:

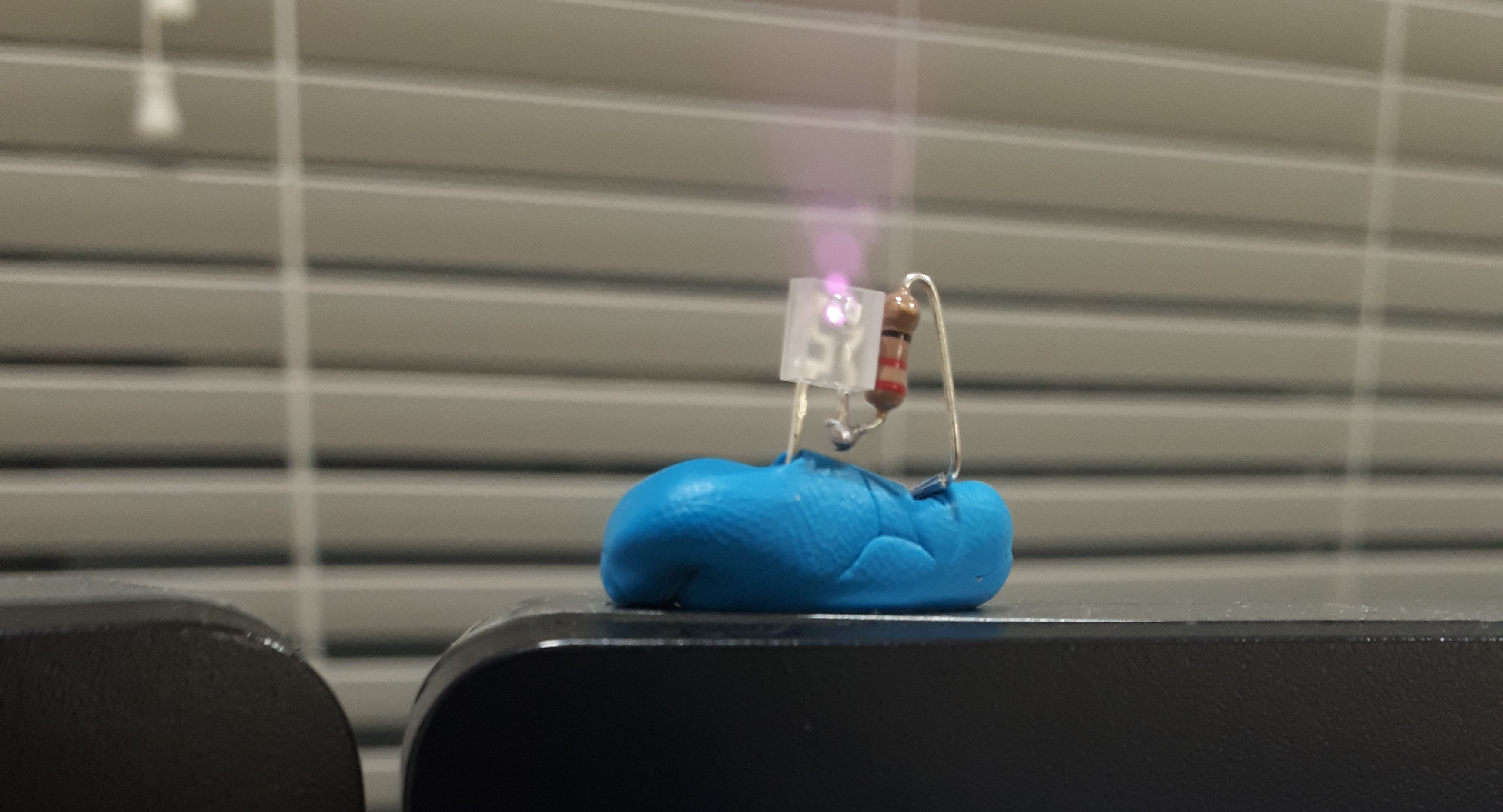

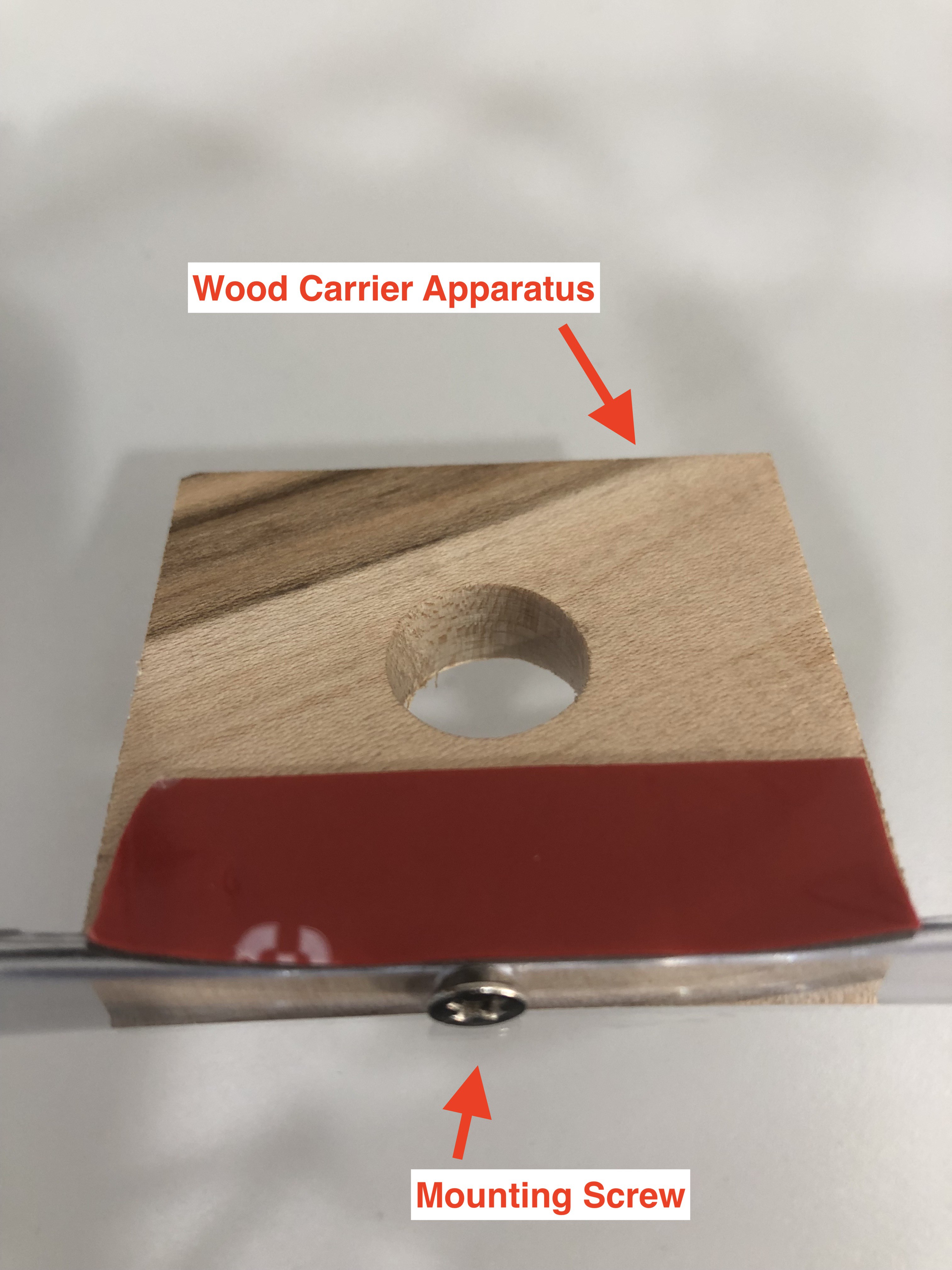

Red lasers, with masking tape over them to diffuse the beams, have been used to mark the surface onto which the user's gaze will be mapped. This should greatly improve calibration and allow the user's gaze to be fed into the computer as an input. Any surface can be used, including very large production floors or warehouses.

The calibration will be converted into a workflow in which the user follows a moving dot with their eyes. Bad calibration values will be removed automatically by a statistical algorithm that has yet to be implemented but will apply basic rules about how far each calibration point should be from the last one and in which direction. A calibration point that is more than twice the distance from the last calibration point will be removed (subsequent input will be interpolated, or the user will be asked to repeat the calibration). Furthermore, any calibration point that lies in the opposite direction from the last point will be removed.
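Since the filtering algorithm is not yet implemented, the sketch below is only one possible reading of the two rules. It assumes that "twice the distance" means more than twice the distance the dot itself moved since the last accepted sample, and that "opposite direction" means the measured gaze moved against the dot's motion (negative dot product). It also assumes the first sample is trustworthy. Rejected samples become `None`, to be interpolated or re-collected later. The function name and `max_ratio` parameter are hypothetical.

```python
import math


def filter_calibration(targets, measured, max_ratio=2.0):
    """Return measured gaze points with rule-breaking samples replaced by None.

    targets[i]  -- where the moving dot was shown for sample i
    measured[i] -- gaze point the tracker reported for sample i
    """
    kept = [measured[0]]  # assumption: the first sample is trusted as-is
    last = 0  # index of the last accepted sample
    for i in range(1, len(measured)):
        # Expected step: how far, and which way, the dot moved since the last good sample
        ex = targets[i][0] - targets[last][0]
        ey = targets[i][1] - targets[last][1]
        # Observed step of the measured gaze over the same interval
        dx = measured[i][0] - measured[last][0]
        dy = measured[i][1] - measured[last][1]
        too_far = math.hypot(dx, dy) > max_ratio * math.hypot(ex, ey)
        backwards = dx * ex + dy * ey < 0
        if too_far or backwards:
            kept.append(None)
        else:
            kept.append(measured[i])
            last = i
    return kept
```

Comparing against the last *accepted* sample, rather than the previous raw sample, keeps one bad reading from causing the next good reading to be rejected as well.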

Eye Tracking Logging and Analysis:

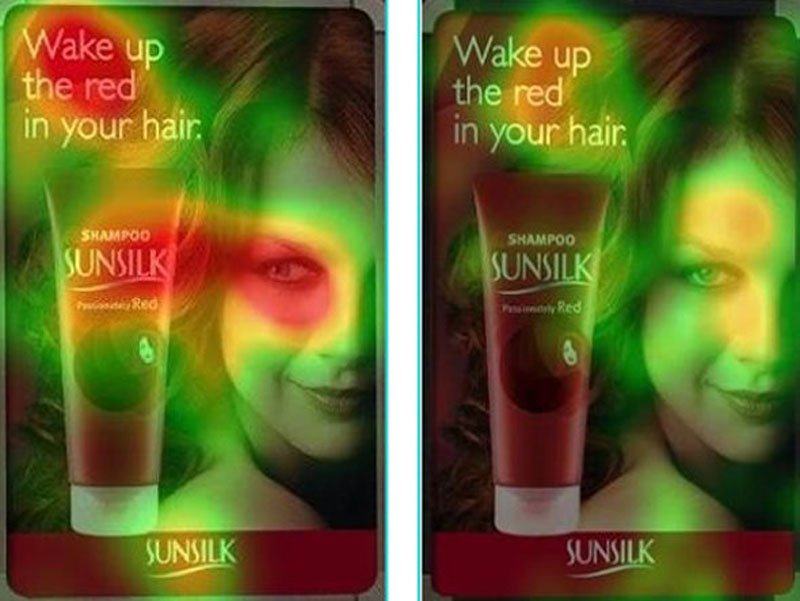

Currently no eye-tracking data is logged. A custom-built logging library has been added to address this. Video will also need to be recorded so that the user's gaze can be mapped onto a specific image or video for further analysis. This means a timecode must be recorded with the video and matched to the file the system creates containing the user's gaze data. An R script may be written to produce heat maps for static UI analysis.
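The custom logging library's format is not documented here, so the sketch below assumes a simple CSV log of `(timestamp_s, gaze_x, gaze_y)` rows sharing a clock with the video recording. It shows the three steps described above: writing and reading the log, converting a gaze timestamp into the video frame shown at that moment (given the video's start time and frame rate), and binning gaze points into a coarse heat-map grid. Column names and function names are hypothetical; the planned heat maps would be made in R, and Python is used here only for consistency with the other examples.

```python
import csv
import io


def gaze_log_to_csv(samples):
    """Serialise (timestamp_s, x, y) samples to CSV text (write this to a file in practice)."""
    buf = io.StringIO(newline="")
    writer = csv.writer(buf)
    writer.writerow(["timestamp_s", "gaze_x", "gaze_y"])
    for t, x, y in samples:
        writer.writerow([f"{t:.3f}", x, y])
    return buf.getvalue()


def gaze_log_from_csv(text):
    """Parse the CSV text back into (timestamp_s, x, y) float tuples."""
    reader = csv.DictReader(io.StringIO(text, newline=""))
    return [(float(r["timestamp_s"]), float(r["gaze_x"]), float(r["gaze_y"])) for r in reader]


def frame_for_timestamp(t, video_start, fps):
    """Index of the video frame on screen at time t, or -1 if t precedes the video."""
    if t < video_start:
        return -1
    return int((t - video_start) * fps)


def heat_map(points, width, height, cols, rows):
    """Count gaze points per grid cell over a width x height image."""
    grid = [[0] * cols for _ in range(rows)]
    for x, y in points:
        if 0 <= x < width and 0 <= y < height:
            grid[int(y * rows / height)][int(x * cols / width)] += 1
    return grid
```

For a static UI, every sample maps onto the same screenshot, so the heat map can be built directly from the logged points; for video, each sample is first assigned to its frame with `frame_for_timestamp`.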

Machine Addressing and Control:

The end goal of this project is to interact with a machine and direct its end effector (or the machine itself) to a location. This is currently an extremely difficult problem to solve hands-free. Solving it will let the user direct a machine without taking their hands off their current task.

Currently, the proof of concept involves moving a modified pick-and-place machine to pick up and move blocks based on the user's gaze. Please see the Machine Control branch on GitHub for more information.
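The Machine Control branch is not reproduced here, so the following is a hypothetical sketch, not the project's code. It assumes the machine accepts standard G-code linear moves (`G1 X… Y… F…`) in millimetres, that gaze points have already been mapped to machine coordinates, and that a move is triggered only after the gaze dwells within a small radius for several consecutive samples. `DwellSelector`, `gaze_to_gcode`, and all parameter values are illustrative assumptions.

```python
import math


class DwellSelector:
    """Hypothetical helper: report a target once the gaze stays put long enough."""

    def __init__(self, radius, samples_needed):
        self.radius = radius
        self.samples_needed = samples_needed
        self.anchor = None
        self.count = 0

    def update(self, x, y):
        if self.anchor is None or math.dist(self.anchor, (x, y)) > self.radius:
            self.anchor = (x, y)  # gaze moved: start a new dwell here
            self.count = 0
        self.count += 1
        # Fire exactly once per dwell, when the threshold is first reached
        return self.anchor if self.count == self.samples_needed else None


def gaze_to_gcode(x_mm, y_mm, x_max, y_max, feed=3000):
    """Clamp a target to the machine's travel and format a G-code linear move."""
    x = min(max(x_mm, 0.0), x_max)
    y = min(max(y_mm, 0.0), y_max)
    return f"G1 X{x:.2f} Y{y:.2f} F{feed}"
```

A dwell requirement is one way to keep ordinary glances from moving the machine; clamping keeps a noisy gaze estimate from commanding a move outside the machine's travel.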

Licensing:

This project uses an Apache...

John Evans

James Gibbard

alex

Dan Schneider

Dear John, thank you for your work. Following the "get started" PDF, I can run the code and open the camera viewer, but every time I select a USB camera and click 'go', I get a message that the camera is being used by another process. I have reset and adjusted my camera settings several times, but the problem persists. How can I solve it?